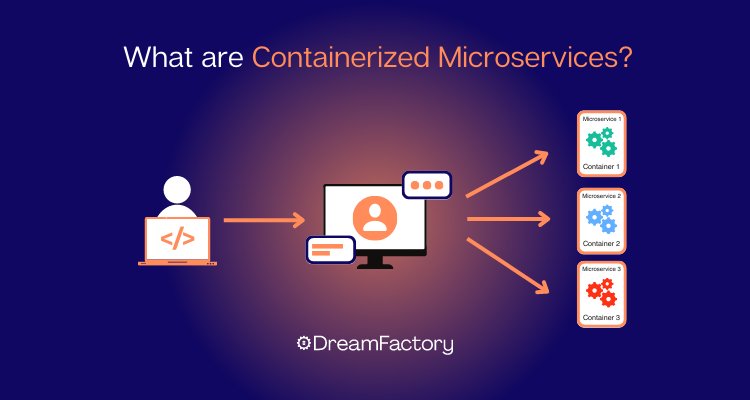

Containerized microservices are essential to cloud migration and digital transformation plans. Do you have a clear picture of what containerized microservices are and why they’re important?

In this guide, you'll learn about containers, microservices, and how they work together, along with a bit of history that explains how the need for containers came to be.

Here are the key things to know about containerized microservices:

- Containers virtualize multiple application runtime environments on the same operating system instance, providing isolation and portability.

- Containerized microservices offer reduced overhead, increased portability, faster application development, and easier adoption of a microservices architecture.

- Challenges in containerized microservices include container orchestration, service discovery and load balancing, network complexity, data consistency and synchronization, monitoring and observability, security and access control, and DevOps and continuous delivery.

- Container orchestration tools like Kubernetes and Docker Swarm help address the challenges of managing and automating containerized microservices.

- Strategies such as synchronous and asynchronous communication, service discovery mechanisms, API gateways, message queues, and event-driven architectures can ensure effective communication and coordination between microservices.

What Are Containerized Microservices?

Containers – or containerized environments – let you virtualize multiple application runtime environments on the same operating system instance (or, to be more technically precise, on the same kernel).

As a popular choice for running microservices applications, containers achieve operating system virtualization by “containing” only what an application requires to run autonomously. Containers include executables, code, libraries, and files, and package them all as a single unit of execution. From the perspective of the containerized microservice, it has its own file system, RAM, CPU, storage, and access to specified system resources. It doesn’t even know it’s in a container!

Unlike a virtual machine (VM), containers don’t need to contain a separate operating system image. This makes containers lightweight and portable and significantly reduces the overhead required to host them. Thanks to this efficiency, containers give you more virtual runtime environments for the money spent. They also start up dramatically faster, because they don’t have to boot an operating system when they launch.

Being able to run multiple containers on a single OS kernel is valuable when constructing a cloud-based microservices architecture. Compared to virtual machines, you can often run about three times as many containers on a single server, and you can also run many containers inside a single virtual machine. That density saves a substantial amount of resources and server costs.
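To see why sharing one kernel raises density, here is a back-of-the-envelope sketch that counts memory only. Every figure in it is a hypothetical assumption chosen for illustration (a 64 GiB server, 2 GiB of guest OS per VM, 50 MiB of runtime overhead per container, 512 MiB per microservice), not a benchmark.

```python
# Back-of-the-envelope density estimate. Every figure is a hypothetical
# assumption for illustration, not a measured benchmark.
HOST_RAM_MIB = 64 * 1024        # assumed server memory: 64 GiB
HOST_OS_MIB = 2 * 1024          # assumed host OS footprint, paid once
SERVICE_RAM_MIB = 512           # assumed working memory per microservice
VM_OS_OVERHEAD_MIB = 2 * 1024   # assumed guest OS footprint inside each VM
CONTAINER_OVERHEAD_MIB = 50     # assumed runtime overhead per container


def instances_that_fit(per_instance_mib: int) -> int:
    """Count how many instances fit in the RAM left after the host OS."""
    return (HOST_RAM_MIB - HOST_OS_MIB) // per_instance_mib


# Each VM pays for its own guest OS; each container pays only a small overhead.
vms = instances_that_fit(SERVICE_RAM_MIB + VM_OS_OVERHEAD_MIB)
containers = instances_that_fit(SERVICE_RAM_MIB + CONTAINER_OVERHEAD_MIB)
print(f"VMs: {vms}, containers: {containers}, ratio: {containers / vms:.1f}x")
# VMs: 24, containers: 112, ratio: 4.7x
```

Change the assumed overheads and the ratio moves a lot, which is why published density figures vary; CPU, storage, and licensing also shift the real-world result.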

How the Limitations of Virtual Machines Led to Containers

Before there were containers, there were virtual machines (VMs). Organizations have used VMs since the 1960s to run multiple operating system instances on the same server. VMs let you isolate applications from each other for greater stability without investing in another physical server for each instance.

In those respects, VMs can save you money and resources. The problem is that a VM still uses a lot of system resources. That’s because VMs make a virtual copy of everything, including the operating system and the emulated hardware it needs to run. That’s fine if you have enough unused server resources to support all the VMs you need.

But if you’re running VMs in the cloud, the resources they consume can wipe out those savings. Spinning up additional VMs means paying for more cloud RAM, compute time, and operating system licenses. The high cost of running many virtual machines in the cloud is a top reason many organizations are switching to “container” technology, a lighter-weight, faster, and more cost-effective alternative.

How Containerized Microservices Work

A great way to introduce how containerized microservices work is to describe the other strategies for running microservices (and explain what’s wrong with them):

- Each microservice runs on its own physical server with its own operating system instance: This approach keeps the microservices isolated from each other, but it’s wasteful. Modern servers have the processing power to handle multiple operating system instances, so a separate physical server for each microservice isn’t necessary.

- Multiple microservices run on one operating system instance on the same server: This is risky because it doesn’t isolate the microservices from each other. Conflicting application components and library versions increase the chance of failures, and a problem with one microservice can trigger cascading failures that interrupt the operation of others.

- Multiple microservices run on different virtual machines (VMs) on the same server: This provides a unique execution environment for each microservice to run autonomously. However, each VM runs its own copy of the operating system, so you may need to license a separate OS for each VM. Running an entire additional OS for every microservice is also an unnecessary burden on system resources.

- Each microservice runs in its own container on a shared OS instance: Packaging each microservice with the executables and libraries it needs lets it operate autonomously, with reduced interdependency on the others. Moreover, multiple containers can run on a single OS instance, which avoids per-VM OS licensing costs and reduces the burden on system resources.

The Benefits of Containers

Since containers have come into wide use, sysadmins and IT leaders alike have enjoyed the many benefits of containers. Here's a review of their characteristics and the value they bring to the enterprise:

Reduced overhead

Without the need for an operating system image, containers use fewer system resources than virtual machines. This translates into more efficient server utilization and no additional OS licensing costs.

Increased portability

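Containerized microservices and apps are easy to deploy across a wide variety of environments, from a developer’s laptop to on-premises servers and public clouds, and they behave consistently wherever they run. The main requirement is a host with a compatible kernel and container runtime; on macOS and Windows, for example, Linux containers run inside a lightweight VM.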

Faster application development

By dividing a monolithic application into a network of containerized, independently running microservices, you make application development faster and easier to organize. Small teams can work more granularly on different parts of an application, focusing on code quality with less risk of coding conflicts. This results in faster deployment, patching, and scaling.

Easier adoption of a microservices architecture

Containers have become the de facto choice for adopting a microservices architecture because they’re less expensive and less process heavy compared to other strategies.

Smaller than virtual machines

Containers require less storage space than VMs. In many cases, a container image is 10% or less the size of a VM image.

Faster startup than virtual machines

Containers start up in seconds, sometimes milliseconds, because they don’t have to boot an operating system each time they launch, as a VM does. Faster startup means new instances are ready sooner, which improves the app user experience.

Tools for Containerized Microservices

The most well-known tools for building and managing containerized microservices are Docker and Kubernetes. They automate the process of using Linux cgroups and namespaces to build and manage containers.

Docker focuses on creating containers, while Kubernetes focuses on container orchestration: the automated management of deploying, scaling, networking, and monitoring the containers that make up a microservices application, typically across a cluster of machines.

Here's a closer look at these two popular tools for containerized microservices.

Docker

Docker was released in 2013 as the first large-scale, open-source containerization solution. Built to make Linux container features such as cgroups and namespaces more accessible and easier to use, the Docker platform has since become synonymous with containers and containerized microservices.

Soon after Docker’s release, forward-thinking enterprises began using the platform to build containerized runtime environments. This helped support their cloud migration, digital transformation, and microservices efforts. By replacing VMs with Docker-built containers, an enterprise can reduce its cloud-compute overhead expenses.

Docker’s most popular benefits include:

- Built-in security: Containerizing an app with Docker isolates it from other processes on the host, which reduces the chance that code running outside the container can tamper with the app (and vice versa). This provides a baseline of application security without writing any extra code.

- Scalability: Once a web-based microservice runs in a Docker container, it can be deployed on any host with a compatible container runtime, and you can start more copies as demand grows. Users can access the microservice via their smartphones, tablets, laptops, or PCs. This significantly reduces your deployment and scalability challenges.

- Reliable deployment infrastructure: Docker images are built from declarative Dockerfiles and identified by version tags, so everyone on your development team works with the same, reproducible environment.

- Options: Docker’s premium Enterprise edition offers powerful extensions, a choice of tools and languages, and globally consistent Kubernetes environments. The enterprise edition also includes commercial phone support.

Kubernetes

Kubernetes is another name that has become synonymous with containerization. It is a container orchestration tool that helps developers run and support containers in production. The open-source container workload platform lets you manage a group of containerized microservices as a “cluster.” Within a Kubernetes cluster, you can distribute available CPU and memory resources according to the requirements of each containerized service. You can also move containers to different virtual hosts according to their load.
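Distributing containers across a cluster according to their CPU and memory requirements is essentially a bin-packing problem. The sketch below uses a simple first-fit-decreasing heuristic as an illustrative stand-in; it is not Kubernetes’ actual scheduler, which filters and scores nodes using many more criteria. The node names, service names, and sizes are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A cluster node and its remaining (unallocated) capacity."""
    name: str
    cpu_millicores: int
    memory_mib: int
    pods: list[str] = field(default_factory=list)


def schedule(requests: dict[str, tuple[int, int]], nodes: list[Node]) -> dict[str, str]:
    """Assign each service's (CPU, memory) request to a node.

    Uses first-fit decreasing: the largest requests are placed first,
    each on the first node that still has enough CPU and memory.
    Raises RuntimeError if a request fits on no node.
    """
    placement: dict[str, str] = {}
    # Largest memory request first; CPU breaks ties.
    ordered = sorted(requests.items(), key=lambda kv: (kv[1][1], kv[1][0]), reverse=True)
    for service, (cpu, mem) in ordered:
        for node in nodes:
            if node.cpu_millicores >= cpu and node.memory_mib >= mem:
                node.cpu_millicores -= cpu
                node.memory_mib -= mem
                node.pods.append(service)
                placement[service] = node.name
                break
        else:  # no node had room for this request
            raise RuntimeError(f"no node can fit {service}")
    return placement


nodes = [Node("node-a", 2000, 4096), Node("node-b", 2000, 4096)]
requests = {  # service: (CPU millicores, memory MiB), hypothetical values
    "orders": (500, 1024),
    "payments": (1000, 2048),
    "catalog": (250, 512),
    "search": (1000, 3072),
}
placement = schedule(requests, nodes)
print(placement)
```

Here the 3 GiB search service is placed first on node-a, so the 2 GiB payments service no longer fits there and lands on node-b; the smaller services then fill the remaining gaps.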

Working together with container tools like Docker, Kubernetes groups containers into “pods.” It then automates container deployment and load balancing with the following features:

- Automatic bin packing: You tell Kubernetes how much CPU and memory each container needs, and it fits containers onto the cluster’s nodes to make the best use of available resources.

- Service discovery and load balancing: Kubernetes can expose containers through a DNS name or their own IP addresses, and it distributes network traffic across them when load is high.

- Storage orchestration: Kubernetes allows you to mount whatever kind of storage system you want to use, whether it’s cloud-based or on-premises.

- Automatic “healing” to manage container crashes: Kubernetes restarts containers that fail, replaces them when needed (including rescheduling them onto a healthy node if their node goes down), and stops containers that don’t respond to user-defined health checks.

- Autoscaling: Kubernetes includes a variety of features that automatically deploy new containers. They can expand the resources of container clusters and manage the resources assigned to containers within clusters. These automatically trigger according to preset thresholds to ensure overall system stability.
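Threshold-based autoscaling can be made concrete with the rule Kubernetes documents for its Horizontal Pod Autoscaler: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below applies that formula, plus a 10% tolerance band and min/max bounds; the function name and CPU figures are hypothetical, and the real controller also accounts for things like pods that aren’t ready yet, missing metrics, and scale-down stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Proportional scaling rule in the style of the Kubernetes HPA.

    desired = ceil(current_replicas * current_metric / target_metric),
    left unchanged when the ratio is within `tolerance` of 1.0, and
    clamped to [min_replicas, max_replicas].
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: avoid flapping
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 3 replicas averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(3, current_metric=90, target_metric=60))  # 5
# 4 replicas averaging 20% CPU against a 60% target: scale in.
print(desired_replicas(4, current_metric=20, target_metric=60))  # 2
```

The tolerance band keeps small metric fluctuations from triggering constant scale-out and scale-in cycles.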

Kubernetes is a highly portable solution, supported by the biggest cloud providers: Google Cloud (Google Kubernetes Engine), Amazon Web Services (Amazon Elastic Kubernetes Service), and Microsoft Azure (Azure Kubernetes Service). Private cloud platforms such as OpenStack, along with the on-premises offerings from Microsoft, Amazon, and Google, have also incorporated Kubernetes container services.

Two popular alternatives to Kubernetes include:

- Docker Swarm: Swarm is a container orchestration tool native to the Docker ecosystem. Swarm is a simpler solution for teams that don’t require the advanced features of Kubernetes. Because of its simplicity, Swarm facilitates faster container deployment in response to new demands.

- Apache Mesos: Mesos is an open-source cluster manager that, like Kubernetes, can orchestrate containers. Unlike Kubernetes, Mesos orchestrates other types of applications in addition to containerized ones. That’s why Mesosphere, the company behind Mesos’ commercial distribution, called its product DC/OS (Datacenter Operating System). As Dorothy Norris on Stratoscale points out, “Mesos understands that …we should use the best tools for each particular situation.”

Common Containerized Microservices Challenges

Containerized microservices also come with their own set of challenges. Here are some common challenges that organizations may face when they start adopting containerized microservices:

- Container Orchestration: Managing a large number of containers and coordinating their deployment, scaling, and networking can be complex. Container orchestration tools like Kubernetes help streamline this process; however, they require a significant investment in learning and infrastructure setup.

- Service Discovery and Load Balancing: As the number of microservices increases, it becomes crucial to have efficient service discovery mechanisms to locate and communicate with each service. Load balancing is also essential to distribute incoming traffic across multiple instances of a microservice. Implementing robust service discovery and load balancing strategies can be challenging in containerized environments.

- Network Complexity: Microservices communicate with each other over a network, and managing the network architecture can be complicated. Containerized microservices often span multiple containers and hosts, requiring careful configuration and security considerations to ensure proper communication and data flow between services.

- Data Consistency and Synchronization: In a distributed microservices architecture, maintaining data consistency and synchronization across services can be challenging. Each microservice may have its own data store or database, and ensuring data integrity and coherence can be complex. Implementing proper data synchronization strategies, such as event-driven architectures or distributed transaction management, becomes crucial in containerized microservices.

- Monitoring and Observability: With a large number of microservices running in containers, monitoring and observability become essential for maintaining system health and diagnosing issues. Collecting and analyzing logs, metrics, and traces from various containers and services can be challenging without proper monitoring tools and strategies in place.

- Security and Access Control: Containerized microservices introduce additional security considerations. Protecting containerized environments from vulnerabilities, securing inter-service communication, managing access controls, and ensuring data privacy are critical challenges to address. Proper security measures, such as implementing authentication, authorization, and encryption mechanisms, are necessary to mitigate risks.

- DevOps and Continuous Delivery: Adopting containerized microservices often requires a shift in the development and deployment process. Organizations need to embrace DevOps practices and establish robust CI/CD pipelines to automate the building, testing, and deployment of containerized microservices. This cultural and operational shift can be a challenge, requiring teams to adapt their workflows and adopt new tools and processes.
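Service discovery and load balancing, the second challenge above, can be illustrated with a toy in-memory registry: instances register under a service name, and callers resolve that name to one endpoint in round-robin order. Everything here (the class, service name, and addresses) is hypothetical; in Kubernetes, cluster DNS and Service objects fill this role.

```python
from collections import defaultdict


class ServiceRegistry:
    """Toy in-memory service registry with round-robin resolution."""

    def __init__(self) -> None:
        self._endpoints: dict[str, list[str]] = defaultdict(list)
        self._cursor: dict[str, int] = defaultdict(int)

    def register(self, service: str, endpoint: str) -> None:
        """Add an instance (e.g. when its container starts)."""
        if endpoint not in self._endpoints[service]:
            self._endpoints[service].append(endpoint)

    def deregister(self, service: str, endpoint: str) -> None:
        """Remove an instance (e.g. when its container stops or fails)."""
        if endpoint in self._endpoints[service]:
            self._endpoints[service].remove(endpoint)

    def resolve(self, service: str) -> str:
        """Return the next endpoint for a service in round-robin order."""
        endpoints = self._endpoints.get(service)
        if not endpoints:
            raise LookupError(f"no registered instances of {service!r}")
        index = self._cursor[service] % len(endpoints)
        self._cursor[service] = index + 1
        return endpoints[index]


registry = ServiceRegistry()
registry.register("orders", "10.0.0.11:8080")
registry.register("orders", "10.0.0.12:8080")
print([registry.resolve("orders") for _ in range(3)])
# ['10.0.0.11:8080', '10.0.0.12:8080', '10.0.0.11:8080']
registry.deregister("orders", "10.0.0.11:8080")  # e.g. that container crashed
print(registry.resolve("orders"))  # 10.0.0.12:8080
```

Deregistering failed instances matters as much as registering new ones: otherwise the load balancer keeps sending traffic to containers that no longer exist.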

Using Containerized Microservices: Today and Tomorrow

Containerized microservices have already enabled many of the apps and features people use daily. The abstraction of applications, APIs, and other development roles from the hardware layer has enabled tremendous growth for the container concept. Of course, technology never sits still and new trends emerge all the time. Here's a look at some of the most exciting concepts that build on the ideas of containers and microservices:

DevOps

DevOps is the merger of traditional development and IT operations teams. DevOps practices place the focus on collaboration across the entire IT organization. Key components of a DevOps organization are automated and standardized deployments, freeing staff from repetitive tasks. The fast, standardized deployments that containers enable make DevOps and containerized microservices a perfect match.

AIOps

Artificial Intelligence Operations, or AIOps, is the practice of bringing AI into the world of IT operations. Identifying system failures or security breaches has traditionally been a time-consuming process. Cloud platforms and distributed containerized systems generate large volumes of logs and metrics that AI tools can analyze, which helps address these problems. AIOps can help identify and prevent IT issues, along with automating security monitoring and intrusion detection.

Low-Code APIs

Low-code is a software development approach based on building applications with a simple drag-and-drop UI. Emerging alongside this trend are low-code APIs: application frameworks that let developers build full-featured apps visually, with minimal hand-written code. Industry pundits and analysts are already pointing toward low-code APIs as the next evolution of microservices.

Containers vs. Virtual Machines

Security Differences

- Isolation: VMs provide strong isolation by replicating a full OS instance for each virtual environment, while containers share the host OS kernel, isolating processes through namespaces and control groups (cgroups). This makes containers lighter but potentially exposes them to kernel vulnerabilities.

- Attack Surface: Because containers share the host kernel, a kernel vulnerability or privilege escalation exploit can affect every container on the host, whereas VMs are less exposed since each runs a separate OS instance. To mitigate this, container environments prioritize security practices such as image scanning, role-based access control (RBAC), and runtime policies.
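On Linux, the namespaces that isolate a containerized process are visible under /proc/self/ns. The sketch below reads them: processes in the same container report the same namespace identifiers, while a process in another container (or on the host) reports different identifiers for the namespaces the runtime isolates, even though all of them share one kernel. It assumes a Linux host and simply returns an empty dict elsewhere; the function name is hypothetical.

```python
import os


def current_namespaces() -> dict[str, str]:
    """Map each namespace type (pid, net, mnt, ...) to its identifier.

    Each entry in /proc/self/ns is a symlink whose target looks like
    'pid:[4026531836]'; two processes share a namespace when the
    targets match.
    """
    ns_dir = "/proc/self/ns"
    if not os.path.isdir(ns_dir):
        return {}  # not Linux, or /proc is unavailable
    namespaces = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # some entries may be unreadable in restricted sandboxes
    return namespaces


for kind, ident in current_namespaces().items():
    print(f"{kind:20} {ident}")
```

Running this on the host and inside a container, then comparing the `pid` and `net` lines, shows the isolation that VMs achieve with a separate kernel being achieved here with kernel namespaces instead.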

Performance Metrics

- Startup Time: Containers start up in seconds (or milliseconds) since they don’t boot an OS, unlike VMs, which can take several seconds to minutes to initialize a full OS. This speed makes containers ideal for applications needing rapid scale-up/down capabilities.

- Resource Utilization: Containers use system resources efficiently because they share the host OS kernel rather than replicating OS instances. This enables higher density, often 3-5x more containers than VMs on the same hardware, which makes containers well suited to dense cloud deployments.

- Scalability: Containers scale quickly and efficiently due to low resource overhead and rapid startup. They adapt well to demand fluctuations, especially in orchestrated environments like Kubernetes, while VMs are slower and more costly to scale because of OS dependencies.

In short, containers offer high efficiency, speed, and scalability for distributed applications, though with trade-offs in OS-level isolation compared to VMs.

Final Thoughts on Containerized Microservices

After reading this guide, you should know what containerized microservices are and why containers are a cost-effective alternative to virtual machines. You should also be familiar with the most popular tools for launching and orchestrating containerized microservices.

After launching your containerized microservices, you can use DreamFactory iPaaS (Integration Platform as a Service) to establish data connections. This allows them to work together as an integrated whole. DreamFactory automatically generates REST APIs for any database, so you can securely establish data connections between your apps and microservices in minutes. Sign up today for a free 14-day trial to see for yourself!

Frequently Asked Questions: Containerized Microservices

What are containerized microservices?

Containerized microservices are a software architecture approach that involves breaking down an application into small, independently deployable services. These services are encapsulated within containers, providing isolation and portability.

How do containerized microservices differ from traditional monolithic applications?

Traditional monolithic applications consist of a single codebase and are deployed as a single unit. In contrast, containerized microservices are modular, allowing each service to be developed, deployed, and scaled independently. This approach enables greater agility, scalability, and flexibility.

What are the benefits of using containerized microservices?

Containerized microservices offer numerous benefits, including improved scalability, fault isolation, ease of deployment and management, faster development cycles, and the ability to utilize diverse technologies and programming languages.

What challenges can arise when adopting containerized microservices?

Some common challenges include container orchestration and management, ensuring communication and coordination between microservices, maintaining data consistency, handling security and authentication, and effectively monitoring and debugging the distributed system.

How can container orchestration tools help address the challenges of containerized microservices?

Container orchestration tools like Kubernetes or Docker Swarm help manage and automate the deployment, scaling, and management of containerized microservices. They handle tasks such as service discovery, load balancing, scaling, and monitoring, simplifying the management of a distributed microservices architecture.
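Much of this automation follows a reconcile pattern: the orchestrator repeatedly compares the desired state (say, three running replicas) with the actual state and acts to close the gap. Kubernetes controllers work this way, and it is also how crashed containers get replaced. The sketch below is a hypothetical, in-memory illustration of a single reconcile pass; real controllers watch and update objects through the cluster API rather than editing a Python list.

```python
import itertools

# Hypothetical name generator for replacement replicas.
_replica_ids = itertools.count(1)


def reconcile(desired: int, replicas: list[dict]) -> list[dict]:
    """Run one reconcile pass and return the resulting replica list.

    Replicas whose status is not "Running" are discarded, replacements
    are started until the desired count is met, and any surplus is
    stopped.
    """
    healthy = [r for r in replicas if r["status"] == "Running"]
    while len(healthy) < desired:  # replace crashed or missing replicas
        healthy.append({"name": f"web-{next(_replica_ids)}", "status": "Running"})
    return healthy[:desired]  # scale in if there are too many


replicas = [
    {"name": "web-a", "status": "Running"},
    {"name": "web-b", "status": "Failed"},  # a crashed container
    {"name": "web-c", "status": "Running"},
]
replicas = reconcile(3, replicas)
print([r["name"] for r in replicas])  # ['web-a', 'web-c', 'web-1']
```

Because each pass only compares desired and actual state, the same loop handles crashes, manual scaling changes, and autoscaler decisions without special cases.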

What strategies can be employed to ensure communication and coordination between microservices?

Strategies such as synchronous and asynchronous communication, service discovery mechanisms, API gateways, message queues, and event-driven architectures can be utilized to enable effective communication and coordination between microservices.
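Asynchronous communication through a message queue decouples the sender from the receiver: the producer publishes and moves on, and the consumer processes messages at its own pace. The sketch below imitates that pattern with Python’s asyncio.Queue inside a single process; in production, a dedicated message broker would sit between separately deployed containers. The service and event names are hypothetical.

```python
import asyncio


async def order_service(queue: asyncio.Queue) -> None:
    """Producer: publishes events without waiting for any consumer."""
    for order_id in range(1, 4):
        await queue.put({"event": "OrderPlaced", "order_id": order_id})
    await queue.put(None)  # sentinel: no more events


async def email_service(queue: asyncio.Queue, sent: list) -> None:
    """Consumer: handles events as they arrive."""
    while (message := await queue.get()) is not None:
        sent.append(f"confirmation for order {message['order_id']}")


async def main() -> list:
    queue: asyncio.Queue = asyncio.Queue(maxsize=10)
    sent: list = []
    # Producer and consumer run concurrently; neither calls the other directly.
    await asyncio.gather(order_service(queue), email_service(queue, sent))
    return sent


results = asyncio.run(main())
print(results)
```

The bounded queue (`maxsize=10`) also provides simple back-pressure: if the consumer falls behind, the producer waits instead of exhausting memory.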

How can data consistency be maintained in a containerized microservices environment?

Achieving data consistency can be challenging in a distributed system. Techniques like database per service, event sourcing, distributed transactions, or eventual consistency can be employed to address data consistency requirements based on the specific needs of the application.
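Event sourcing, one of the techniques listed above, records every change to a service’s data as an immutable event and rebuilds current state by replaying those events in order. A minimal sketch, assuming a hypothetical inventory service with two invented event types:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """An immutable record of one state change."""
    kind: str       # "Stocked" or "Reserved" (hypothetical event types)
    sku: str
    quantity: int


def replay(events: list[Event]) -> dict[str, int]:
    """Rebuild current stock levels by applying events in order."""
    stock: dict[str, int] = {}
    for e in events:
        if e.kind == "Stocked":
            stock[e.sku] = stock.get(e.sku, 0) + e.quantity
        elif e.kind == "Reserved":
            stock[e.sku] = stock.get(e.sku, 0) - e.quantity
        else:
            raise ValueError(f"unknown event kind: {e.kind}")
    return stock


log = [
    Event("Stocked", "widget", 10),
    Event("Reserved", "widget", 3),
    Event("Stocked", "gadget", 5),
    Event("Reserved", "widget", 2),
]
print(replay(log))  # {'widget': 5, 'gadget': 5}
```

Because the log is append-only, other microservices can consume the same events to build their own copies of the data, accepting that those copies are eventually consistent rather than instantly in sync.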

What security measures should be considered when using containerized microservices?

Implementing secure container images, applying proper access controls, encrypting sensitive data, enforcing authentication and authorization mechanisms, and implementing network security measures are essential for ensuring the security of containerized microservices.
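One way to enforce authentication between services is to sign each request body with a shared secret and verify the signature on arrival. The sketch below uses Python’s standard hmac module; the secret, payload, and function names are hypothetical, and real deployments typically also sign a timestamp or nonce to block replayed requests, or rely on mutual TLS or signed tokens instead.

```python
import hashlib
import hmac

# Hypothetical shared secret; in practice, inject it from a secrets store
# at runtime rather than baking it into the container image.
SECRET = b"replace-with-a-real-secret"


def sign(body: bytes, secret: bytes = SECRET) -> str:
    """Return a hex HMAC-SHA256 signature for a request body."""
    return hmac.new(secret, body, hashlib.sha256).hexdigest()


def verify(body: bytes, signature: str, secret: bytes = SECRET) -> bool:
    """Check a signature using a constant-time comparison."""
    return hmac.compare_digest(sign(body, secret), signature)


payload = b'{"order_id": 42, "amount": 19.99}'
sig = sign(payload)
print(verify(payload, sig))                           # True
print(verify(b'{"order_id": 42, "amount": 0}', sig))  # False: body was altered
```

`hmac.compare_digest` is used instead of `==` so that the comparison time doesn’t leak how many characters of a forged signature were correct.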

How can the monitoring and debugging of containerized microservices be performed effectively?

Monitoring tools and practices, centralized logging, distributed tracing, and observability techniques can be utilized to gain insights into the performance, availability, and health of containerized microservices. These tools help identify and debug issues in the distributed system.
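Centralized logging and distributed tracing both depend on correlating records from different services. A common approach is to assign a trace ID to each incoming request, pass it along on downstream calls, and include it in every structured log line. The sketch below shows the idea with the standard logging and contextvars modules; the service names and the `trace_id` field are hypothetical, and production systems usually adopt a standard such as W3C Trace Context, often via OpenTelemetry.

```python
import contextvars
import json
import logging
import uuid

# The trace ID of the request currently being handled.
trace_id_var = contextvars.ContextVar("trace_id", default="-")


class JsonFormatter(logging.Formatter):
    """Emit one JSON object per log record, tagged with the trace ID."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "service": record.name,
            "level": record.levelname,
            "trace_id": trace_id_var.get(),
            "message": record.getMessage(),
        })


handler = logging.StreamHandler()  # writes to stderr
handler.setFormatter(JsonFormatter())
for service_name in ("gateway", "inventory"):
    service_logger = logging.getLogger(service_name)
    service_logger.addHandler(handler)
    service_logger.setLevel(logging.INFO)


def inventory_check(sku: str) -> None:
    # Downstream service: logs under the trace ID it was handed.
    logging.getLogger("inventory").info("checking stock for %s", sku)


def handle_request(sku: str) -> str:
    trace_id = uuid.uuid4().hex
    trace_id_var.set(trace_id)  # across containers, this would travel in a request header
    logging.getLogger("gateway").info("received request")
    inventory_check(sku)
    return trace_id


handle_request("widget")
```

A log aggregator can then filter on a single `trace_id` to reconstruct one request’s path through every service it touched.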

Are containerized microservices suitable for all types of applications?

Containerized microservices are well-suited for complex and scalable applications, particularly those with varying and evolving requirements. However, the adoption of containerized microservices should be evaluated based on factors such as application complexity, team expertise, infrastructure capabilities, and project requirements.

Fascinated by emerging technologies, Jeremy Hillpot uses his backgrounds in legal writing and technology to provide a unique perspective on a vast array of topics including enterprise technology, SQL, data science, SaaS applications, investment fraud, and the law.
