DreamFactory 2.7 introduces a new system endpoint - api/v2/system/import that allows you to import data files using a database service of your choice. Currently the feature supports CSV files. Support for XML and JSON is on our roadmap for future releases. This new endpoint is a DreamFactory native endpoint and it is a part of the “system” service. This endpoint is available to use right out of the box without the need for installing additional driver/extensions.

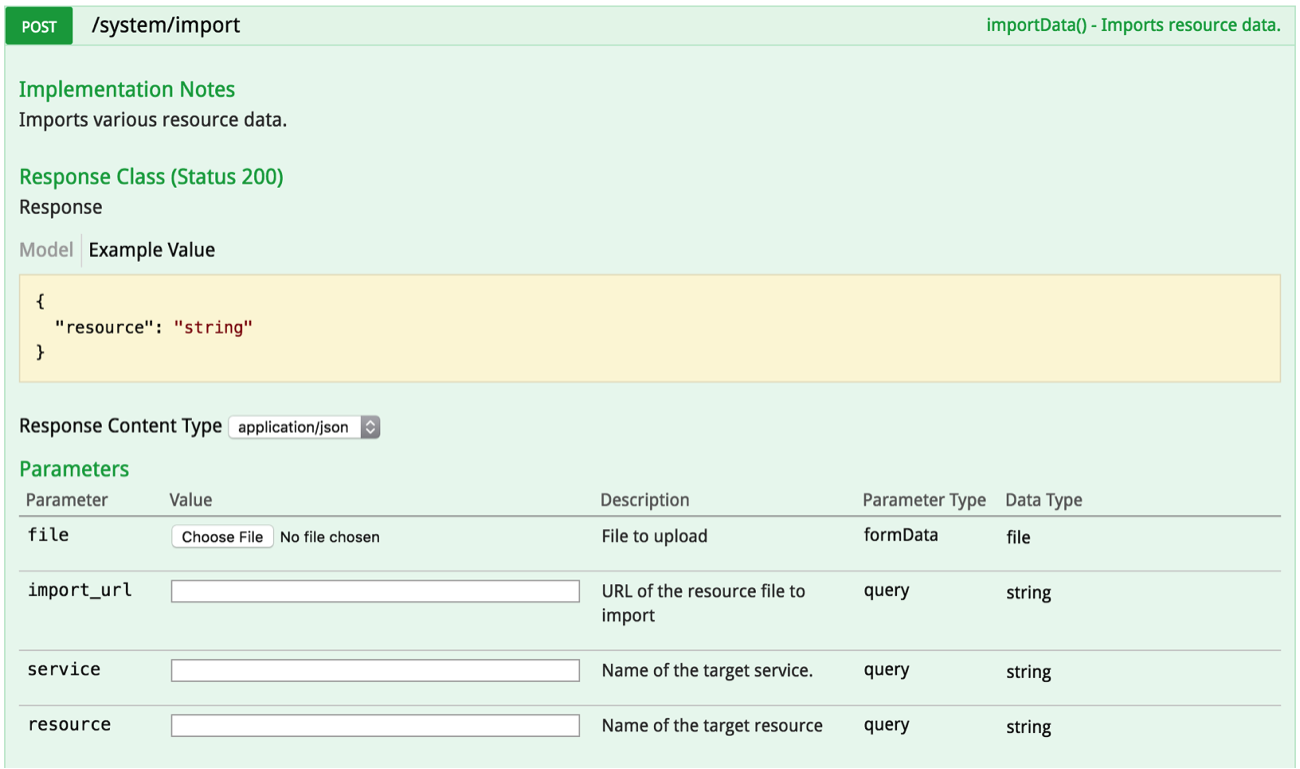

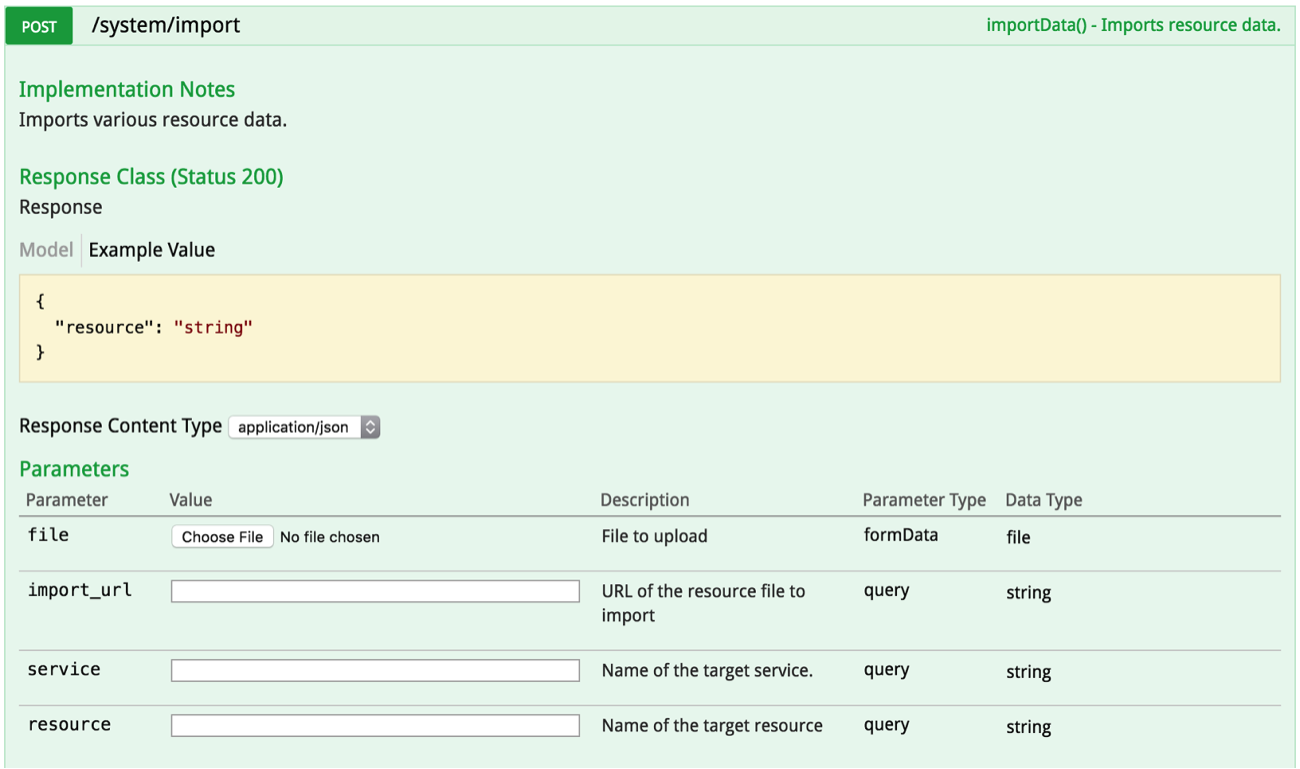

Now let’s dive in and look at this endpoint’s Swagger definition above. This is a very simple, easy to use endpoint. To upload a CSV file, you just need to make a HTTP POST call to the api/v2/system/import endpoint with the options of following parameters.

- file: CSV file to upload. You can upload a file using multipart/form-data. This is not required if you are using ‘import_url’. Otherwise it is required.

- import_url: URL of the CSV file to import. This not required if you are using ‘file’. Otherwise it is required. If both file and import_url is used then import_url will be ignored.

- service: (Optional) Name of the database service to use for this import. Default is ‘db’.

- resource: (Optional) Name of the table where the CSV data will be imported. Default is ‘import_<timestamp>’. NOTE: Importing into an existing table is not currently supported.

Once the data is imported successfully, you will receive a 200 response back from the server with a URL to your imported data. Here is an example response.

{

"resource": "https://example.com/api/v2/db/_table/import_1496940417"

}

Importing a large number of records/rows:

You can import any number of records using this endpoint. However, it imports up to 500 records/rows in a synchronous/blocking call. Anything above 500 records will be pushed into a queue of jobs (500 records each) that will be handled asynchronously. Of course, we designed it this way to prevent a long wait on an import call that tries to insert a large number records and fails eventually.

When importing a CSV file with more than 500 records, you will get the response back from the server right after importing the first 500 records. The rest of the records will be imported in chunks of 500 records per job asynchronously. So, if you try to access your imported data immediately after getting the response back from the server, you will not see all of the data right away. Give it some time and all data will be available eventually.

In order to make sure that the queued jobs are processed properly, a couple of things need to be checked.

- Make sure you are using a database queue. Check your .env file and make sure that QUEUE_DRIVER is set to ‘database’ (QUEUE_DRIVER=database).

- Make sure the application queue worker is running. DreamFactory is built on top of the Laravel PHP framework and therefore utilizes Laravel queuing system. See https://laravel.com/docs/5.4/queues#running-the-queue-worker to learn more about running and maintaining the Laravel queue worker using tools like ‘supervisor’. To run a queue worker you need to run the following command.

php artisan queue:work

In this post, we showed how easy it is to use DreamFactory to import CSV files into a REST-accessible database. Check out the community forum to discuss or let us know what you think in the comments!

Related reading: The Reusable REST API Platform Strategy

Kevin McGahey is an accomplished solutions engineer and product lead with expertise in API generation, microservices, and legacy system modernization, as demonstrated by his successful track record of facilitating the modernization of legacy databases for numerous public sector organizations.

Blog

Blog