DreamFactory and NVIDIA are teaming up to offer 10 NVIDIA DGX Spark systems to enterprises looking to build AI solutions locally. Here's what you get:

- 1 petaflop of AI performance in a compact desktop system.

- Hardware capable of running models with up to 200 billion parameters.

- 40 hours of development support and a pilot implementation completed in 30 days or your money back.

- No need for cloud migration - everything runs on-premises, ensuring data security and compliance.

The DGX Spark, powered by NVIDIA's Grace Blackwell architecture, allows enterprises to run advanced AI models locally while DreamFactory simplifies secure data access by converting databases into REST APIs. This setup ensures AI models can query live enterprise data without compromising security or compliance.

If you're looking for a way to leverage enterprise AI without relying on external cloud providers, this initiative is worth exploring. Jump on a call with an engineer to learn more - DreamFactory.Com/Demo.


What is NVIDIA DGX Spark and Why It Matters for Enterprise AI

NVIDIA DGX Spark Technical Specifications and Performance Benchmarks

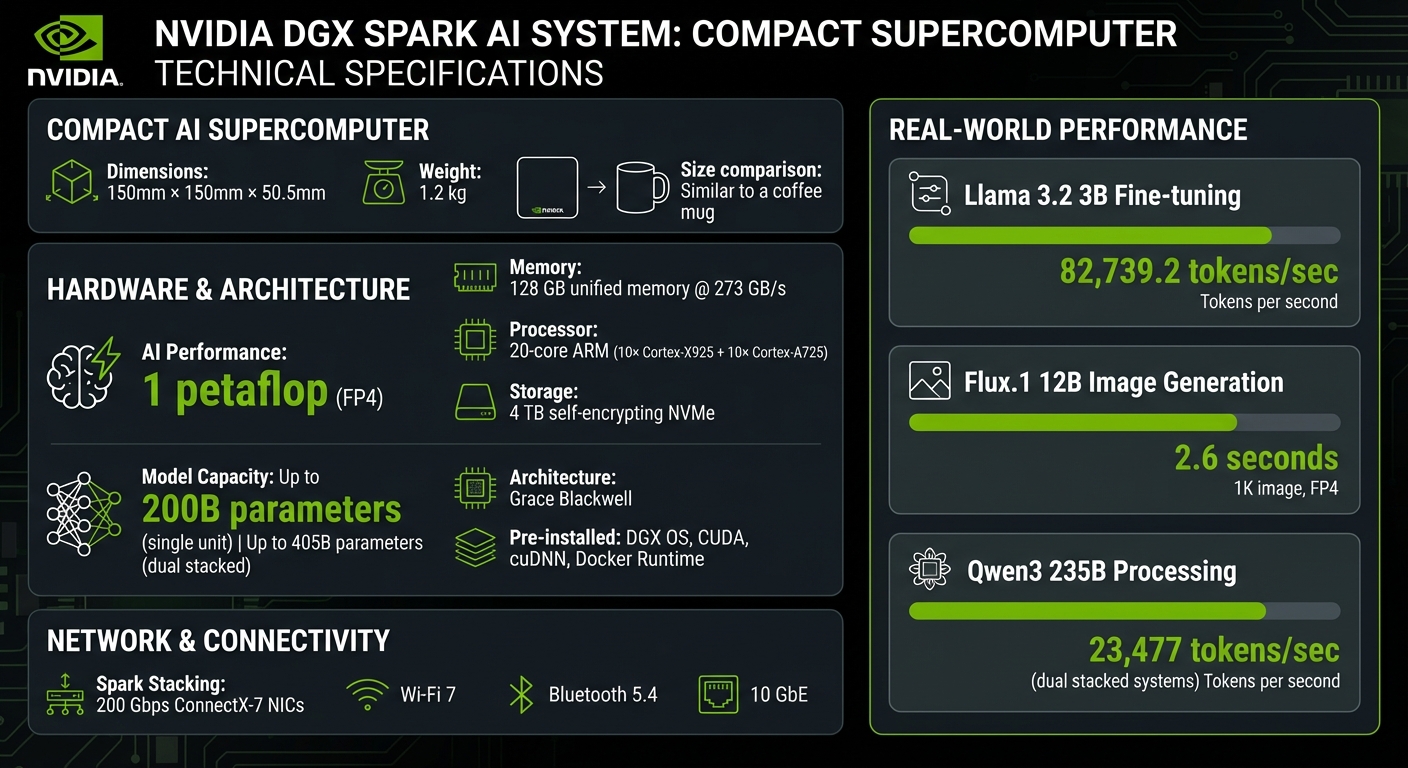

The NVIDIA DGX Spark is a compact AI supercomputer built on the Grace Blackwell architecture. It delivers an impressive 1 petaflop of FP4 AI performance, all packed into a remarkably small system - measuring just 150 mm × 150 mm × 50.5 mm and weighing 1.2 kg. Designed for running large language models locally, it’s a solution tailored for enterprises looking to bring AI capabilities in-house.

With 128 GB of unified memory operating at 273 GB/s and shared coherently by the CPU and GPU, the DGX Spark avoids copying data between separate CPU and GPU memory pools. It can handle models with up to 200 billion parameters on a single unit, and when two systems are connected via ConnectX-7, it supports models with up to 405 billion parameters.
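The parameter limits above follow from simple weight-memory arithmetic. The rough check below assumes 4-bit (FP4) weights at 0.5 bytes per parameter. It ignores the KV cache, activations, and operating-system overhead, which also consume memory in practice.

```latex
\begin{aligned}
M_{\text{weights}} &= N_{\text{params}} \times b_{\text{param}} \\
200\times10^{9} \times 0.5\ \text{B} &= 100\ \text{GB} < 128\ \text{GB} && \text{(one DGX Spark)} \\
405\times10^{9} \times 0.5\ \text{B} &= 202.5\ \text{GB} < 2 \times 128\ \text{GB} = 256\ \text{GB} && \text{(two stacked units)}
\end{aligned}
```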

DGX Spark Technical Specifications

This system is powered by a 20-core ARM processor, featuring 10 Cortex-X925 and 10 Cortex-A725 cores, and includes 4 TB of self-encrypting NVMe storage. It comes pre-configured with NVIDIA DGX OS, CUDA, cuDNN, and the NVIDIA Container Runtime for Docker, making it ready for enterprise AI tasks right out of the box.

In terms of performance, the DGX Spark demonstrates its capabilities with impressive benchmarks:

- Fine-tuning the Llama 3.2 3B model at 82,739.2 tokens/sec.

- Generating a 1K image in just 2.6 seconds using the Flux.1 12B model at FP4.

- Processing prompts at 23,477 tokens/sec with the Qwen3 235B model on two stacked systems.

"NVIDIA DGX Spark provides an alternative to cloud instances and data-center queues... you can work with large, compute intensive tasks locally, without moving to the cloud or data center."

– Allen Bourgoyne, Director of Product Marketing for Workstation Platforms, NVIDIA

Connectivity includes 200 Gbps ConnectX-7 NICs for Spark Stacking, plus 10 GbE, Wi-Fi 7, and Bluetooth 5.4 for integrating the system into existing enterprise networks and peripherals.

Why Enterprises Need On-Premises AI

The DGX Spark offers a practical solution for enterprises seeking to manage AI workloads securely and efficiently on-premises. Cloud-based APIs often come with challenges like data security risks, unpredictable expenses, and reliance on external providers. By keeping AI processing in-house, the DGX Spark addresses these issues head-on.

For businesses handling sensitive data, such as emails, proprietary datasets, or customer records, on-premises hardware simplifies compliance with privacy and regulatory requirements such as HIPAA, GDPR, and financial regulations. The built-in 4 TB self-encrypting drive also protects data at rest.

"For organizations that need full control over security, governance and intellectual property, NVIDIA DGX Spark brings petaflop-class AI performance to JetBrains customers."

– Kirill Skrygan, CEO, JetBrains

How DreamFactory Enables Secure On-Premises AI Development

The DGX Spark provides the hardware needed for local AI, but accessing enterprise data securely remains a major challenge. AI models require live data - such as customer records, financial transactions, or inventory details - to function effectively. However, granting them direct database access can lead to security and compliance risks.

DreamFactory solves this issue by acting as a secure bridge between your DGX Spark and enterprise databases. Instead of allowing AI agents to directly access SQL Server, Oracle, or MongoDB, they interact through an API layer managed by DreamFactory. This layer enforces your existing security policies, logs all activities, and ensures data stays within your infrastructure.

"DreamFactory becomes your on‑prem 'data layer' that connects your existing data to any AI, analytics, or front‑end system." – DreamFactory

DreamFactory supports air-gapped, hybrid, and private cloud setups, ensuring that your data remains local. Whether your DGX Spark operates in a fully isolated network or a hybrid environment, the platform enables secure AI access without violating data residency rules.

REST API Abstraction for AI Access

DreamFactory simplifies AI access by converting databases, file stores, and legacy systems into REST APIs. This abstraction removes the need for AI models to understand database schemas or execute raw SQL. Instead, they make API calls through a gateway that adheres to your security policies.

The platform integrates with over 30 data sources, including Snowflake, Databricks, Oracle, SQL Server, MongoDB, S3, SAP, and Salesforce. Each connection is automatically documented with OpenAPI/Swagger specifications, making it easier for AI agents to understand available data and how to request it.
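Every service ships with an OpenAPI description, so an agent can discover which calls it is allowed to make before making them. The minimal Python sketch below fetches a service's spec and flattens it into a list of operations. It makes several assumptions: the instance URL and API key are placeholders, `sales_db` is a hypothetical service name, and the spec is served at `/api/v2/api_docs/<service>`. Check that path against your DreamFactory version.

```python
import json
import urllib.request

# Placeholders (assumptions): your DreamFactory instance URL and API key.
BASE_URL = "http://spark-abcd.local/api/v2"
API_KEY = "YOUR_API_KEY"

# Keys in an OpenAPI path item that describe operations (others, like "parameters", do not).
HTTP_METHODS = {"get", "post", "put", "patch", "delete"}


def fetch_openapi_spec(service: str) -> dict:
    """Download the OpenAPI document generated for one service (path is an assumption)."""
    req = urllib.request.Request(
        f"{BASE_URL}/api_docs/{service}",
        headers={"X-DreamFactory-API-Key": API_KEY, "Accept": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)


def list_operations(spec: dict) -> list:
    """Flatten an OpenAPI 'paths' object into sorted 'METHOD /path' strings."""
    ops = []
    for path, item in spec.get("paths", {}).items():
        for key in item:
            if key.lower() in HTTP_METHODS:
                ops.append(f"{key.upper()} {path}")
    return sorted(ops)
```

For example, `list_operations(fetch_openapi_spec("sales_db"))` returns a sorted list of `METHOD /path` strings. That list can be given to the model as a catalogue of the calls it may make.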

For enterprises using DGX Spark, DreamFactory supports the Model Context Protocol (MCP), an open standard for connecting AI assistants to tools and data, which provides standardized and secure AI access to enterprise data.

"The platform's AI Data Gateway provides enterprise-grade security and governance for exposing backend data to AI tools and agents."

– Kevin McGahey, Product Lead at DreamFactory

This API abstraction is further secured with robust identity management features.

Identity Passthrough and Row-Level Security

A common issue in enterprise AI is the overuse of shared service accounts. When AI systems access databases using generic credentials, it's difficult to trace who accessed what data. DreamFactory addresses this by integrating with your existing authentication systems, such as Active Directory, LDAP, Okta, Auth0, SAML 2.0, and OpenID Connect. This ensures that each AI query is tied to a specific user's identity, enabling row-level security and detailed audit logs.
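Identity passthrough can be illustrated with a short sketch. The code logs a user in and attaches their session token to later requests, so DreamFactory can apply that user's role and record who made each request. It assumes DreamFactory's built-in email/password login at `/api/v2/user/session` and the `X-DreamFactory-API-Key` and `X-DreamFactory-Session-Token` headers. SSO, LDAP, or SAML logins follow different flows, and the URL and key shown are placeholders.

```python
import json
import urllib.request

# Placeholders (assumptions): your DreamFactory instance URL and API key.
BASE_URL = "http://spark-abcd.local/api/v2"
API_KEY = "YOUR_API_KEY"


def login_request(email: str, password: str) -> urllib.request.Request:
    """Build the POST that exchanges a user's credentials for a session token."""
    body = json.dumps({"email": email, "password": password}).encode("utf-8")
    return urllib.request.Request(
        f"{BASE_URL}/user/session",
        data=body,
        method="POST",
        headers={"X-DreamFactory-API-Key": API_KEY, "Content-Type": "application/json"},
    )


def login(email: str, password: str) -> str:
    """Send the login request and return the session token from the JSON reply."""
    with urllib.request.urlopen(login_request(email, password), timeout=10) as resp:
        return json.load(resp)["session_token"]


def auth_headers(session_token: str) -> dict:
    """Headers that tie each later data request to the signed-in user, not a shared account."""
    return {
        "X-DreamFactory-API-Key": API_KEY,
        "X-DreamFactory-Session-Token": session_token,
    }
```

The AI agent never holds database credentials. It only forwards the user's session token, so each logged request maps back to a real person.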

The platform enforces role-based access control (RBAC), limiting AI agents to specific tables, views, or stored procedures. Additionally, sensitive data - like Social Security numbers or email addresses - can be masked before reaching the AI model.
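The combined effect of RBAC and masking can be pictured as a per-role filter applied before any record reaches the model. This Python model is illustrative only: DreamFactory applies these rules server-side through its own role configuration. The `RolePolicy` class, the field names, and the keep-last-four-characters masking rule below are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RolePolicy:
    """Hypothetical per-role rule: which fields are visible, and which of those are masked."""
    allowed_fields: frozenset
    masked_fields: frozenset = frozenset()


def mask_value(value, visible: int = 4) -> str:
    """Replace all but the last `visible` characters with '*'."""
    text = str(value)
    if len(text) <= visible:
        return "*" * len(text)
    return "*" * (len(text) - visible) + text[-visible:]


def apply_policy(record: dict, policy: RolePolicy) -> dict:
    """Drop fields outside the role's scope and mask the sensitive ones it may see."""
    result = {}
    for key, value in record.items():
        if key not in policy.allowed_fields:
            continue  # outside the role's scope: never sent to the model
        result[key] = mask_value(value) if key in policy.masked_fields else value
    return result


# Hypothetical role for a customer-support agent.
SUPPORT_AGENT = RolePolicy(
    allowed_fields=frozenset({"customer_id", "name", "email", "ssn"}),
    masked_fields=frozenset({"email", "ssn"}),
)
```

The filter drops fields outside `allowed_fields` rather than masking them. As a result, a field the role was never granted, such as an account balance, does not reach the model at all.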

"The goal isn't to limit what agents can do, but to empower them securely, with the right boundaries in place." – Terence Bennett, CEO, DreamFactory

DreamFactory also supports compliance with regulations like GDPR, HIPAA, PIPEDA, and CCPA by logging every action and enforcing security policies on the server side.

| Feature | AI Governance Benefit |
|---|---|
| RBAC | Limits AI agents to specific data resources |
| Data Masking | Protects sensitive fields before AI processing |
| SSO Integration | Maintains existing security protocols |
| Logging/Audit | Tracks all AI-driven data requests for compliance |
| MCP Support | Standardizes AI interactions with enterprise data |

Air-Gapped and Hybrid Deployment Options

DreamFactory's flexible deployment options cater to industries with strict data residency requirements, such as finance, healthcare, and defense. For fully isolated environments, the platform supports air-gapped setups where both the DGX Spark and enterprise data remain disconnected from external networks.

Hybrid configurations are also supported, allowing some data to stay on-premises while other resources connect to private cloud systems. This flexibility enables organizations to modernize at their own pace without being forced into cloud-first solutions.

With the release of DreamFactory 7.4.1, the AI Data Gateway enforces zero-trust data access policies for large language models (LLMs). This ensures consistent governance whether models are running locally on the DGX Spark or accessing external services.

For operational monitoring, DreamFactory integrates with ELK (Elasticsearch, Logstash, Kibana) and supports Prometheus/Grafana for observability. These tools provide dashboards to track AI activity, security events, and API performance, giving enterprises full control over their production environments.

DreamFactory's 4.7/5 rating on G2 reflects its popularity in industries like finance, manufacturing, healthcare, and the public sector - fields where secure on-premises AI is a necessity.

Enterprise Use Cases for DGX Spark and DreamFactory

Modernizing Legacy Databases for AI

Finance teams often face delays in closing books when outdated Oracle or SQL Server systems limit direct AI access. DreamFactory's "wrap, don't rip" solution tackles this by layering a governed API over these legacy databases. This allows secure, real-time AI queries without the need for costly re-platforming.

"You can modernize on your schedule while AI delivers value now." – DreamFactory

With DGX Spark's local compute capabilities, AI models can instantly query these wrapped databases. This means finance teams can get answers in seconds, while customer support gains immediate insights - all without replicating sensitive data.

For modern data warehouses, the focus remains on ensuring both security and real-time access.

Secure AI Access to Data Warehouses

Modern data warehouses like Snowflake and Databricks hold vast datasets essential for AI analytics, but direct access to them can introduce compliance challenges. DreamFactory simplifies this by managing connection complexities and applying unified security policies. Its support for the Model Context Protocol (MCP) gives AI assistants a consistent interface, so teams can switch models without rebuilding integrations.

DreamFactory 7.4.1's AI Data Gateway, released in January 2025, lets AI agents running on DGX Spark query specific tables and columns within data warehouses. Every request is timestamped and logged to meet stringent audit requirements.
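Restricting a query to specific columns corresponds to DreamFactory's `_table` endpoint and its `fields`, `filter`, and `limit` query parameters. The Python sketch below builds such a request and reads the `resource` array from the JSON reply. The instance URL, the `warehouse` service, and the `invoices` table are placeholders.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

# Placeholder (assumption): replace with your DreamFactory instance URL.
BASE_URL = "http://spark-abcd.local/api/v2"


def table_query_url(service: str, table: str, fields: list,
                    filter_expr: Optional[str] = None, limit: int = 100) -> str:
    """Build a _table URL that returns only the named columns of one table."""
    params = {"fields": ",".join(fields), "limit": str(limit)}
    if filter_expr:
        params["filter"] = filter_expr  # e.g. "(status='open')"
    return f"{BASE_URL}/{service}/_table/{table}?{urllib.parse.urlencode(params)}"


def fetch_rows(url: str, api_key: str, session_token: str) -> list:
    """Run the query as the signed-in user and return the 'resource' array of rows."""
    req = urllib.request.Request(url, headers={
        "X-DreamFactory-API-Key": api_key,
        "X-DreamFactory-Session-Token": session_token,
    })
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)["resource"]
```

For example, `table_query_url("warehouse", "invoices", ["invoice_id", "amount"], "(status='open')", limit=10)` requests two columns of open invoices. Any column the role does not permit is still blocked by the gateway, whatever the client asks for.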

AI Development in Regulated Industries

In industries like healthcare, finance, and defense, strict regulatory compliance is non-negotiable. DreamFactory's secure on-premises framework ensures that the entire AI lifecycle - data ingestion, model inference, and output - remains within an organization's local network. When paired with DGX Spark, sensitive information stays securely on-premises, while DreamFactory enforces server-side security policies compliant with GDPR, HIPAA, PIPEDA, and CCPA standards.

For example, a compliance agent can be limited to read-only audit logs, while a customer support AI sees only redacted profiles. Because each agent's role exposes only what it needs, a compromised credential cannot be used to read entire databases. Public sector organizations have particularly benefited from this approach, with solutions engineer Kevin McGahey highlighting numerous successful legacy database modernization projects that upheld strict data sovereignty.

Getting Started with DGX Spark and DreamFactory

Step 1: Installation and Initial Setup

The DGX Spark comes pre-loaded with NVIDIA DGX OS, which includes essential tools like CUDA, cuDNN, Docker Engine (CE), and the NVIDIA Container Runtime. You can connect external devices directly or access the system remotely via SSH. During the initial setup, configure the operating system and create a local account.

Because Docker CE is already installed, DreamFactory can be deployed quickly on DGX OS using its Docker container method. To enable remote management, install and start OpenSSH:

```bash
sudo apt update
sudo apt install openssh-server
sudo systemctl enable --now ssh
```

At 150 mm × 150 mm × 50.5 mm, the DGX Spark fits easily on a standard desk.

Once the basic setup is complete, the next step is to secure your database connections.

Step 2: Configuring Secure Database Connections

Access the DreamFactory dashboard to link your enterprise data sources, such as MySQL, PostgreSQL, SQL Server, MongoDB, Oracle, and others. DreamFactory automatically generates fully documented REST APIs for each connection in just minutes, creating a secure interface between your AI models and databases. To ensure proper security, set up Role-Based Access Control (RBAC) and API keys, which restrict access to only the authorized data rows and tables.


You can use the device's mDNS hostname (e.g., spark-abcd.local) to connect DreamFactory with other local network resources, eliminating the need for static IP addresses. Additionally, all requests are timestamped and logged to help meet compliance standards.

After securing your data connections, you’re ready to deploy your local AI models.

Step 3: Deploying Local AI Models

Start by installing the Docker Model Runner plugin, which provides the `docker model` CLI:

```bash
sudo apt-get update
sudo apt-get install docker-model-plugin
```

Once it is installed, you can pull and run AI models locally, for example:

```bash
docker model pull ai/qwen3-coder
docker model run ai/qwen3-coder
```

Docker Model Runner serves these models through an OpenAI-compatible API at http://localhost:12434/engines/v1. The model does not connect to your databases itself. Instead, your AI application sends prompts to this endpoint and retrieves the enterprise data it needs from DreamFactory's REST API.

Next, configure your AI application or agent framework to use DreamFactory as its data source by pointing its data-access calls at DreamFactory's endpoint, giving the model real-time access to governed enterprise data. To monitor GPU and memory usage during model inference, open the DGX Dashboard at http://localhost:11000.
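An application can combine these pieces by fetching governed rows from DreamFactory and passing them to the local model as context, a simple retrieval-augmented pattern. The sketch below uses only the Python standard library. The model endpoint and `ai/qwen3-coder` name match the Docker Model Runner setup described above. The DreamFactory URL, API key, `crm` service, `accounts` table, and column names are hypothetical placeholders, and the session token is assumed to come from an earlier user login.

```python
import json
import urllib.request
from typing import Optional

DF_BASE = "http://spark-abcd.local/api/v2"   # placeholder DreamFactory URL (assumption)
DF_API_KEY = "YOUR_API_KEY"                  # placeholder API key (assumption)
MODEL_URL = "http://localhost:12434/engines/v1/chat/completions"
MODEL_NAME = "ai/qwen3-coder"


def call_json(url: str, headers: dict, payload: Optional[dict] = None) -> dict:
    """GET the URL, or POST the payload as JSON when one is given, and decode the reply."""
    data = json.dumps(payload).encode("utf-8") if payload is not None else None
    req = urllib.request.Request(url, data=data, headers=headers)
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.load(resp)


def build_messages(question: str, rows: list) -> list:
    """Ground the model: governed rows go in the system prompt, the question follows."""
    context = json.dumps(rows, indent=2)
    return [
        {"role": "system", "content": "Answer only from these records:\n" + context},
        {"role": "user", "content": question},
    ]


def ask(question: str, session_token: str) -> str:
    """Fetch rows as the signed-in user, then ask the local model about them."""
    rows = call_json(
        f"{DF_BASE}/crm/_table/accounts?fields=name,region,status&limit=20",
        {"X-DreamFactory-API-Key": DF_API_KEY,
         "X-DreamFactory-Session-Token": session_token},
    )["resource"]
    reply = call_json(
        MODEL_URL,
        {"Content-Type": "application/json"},
        {"model": MODEL_NAME, "messages": build_messages(question, rows)},
    )
    return reply["choices"][0]["message"]["content"]
```

The data call carries the user's session token, so the rows the model receives are already limited by that user's role.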

For remote access from another machine, such as a laptop, open an SSH tunnel:

```bash
ssh -N -L localhost:12435:localhost:12434 user@dgx-spark.local
```

While the tunnel is open, the laptop reaches the model endpoint at http://localhost:12435/engines/v1.

With these steps, your DGX Spark and DreamFactory setup will be ready to handle advanced AI workloads efficiently.

Conclusion

The partnership between NVIDIA DGX Spark and DreamFactory tackles one of the biggest hurdles in enterprise AI: secure and efficient data access. With DGX Spark's powerful local computing capabilities, enterprises can run large AI models right on-site, bypassing the delays of cloud latency or data-center queues. Meanwhile, DreamFactory enhances security by wrapping databases with a REST API layer that enforces role-based access control, data masking, and comprehensive audit logging. This ensures AI agents only access the data they’re authorized to use.

This combination breathes new life into legacy systems, turning them into flexible and compliant AI platforms. Data remains securely on-premises, while compliance teams gain peace of mind from detailed, timestamped logs that track exactly which AI agent accessed specific data and when. Swapping AI models, whether GPT, Claude, or Llama, also becomes straightforward, because data integrations do not need to be rebuilt from scratch.

Companies leveraging this approach have launched their first AI use cases in just days, rather than waiting months. It’s a way to modernize backend systems at your own pace while maintaining full control over data.

DreamFactory is offering an incredible opportunity: 10 DGX Spark units, 40 hours of development support, and a commitment to fully implement a pilot project within 30 days. If you're ready to embrace local AI while safeguarding your data and ensuring compliance, visit dreamfactory.com to learn more and join the initiative.

FAQs

What are the advantages of using DGX Spark for on-premises AI development?

Using DGX Spark for on-premises AI development brings several standout benefits. By running AI inference and model development on local hardware, it removes the need for cloud APIs. This means lower latency, reduced costs, and the ability to deliver real-time performance - a critical advantage for many enterprise applications.

With DGX Spark, teams can prototype and fine-tune AI models and run inference on models with up to 200 billion parameters, all on their own systems. This local setup provides complete control over the development process, allowing for greater flexibility and customization.

Another key benefit is data security. Sensitive data remains within your enterprise environment, which enhances privacy and supports regulatory compliance. Paired with DreamFactory, DGX Spark workloads get secure, governed access to a wide range of data sources, including SQL databases, legacy systems, and cloud applications. This makes it easier to scale AI projects without compromising security or governance.

By combining high-performance hardware with secure data management, DGX Spark helps accelerate project timelines and cut costs - making it a powerful tool for enterprise AI workflows.

How does DreamFactory keep enterprise data secure when connecting AI models?

DreamFactory strengthens enterprise data security by serving as a governed data layer, seamlessly linking AI models to existing databases without the need for data migration or duplication. This approach reduces exposure and ensures sensitive information stays under your control.

To bolster security further, DreamFactory incorporates strong authentication protocols such as OAuth, LDAP, Active Directory, and SAML 2.0. These measures ensure that only authorized users and systems can access your data. By combining secure API management with strict access controls, DreamFactory enables organizations to integrate AI solutions confidently while safeguarding data integrity and adhering to compliance requirements.

Which types of businesses will benefit the most from the DGX Spark and DreamFactory collaboration?

Businesses that stand to gain the most from the collaboration between DGX Spark and DreamFactory are those dealing with sensitive, complex, and distributed data while striving to adopt high-performance, local AI solutions. Key sectors include finance, healthcare, manufacturing, and government - industries where data security, governance, and low-latency AI processing are non-negotiable.

The DGX Spark delivers robust on-premises AI capabilities, making it possible to perform tasks like real-time inference and model fine-tuning without depending on cloud APIs. When combined with DreamFactory's advanced tools for data connection and governance, businesses can simplify workflows, improve data accessibility, and deploy AI solutions securely and effectively. This partnership is particularly beneficial for organizations managing regulated data or requiring fast, dependable AI responses while retaining full control over their data systems.

Kevin Hood is an accomplished solutions engineer specializing in data analytics and AI, enterprise data governance, data integration, and API-led initiatives.
