When integrating with Snowflake, you have four main options, each suited to different needs:

- DreamFactory: Automates secure REST API creation for fast, scalable data integration.

- Snowflake Data Sharing: Enables real-time, secure data sharing directly within Snowflake.

- Snowflake APIs (REST API & Snowpark): Support custom application development and advanced data science workflows.

- ETL with Custom APIs: Provides full control for complex, legacy system integrations but requires significant resources.

Quick Comparison

| Method | Best For | Pros | Cons |
|---|---|---|---|
| DreamFactory | Rapid API deployment | Automated setup, built-in security | Licensing fees, learning curve |
| Data Sharing | Snowflake-to-Snowflake sharing | Real-time, no maintenance | Snowflake-only, no external apps |
| Snowflake REST API | Custom applications | Direct control, platform integration | Complex implementation, requires development expertise |
| Snowpark | Data science workflows | Optimized for ML/AI, Python support | Requires Python/data science skills |
| ETL + Custom APIs | Complex transformations | Full customization, legacy support | High cost, longer timelines |

Each method has trade-offs. Choose based on your team's expertise, integration goals, and available resources. For quick implementation, DreamFactory and Data Sharing are the best fits. For advanced workflows, explore Snowflake’s APIs or ETL solutions.


DreamFactory: Automating API Integration with Snowflake

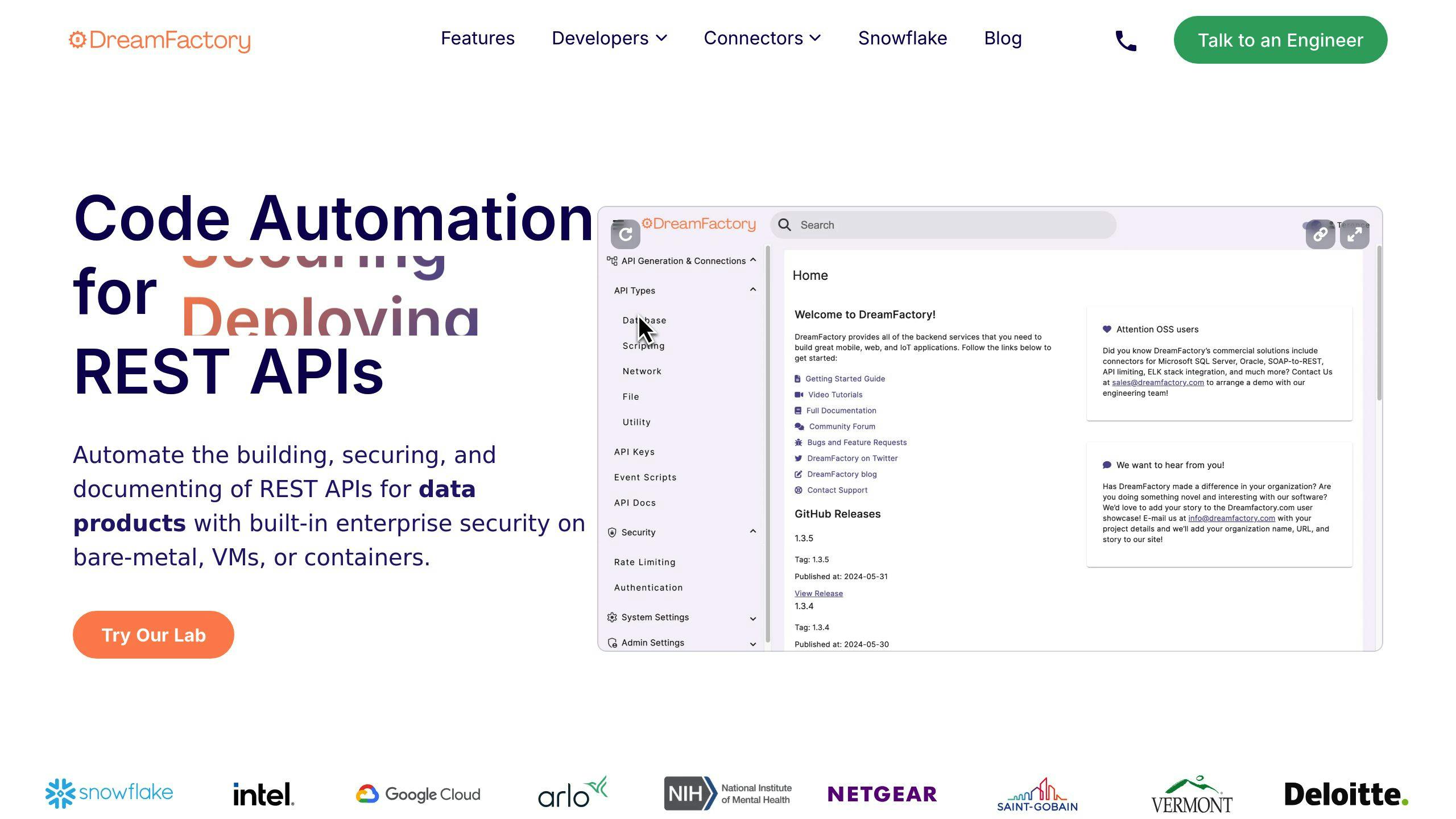

DreamFactory simplifies how businesses work with Snowflake by automating the creation of secure REST APIs. As a Snowflake Select Partner, it offers enterprise-level API automation tools tailored for the Data Cloud.

Key Features

- Instant API Creation: Automatically generates REST APIs for Snowflake tables, views, and stored procedures.

- Enhanced Security: Includes authentication with role-based access control (RBAC) and OAuth.

- Live Data Access: Executes complex queries against live Snowflake data in real time.

- Request Caching: Cuts down on Snowflake compute costs by reducing direct database queries.

- Cross-System Integration: Easily connects with a variety of front-end and back-end platforms.
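To show what a generated endpoint looks like from the client side, the sketch below uses Python's standard library to build a read request without sending it. It assumes DreamFactory's v2 URL layout, `/api/v2/{service}/_table/{table}`, with `filter` and `limit` query parameters and an `X-DreamFactory-API-Key` header. The host `df.example.com`, the service name `snowflake`, the table `ORDERS`, and the key value are hypothetical placeholders. Check the paths against your own DreamFactory instance before relying on them.

```python
from urllib.parse import quote, urlencode
from urllib.request import Request


def build_table_request(base_url, service, table, api_key, filter_expr=None, limit=None):
    """Build (but do not send) a GET request for a DreamFactory table endpoint.

    Assumes DreamFactory's v2 layout: {base_url}/api/v2/{service}/_table/{table}.
    """
    url = f"{base_url.rstrip('/')}/api/v2/{quote(service)}/_table/{quote(table)}"
    params = {}
    if filter_expr:
        params["filter"] = filter_expr  # SQL-like filter evaluated server-side
    if limit is not None:
        params["limit"] = str(limit)  # cap the number of rows returned
    if params:
        url += "?" + urlencode(params)
    headers = {
        "X-DreamFactory-API-Key": api_key,  # app-level key issued by DreamFactory
        "Accept": "application/json",
    }
    return Request(url, headers=headers, method="GET")


# Hypothetical host, service, table, and key.
req = build_table_request(
    "https://df.example.com", "snowflake", "ORDERS", "YOUR_API_KEY",
    filter_expr="REGION = 'EMEA'", limit=10,
)
print(req.get_method(), req.full_url)
```

Because the request is only constructed, you can inspect the URL and headers before pointing `urllib.request.urlopen` at a real instance.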

Pros and Cons

| Pros | Cons |
|---|---|
| Quick API setup | Requires time to learn the platform |
| Built-in security features | Licensing fees can be a factor |
| Flexible with custom scripting | Initial configuration can take time |
| Simplifies third-party integration | Ongoing maintenance is necessary |

Best Use Cases

DreamFactory's integration with Snowflake is especially useful for situations where speed and scalability are top priorities:

- Enterprise Data Connections: Perfect for businesses needing to link multiple apps to Snowflake data.

- Modernizing APIs: Ideal for updating outdated systems with REST APIs.

- Fast Development: Great for teams needing to deploy APIs quickly.

- High-Security Applications: Suitable for projects requiring strict data protection and compliance.

"By bringing DreamFactory's API generation capabilities to the Data Cloud, we're making it easier for our customers to overcome barriers to innovation and accelerate their digital transformation journey" [2].

DreamFactory also supports customization through multiple scripting languages while preserving the benefits of automation. Its compliance features make it a strong option for industries with strict regulations.

Although DreamFactory is a standout for API automation, Snowflake-native solutions like Data Sharing offer other ways to enable smooth collaboration.

Snowflake Data Sharing: Direct Data Access Within Snowflake

Snowflake's Data Sharing feature offers a straightforward way for organizations operating entirely within its ecosystem to share data securely. This built-in functionality removes the need for extra infrastructure, making it a seamless option for Snowflake users.

Main Features

With Snowflake Data Sharing, users get real-time, secure access to data without creating duplicates, which helps cut down on storage costs. Its governance tools include role-based access and audit trails, making it especially appealing to industries like finance and healthcare that require strict compliance.
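On the provider side, a share comes down to a few SQL statements. The sketch below generates them from Python so the order is easy to follow:

1. Create the share.
2. Grant usage on the database and schema.
3. Grant `SELECT` on each table.
4. Add the consumer accounts.

The consumer then mounts the share as a read-only database. All object and account names (`sales_share`, `SALES_DB`, `myorg.partner_acct`, and so on) are hypothetical. Names are interpolated without quoting, so the sketch assumes trusted identifiers. Running the provider statements typically requires ACCOUNTADMIN or a role granted the CREATE SHARE privilege.

```python
def provider_share_statements(share, database, schema, tables, consumer_accounts):
    """Return provider-side SQL that exposes tables to other accounts via a share.

    Identifiers are interpolated as-is, so they must come from trusted input.
    """
    statements = [
        f"CREATE SHARE {share};",
        f"GRANT USAGE ON DATABASE {database} TO SHARE {share};",
        f"GRANT USAGE ON SCHEMA {database}.{schema} TO SHARE {share};",
    ]
    # Read-only access per table; consumers query the provider's data in place, with no copy.
    statements += [
        f"GRANT SELECT ON TABLE {database}.{schema}.{table} TO SHARE {share};"
        for table in tables
    ]
    statements.append(f"ALTER SHARE {share} ADD ACCOUNTS = {', '.join(consumer_accounts)};")
    return statements


def consumer_mount_statement(local_db, provider_account, share):
    """Return consumer-side SQL that mounts a share as a read-only database."""
    return f"CREATE DATABASE {local_db} FROM SHARE {provider_account}.{share};"


for sql in provider_share_statements(
    "sales_share", "SALES_DB", "PUBLIC", ["ORDERS"], ["myorg.partner_acct"]
):
    print(sql)
print(consumer_mount_statement("PARTNER_SALES", "myorg.provider_acct", "sales_share"))
```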

Pros and Cons

| Advantages | Disadvantages |
|---|---|
| No maintenance required | Only works within Snowflake's ecosystem |
| Real-time access to data | All participants must have Snowflake accounts |
| Integrated security and governance | Lacks support for external application integration |
| Reduces costs by avoiding data duplication | Limited transformation options |
| Easy to manage | Unsuitable for complex data workflows |

Ideal Use Cases

Snowflake Data Sharing shines in scenarios where organizations need secure and direct data access within the Snowflake platform:

- Internal Data Distribution: Share data securely across departments without making copies.

- Partner Collaboration: Simplifies data sharing when both parties are on Snowflake, removing the need for complicated pipelines.

- Regulatory Compliance: Features like role-based access and audit trails help meet compliance standards in tightly regulated sectors.

Although Data Sharing is well suited to collaboration within Snowflake, APIs or other methods may be a better fit for integrations outside the platform.

Snowflake APIs: Comparing REST API and Snowpark

Snowflake offers two powerful APIs, each designed to address specific technical and business requirements: the REST API and Snowpark.

Main Features

The REST API provides precise control over Snowflake operations, making it a great choice for building custom, enterprise-level applications.

On the other hand, Snowpark integrates directly with Snowflake's processing and storage capabilities, making it perfect for advanced data transformations, data science workflows, and machine learning tasks.
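On the REST side, Snowflake's SQL API accepts statements as JSON posted to `/api/v2/statements` on the account URL. The sketch below only constructs that request, using the standard library. It assumes you already have a key-pair JWT or OAuth token; generating one is out of scope. The account identifier, warehouse, database, schema, and query are hypothetical.

```python
import json
from urllib.request import Request


def build_sql_api_request(account, token, statement, warehouse, database, schema,
                          role=None, timeout=60, token_type="KEYPAIR_JWT"):
    """Build (but do not send) a POST to Snowflake's SQL API.

    `token` must already be a valid key-pair JWT or OAuth token; `token_type`
    should be "KEYPAIR_JWT" or "OAUTH" to match it.
    """
    url = f"https://{account}.snowflakecomputing.com/api/v2/statements"
    body = {
        "statement": statement,
        "timeout": timeout,  # seconds before Snowflake cancels the statement
        "warehouse": warehouse,
        "database": database,
        "schema": schema,
    }
    if role:
        body["role"] = role
    headers = {
        "Authorization": f"Bearer {token}",
        "X-Snowflake-Authorization-Token-Type": token_type,
        "Content-Type": "application/json",
        "Accept": "application/json",
    }
    return Request(url, data=json.dumps(body).encode("utf-8"), headers=headers, method="POST")


# Hypothetical account identifier, objects, and token.
req = build_sql_api_request(
    "myorg-myaccount", "<jwt>", "SELECT region, SUM(amount) FROM orders GROUP BY region",
    warehouse="ANALYTICS_WH", database="SALES_DB", schema="PUBLIC",
)
print(req.get_method(), req.full_url)
```

This level of control is why the REST API suits custom applications. Every request carries its own warehouse, role, and timeout, so the calling application decides exactly how each query runs.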

Pros and Cons

| Feature | Snowflake REST API | Snowpark (Python API) |
|---|---|---|
| Use Cases | Custom applications, enterprise integration | Data science and analytics workflows |
| Ease of Use | High complexity for custom development | Challenging for non-Python users |
| Performance | Standard API performance | Optimized for data processing |
| Resource Needs | Requires a dedicated development team | Requires data science expertise |

Ideal Use Cases

The REST API is best suited for:

- Custom applications requiring detailed control over Snowflake

- Integration with existing enterprise systems

- Teams experienced in API development

- Scenarios needing broad platform compatibility

Snowpark, however, is ideal for:

- Data science and analytics tasks

- Deploying machine learning models

- Handling complex data transformations

- Python-based data workflows

For instance, Capital One successfully used Snowpark to embed machine learning models into their data pipeline. Meanwhile, their customer-facing applications leveraged the REST API for real-time data access.

"The choice between Snowflake's REST API and Snowpark often depends on the specific requirements of the project. For example, data science teams may benefit from Snowpark's advanced data processing capabilities, while custom application developers may prefer the flexibility of Snowflake's REST API."

Both APIs are designed to complement Snowflake's broader ecosystem. The REST API focuses on general-purpose integrations, while Snowpark is tailored for data science. Depending on your organization's needs, these APIs can also work alongside other tools like DreamFactory or ETL workflows to enhance integration capabilities.

ETL and Custom APIs: Tailored Integration for Snowflake

Main Features

Combining ETL processes with custom-built APIs gives businesses full control over how they integrate data with Snowflake.

The ETL process handles tasks like extracting, transforming, and cleaning data. Meanwhile, the custom API layer offers:

- Fine-tuned control over how data is accessed

- Implementation of specific business rules

- Security protocols tailored to unique requirements

- Compatibility with enterprise systems
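Here is a minimal sketch of that division of labor, using only in-memory Python data:

- `transform` stands in for the ETL cleaning step.
- `api_get_orders` stands in for the custom API layer. It applies a hypothetical business rule: only the `analyst` role sees customer emails.

In a real pipeline, the cleaned rows would be loaded into Snowflake and the API would query them. The field names, roles, and rule are assumptions for illustration.

```python
RAW_ROWS = [  # hypothetical rows as extracted from a source system
    {"order_id": "1", "region": " emea ", "amount": "120.5", "customer_email": " A@Example.com "},
    {"order_id": "2", "region": "amer", "amount": "", "customer_email": "b@example.com"},
    {"order_id": "3", "region": "EMEA", "amount": "80", "customer_email": "c@example.com"},
]

# Hypothetical business rule enforced by the API layer: field visibility per role.
VISIBLE_FIELDS = {
    "analyst": {"order_id", "region", "amount", "customer_email"},
    "partner": {"order_id", "region", "amount"},
}


def transform(rows):
    """ETL transform step: cast types, normalize text, drop rows with no amount."""
    cleaned = []
    for row in rows:
        if not row.get("amount"):
            continue  # incomplete record; a real pipeline might quarantine it instead
        cleaned.append({
            "order_id": int(row["order_id"]),
            "region": row["region"].strip().upper(),
            "amount": round(float(row["amount"]), 2),
            "customer_email": row["customer_email"].strip().lower(),
        })
    return cleaned


def api_get_orders(rows, role, region=None):
    """Custom API step: enforce role-based field access and an optional region filter."""
    if role not in VISIBLE_FIELDS:
        raise PermissionError(f"unknown role: {role}")
    allowed = VISIBLE_FIELDS[role]
    return [
        {key: value for key, value in row.items() if key in allowed}
        for row in rows
        if region is None or row["region"] == region
    ]


orders = transform(RAW_ROWS)  # in practice these rows would be loaded into Snowflake
print(api_get_orders(orders, "partner", region="EMEA"))
```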

Pros and Cons

| Aspect | Advantages | Disadvantages |
|---|---|---|
| Customization | Can be tailored to meet specific needs | High development costs |
| Integration | Works well with legacy systems | Longer deployment timelines |
| Maintenance | Full control of the codebase | Requires a dedicated development team |
| Scalability | Designed for specific workloads | Potential for development bottlenecks |

Ideal Use Cases

This integration method is ideal for companies dealing with complex legacy systems or unique data processing needs. It's especially valuable in industries like finance and healthcare, where strict compliance and intricate transformations are critical.

Before adopting this approach, organizations should evaluate:

- Their technical expertise and in-house capabilities

- The resources needed for ongoing maintenance

- How they’ll manage data governance and security

To strike a balance, businesses can use modern ETL tools like Apache NiFi, Talend, or Informatica alongside custom API development. This approach simplifies development while keeping the flexibility needed for specialized integration tasks [1][2].

While tools like DreamFactory offer automation and faster deployment, building custom APIs paired with ETL processes provides unmatched flexibility. However, this method involves higher development effort and costs, making automated or native solutions better suited for quicker implementations.

Comparison of Snowflake Integration Methods

Choosing the right integration method depends on understanding how each option stacks up across critical factors. The table below breaks down the key strengths, limitations, and requirements to help you decide which method fits your needs.

| Integration Method | Key Strengths | Primary Limitations | Best For | Technical Requirements |
|---|---|---|---|---|
| DreamFactory | Automated API generation | Platform learning curve | Rapid API deployment | Basic REST API knowledge |
| Snowflake Data Sharing | Zero maintenance | Snowflake-only | Inter-organizational sharing | Snowflake account |
| Snowflake REST API | Direct platform access | Complex implementation | Custom applications | REST API expertise |
| Snowpark (Python API) | Advanced processing | Python expertise needed | Data science workflows | Python skills |
| ETL with Custom APIs | Full customization | High costs | Complex transformations | Development team |

Cost Considerations

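Compute costs are a major factor, especially when the same queries run frequently, because queries keep a virtual warehouse running and Snowflake bills for warehouse time. DreamFactory's request caching answers repeated requests without querying Snowflake again. For read-heavy workloads, that can make it more cost-efficient than direct API integrations.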
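The cost argument rests on a simple mechanism: identical requests within a time window are answered from memory instead of re-running a query on a warehouse. The generic time-to-live (TTL) cache below sketches that idea. It is not DreamFactory's implementation, and `run_query` is a hypothetical stand-in for whatever actually executes SQL against Snowflake.

```python
import time


class TTLQueryCache:
    """Answer repeated identical queries from memory until their entry expires.

    A generic illustration of request caching, not DreamFactory's implementation.
    """

    def __init__(self, run_query, ttl_seconds=300, clock=time.monotonic):
        self._run_query = run_query  # hypothetical callable that executes SQL on Snowflake
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}  # sql text -> (expires_at, result)
        self.backend_queries = 0  # how many times the warehouse was actually hit

    def query(self, sql):
        now = self._clock()
        entry = self._entries.get(sql)
        if entry is not None and entry[0] > now:
            return entry[1]  # fresh cache hit: no warehouse compute used
        result = self._run_query(sql)
        self.backend_queries += 1
        self._entries[sql] = (now + self._ttl, result)
        return result


# Simulated clock and backend so the example runs without Snowflake.
fake_now = [0.0]


def fake_backend(sql):
    return [("EMEA", 200.5)]


cache = TTLQueryCache(fake_backend, ttl_seconds=60, clock=lambda: fake_now[0])
sql = "SELECT region, SUM(amount) FROM orders GROUP BY region"
cache.query(sql)  # miss: runs the query
cache.query(sql)  # hit: served from memory
fake_now[0] = 61.0
cache.query(sql)  # expired: runs the query again
print(cache.backend_queries)  # 2
```

The TTL is the main design choice. Longer windows save more compute but serve staler data, since a cached result can be up to `ttl_seconds` old. Short TTLs therefore suit live dashboards, and longer ones suit slowly changing reference data.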

Security Implementation

Each method offers distinct security features tailored to different needs:

- DreamFactory combines authentication with access control for robust security.

- Snowflake Data Sharing provides built-in governance tools for seamless data sharing.

- Custom solutions require manual security setups, which can be time-consuming.

Development Effort

The level of development effort required varies significantly:

- DreamFactory and Snowflake Data Sharing are easier to implement, offering faster setup times.

- Snowflake REST API and Snowpark require skilled development teams but are less demanding than custom ETL solutions.

- Custom ETL solutions involve the most effort, needing significant time and resources to implement.

Conclusion: Selecting the Best Integration Option

Picking the right Snowflake integration method depends on your organization's specific needs and resources. Each method comes with its own strengths and trade-offs, as shown in the comparison table.

Technical Resources and Expertise

Your team's skills play a big role in the decision. If your team lacks development resources, DreamFactory simplifies the process by automating API creation. On the other hand, teams with strong technical expertise might prefer using Snowflake’s native REST API or Snowpark for more control over their integration setup.

Cost and Performance Considerations

Balancing costs with performance is key to long-term success. Beyond cost, consider how complex your integration is and how quickly it must be delivered.

Integration Needs and Timeline

The nature of your project will guide your choice:

- Quick Setup: For fast implementation, tools like DreamFactory or Snowflake Data Sharing are ideal.

- Complex Projects: If your integration involves detailed data transformations or working with legacy systems, traditional ETL paired with custom APIs may be required, even if it’s more resource-intensive.

- Real-Time Access: For immediate data availability, Snowflake Data Sharing is the fastest route, though it’s limited to Snowflake-to-Snowflake scenarios.

Security and Compliance

Security is often a deciding factor, especially in regulated industries. DreamFactory offers features like role-based access and API key authentication for secure integrations. If internal data sharing is your focus, Snowflake’s native sharing tools provide strong governance without extra security layers.

Future Scalability

Think about your organization’s growth. Snowflake’s native APIs and Snowpark are excellent choices for building scalable workflows in areas like data science and machine learning.

Practical Selection Framework

When making your decision, focus on these priorities:

- Your team’s technical skills and current integration goals

- Cost and performance requirements

- Security and compliance needs

You can start with simpler options like DreamFactory or Data Sharing and move to more complex solutions as your needs evolve.

FAQs

What is the difference between API and ETL integration?

APIs are designed for real-time data exchange and direct interactions between applications, making them perfect for live dashboards or mobile apps. On the other hand, ETL processes are built for large-scale data tasks, such as merging data from multiple sources, running batch jobs, or consolidating historical data.

Tools like DreamFactory can lower the compute cost of real-time API access by caching repeated requests. ETL workflows, by contrast, excel at complex, large-scale data transformations.

When to use API vs ETL?

Choosing between API and ETL depends on your specific needs and objectives:

| Integration Method | Best For | Example Scenarios |
|---|---|---|
| API Integration | Real-time data exchange | Customer-facing apps |
| ETL Integration | Batch data processing | Financial reports |

Here are some factors to help you decide:

- Data Volume: APIs work well for smaller, frequent requests. ETL is better for managing large datasets.

- Processing Needs: Use ETL for advanced data transformations, while APIs handle straightforward data access.

- Time Sensitivity: If real-time data is crucial, go with APIs. For scheduled or periodic updates, ETL is the way to go.

- Team Resources: Consider your team's technical skills and the resources available for development.
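The first three factors can be condensed into a rough heuristic, sketched below with hypothetical boolean inputs. It deliberately leaves out team resources, which need human judgment. When real-time access and heavy batch work coexist, it suggests a hybrid, since APIs and ETL can run side by side.

```python
def suggest_integration(real_time: bool, large_volume: bool, complex_transforms: bool) -> str:
    """Rough heuristic based on time sensitivity, data volume, and processing needs.

    Team resources are intentionally left out; weigh them separately.
    """
    if real_time and (large_volume or complex_transforms):
        return "hybrid: ETL for bulk loads and transformations, API for live access"
    if real_time:
        return "API"  # small, frequent, time-sensitive requests
    return "ETL"  # scheduled or periodic updates


print(suggest_integration(real_time=True, large_volume=False, complex_transforms=False))
print(suggest_integration(real_time=False, large_volume=True, complex_transforms=True))
```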

Knowing when to use APIs or ETL is essential for optimizing Snowflake integrations, as each serves different purposes in meeting business goals.

Terence Bennett, CEO of DreamFactory, has a wealth of experience in government IT systems and Google Cloud. His impressive background includes being a former U.S. Navy Intelligence Officer and a former member of Google's Red Team. Prior to becoming CEO, he served as COO at DreamFactory Software.
