In the world of APIs and microservices, choosing the right tools for data compression and serialization can drastically improve performance, reduce bandwidth costs, and streamline communication. Here's a quick breakdown of five popular tools and their strengths:

- DreamFactory: Simplifies API creation with automated JSON serialization, strong security features, and support for over 20 databases.

- gRPC with Protocol Buffers: Offers high-speed binary serialization, smaller payloads, and built-in compression, ideal for real-time systems.

- Apache Avro: Combines compact binary serialization with schema evolution, perfect for big data pipelines.

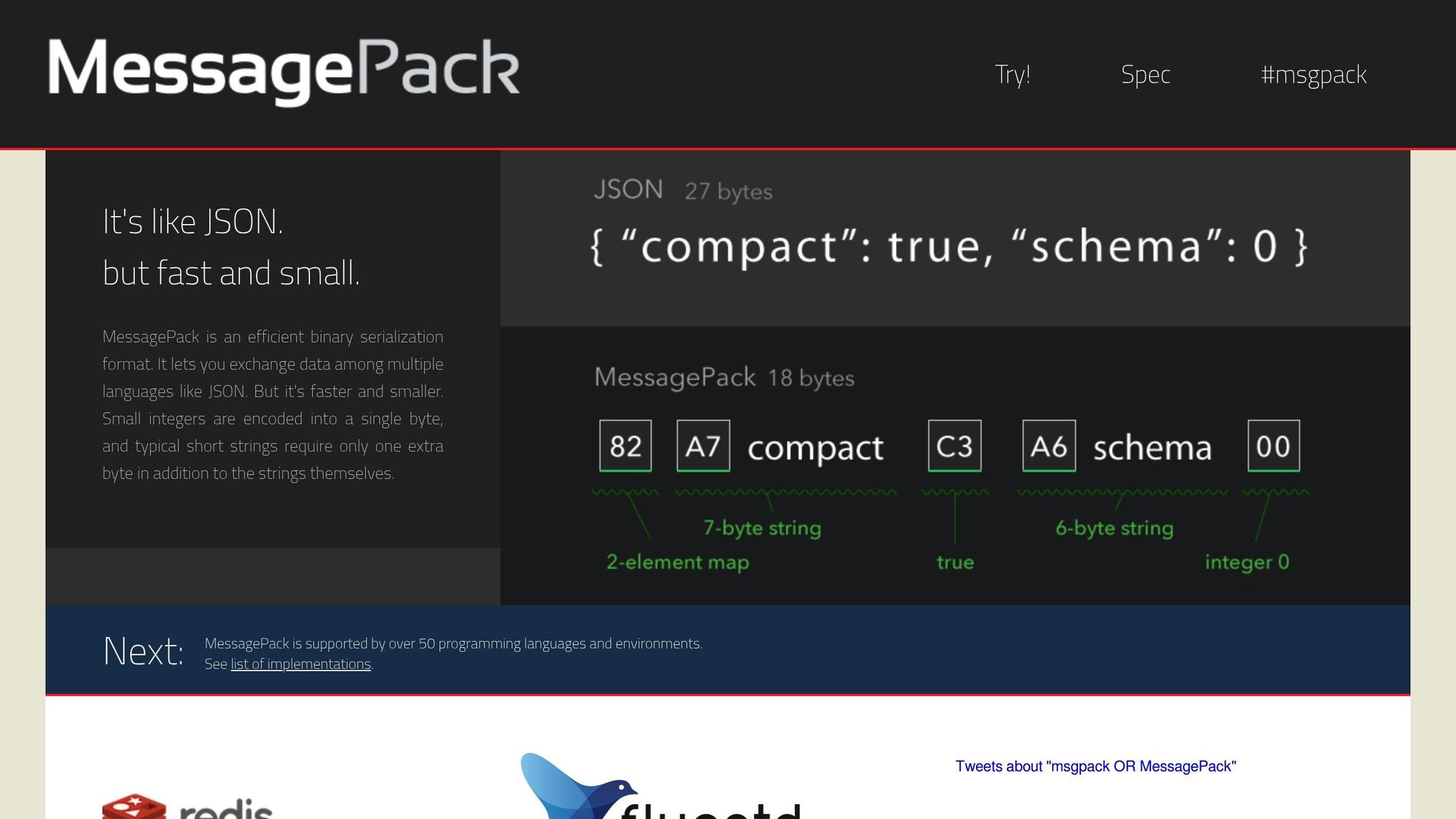

- MessagePack: Provides fast, lightweight binary serialization, great for resource-constrained environments.

- JSON with GZIP/Brotli Compression: Widely supported, easy to debug, and enhanced with compression for smaller payloads.

Each option caters to different needs, from rapid deployment to high performance or compatibility. Below is a quick comparison of their key metrics:

| Tool | Serialization Speed | Compression Ratio | Integration Complexity | Security Features | Best Use Case |
|---|---|---|---|---|---|
| DreamFactory | Medium | Depends on format | Low | RBAC, API keys, OAuth | Fast database API creation |
| gRPC + Protobuf | Fast | High | Medium | TLS, OAuth, API keys | Real-time microservices |
| Apache Avro | Moderate | High | High | Schema validation | Big data pipelines |
| MessagePack | Fast | Medium | Medium | External implementation | Performance-critical APIs |
| JSON + GZIP/Brotli | Slow | High | Low | HTTPS, OAuth | Public APIs, web services |

Want speed? Choose gRPC with Protocol Buffers. Need quick deployment? Go with DreamFactory. For big data, Apache Avro excels. When compatibility matters most, JSON with compression is the go-to. Test these tools with your data to find the best fit for your needs.

1. DreamFactory

DreamFactory is a Data AI Gateway platform that simplifies the creation of secure REST APIs from databases. It combines automation with built-in management and security features to streamline API development.

Serialization Format

DreamFactory relies on JSON serialization for its auto-generated REST APIs. It uses schema mapping to handle data type conversions, ensuring consistent JSON responses across its 20+ supported connectors, including Snowflake, SQL Server, and MongoDB.

Instead of writing custom serialization logic for each database type, DreamFactory automates the process. For example, it can convert SQL Server date/time fields or MongoDB timestamps into standardized JSON formats. This automation not only simplifies the process but also accelerates deployment - a key advantage discussed in the next section.
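DreamFactory's internal mapping code isn't described here, but the kind of type normalization it automates can be sketched in a few lines. The following Python example is a hypothetical illustration, not DreamFactory's implementation: the names `to_json_value` and `serialize_row`, and the choices to treat naive timestamps as UTC and to emit decimals as strings, are assumptions made for the sketch.

```python
import base64
import json
from datetime import date, datetime, timezone
from decimal import Decimal
from uuid import UUID


def to_json_value(value):
    """Map database-native Python values to JSON-friendly equivalents."""
    if isinstance(value, datetime):  # check before date: datetime subclasses date
        # Assumption: naive timestamps are UTC; emit ISO 8601 with an offset.
        if value.tzinfo is None:
            value = value.replace(tzinfo=timezone.utc)
        return value.isoformat()
    if isinstance(value, date):
        return value.isoformat()
    if isinstance(value, Decimal):
        # Strings keep exact precision for NUMERIC/money columns.
        return str(value)
    if isinstance(value, UUID):
        return str(value)
    if isinstance(value, (bytes, bytearray)):
        # Binary columns become Base64 text.
        return base64.b64encode(value).decode("ascii")
    raise TypeError(f"unsupported type: {type(value).__name__}")


def serialize_row(row: dict) -> str:
    # json.dumps calls `default` only for objects it cannot encode natively.
    return json.dumps(row, default=to_json_value, separators=(",", ":"))
```

For example, a row such as `{"id": 7, "created": datetime(2024, 1, 15, 9, 30), "price": Decimal("19.99")}` serializes to `{"id":7,"created":"2024-01-15T09:30:00+00:00","price":"19.99"}`, so every client sees the same date and number conventions regardless of the source database.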

Performance

While JSON serialization isn’t as fast as binary formats, DreamFactory prioritizes speed in deployment by reducing development overhead. The platform can generate REST APIs in minutes, significantly cutting down the time needed to create data-driven applications.

The real performance gain lies in development efficiency. Instead of dedicating weeks to crafting custom APIs with optimized serialization, teams can deploy fully functional APIs almost immediately. Although this approach may not achieve microsecond-level optimizations, it balances speed, security, and overall efficiency to meet broader API management needs.

Integration and Ecosystem

DreamFactory supports seamless integration across various environments, thanks to its compatibility with more than 20 database connectors and its flexible deployment options, including cloud, on-premises, and hybrid setups. It also auto-generates Swagger API documentation and enables server-side scripting in languages such as PHP, Python, and Node.js.

This scripting capability allows developers to introduce custom functionality. For instance, while DreamFactory doesn’t natively support serialization formats like MessagePack or specific compression methods, developers can implement such features through server-side scripts. However, this might add some extra workload compared to having native support.

Security Features

DreamFactory includes robust security measures to safeguard data during serialization and transmission. These features include Role-Based Access Control (RBAC) for fine-grained permissions, API key management for authentication, and OAuth support for secure authorization.

"Every AI action is bounded by your policies and leaves a trail. Visibility without another shadow system."

The platform ensures secure, on-premises access to live data without requiring data duplication, minimizing security risks and maintaining compliance audit trails. Governance policies are consistently enforced with every request, ensuring that security rules are applied across all API interactions.

For businesses handling sensitive information, these security features are especially critical. DreamFactory supports GDPR and HIPAA compliance, making it a strong choice for industries where data protection is a top priority, even if it means sacrificing some serialization speed.

2. gRPC with Protocol Buffers

Where DreamFactory optimizes for deployment speed, gRPC with Protocol Buffers optimizes for runtime speed and efficiency. gRPC is an open-source, high-performance RPC framework originally developed at Google, and it uses Protocol Buffers (protobuf) for serialization. This combination is a strong match for microservices and real-time applications, making gRPC a standout choice for systems that demand speed and low latency.

Serialization Format

Protocol Buffers use a compact binary encoding. Data structures are defined in .proto files and compiled by the protoc compiler into code for many programming languages, which provides strong typing and supports schema evolution. On the wire, each field is identified by a small numeric tag rather than by its name, and integers are written in variable-length form. While not human-readable, this format dramatically reduces both payload size and parsing time - an essential edge when dealing with large volumes of structured data.
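In practice protoc generates the encoding code for you, but hand-encoding a tiny message shows where the savings come from. This stdlib-only Python sketch encodes a hypothetical message `User { int32 id = 1; string name = 2; }` using protobuf's tag-plus-varint wire format; the message and function names are illustrative, and the sketch only handles non-negative integers.

```python
import json

VARINT, LEN = 0, 2  # protobuf wire types used below


def encode_varint(value: int) -> bytes:
    """Base-128 varint: 7 payload bits per byte, high bit set when more bytes follow."""
    if value < 0:
        raise ValueError("this sketch handles non-negative values only")
    out = bytearray()
    while True:
        low7 = value & 0x7F
        value >>= 7
        if value:
            out.append(low7 | 0x80)
        else:
            out.append(low7)
            return bytes(out)


def encode_tag(field_number: int, wire_type: int) -> bytes:
    # Each field is prefixed with (field_number << 3) | wire_type instead of its name.
    return encode_varint((field_number << 3) | wire_type)


def encode_user(user_id: int, name: str) -> bytes:
    """Hand-encode the hypothetical message: User { int32 id = 1; string name = 2; }"""
    name_bytes = name.encode("utf-8")
    return (
        encode_tag(1, VARINT) + encode_varint(user_id)
        + encode_tag(2, LEN) + encode_varint(len(name_bytes)) + name_bytes
    )


if __name__ == "__main__":
    proto = encode_user(150, "Ada")
    as_json = json.dumps({"id": 150, "name": "Ada"}, separators=(",", ":")).encode()
    print(len(proto), len(as_json))  # 8 23
```

The encoded bytes are `08 96 01 12 03 41 64 61`: one byte of tag per field, two bytes for the number 150, and a length-prefixed string, versus 23 bytes for the most compact JSON equivalent.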

Compression Support

gRPC comes with built-in, per-message compression to shrink API payloads. gzip is supported across the major gRPC implementations; some also offer deflate and let you register additional codecs, but Brotli is not a standard built-in option. Compression can be enabled for a whole channel or selectively for specific calls, giving developers precise control over bandwidth usage. Lighter codecs keep CPU overhead low, while stronger ones shrink payloads further at a higher processing cost. These features help minimize payload sizes and improve overall system performance.
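Under the hood, the gRPC-over-HTTP/2 protocol prefixes every message with a 1-byte "compressed" flag and a 4-byte big-endian length, and the chosen algorithm is announced in the `grpc-encoding` header. The Python sketch below mimics that framing with the stdlib `gzip` module so the per-message decision is visible. It is not a gRPC library: `frame_message` and `unframe_message` are hypothetical helpers, and real applications enable compression through their gRPC implementation's channel or call options.

```python
import gzip
import struct


def frame_message(payload: bytes, compress: bool) -> bytes:
    """Wrap one serialized message in gRPC-style length-prefixed framing."""
    body = gzip.compress(payload) if compress else payload
    # 1-byte compressed flag, then a 4-byte big-endian length, then the body.
    return struct.pack(">BI", 1 if compress else 0, len(body)) + body


def unframe_message(frame: bytes) -> bytes:
    """Reverse frame_message for a single frame."""
    flag, length = struct.unpack(">BI", frame[:5])
    body = frame[5:5 + length]
    if len(body) != length:
        raise ValueError("truncated frame")
    return gzip.decompress(body) if flag == 1 else body
```

Because the flag travels with each message, a sender can skip compression for tiny messages (where gzip's header would outweigh the savings) and apply it to large ones on the same connection.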

Performance

When it comes to raw performance, Protocol Buffers leave JSON far behind. Benchmarks consistently show that binary serialization with Protocol Buffers is faster and produces smaller payloads. Some real-world reports, from applications such as recommendation engines and genomic data analysis, cite processing speedups of up to 500× after switching to Protocol Buffers, although gains that large are workload-specific and typical serialization speedups are far more modest. Even so, the boost is a game-changer for high-throughput environments.

Integration and Ecosystem

gRPC with Protocol Buffers is designed to work seamlessly across a wide array of programming languages, including Python, Java, Go, and C#. It offers robust tools for code generation, service discovery, and load balancing, making it easy to integrate into modern microservices and cloud-native setups. Built on the HTTP/2 transport layer, it supports advanced features like multiplexing and bidirectional streaming, giving developers greater flexibility in API communication. These capabilities make gRPC a go-to solution for handling modern API challenges.

Security Features

Beyond its speed and efficiency, gRPC also prioritizes security. It uses transport layer security (TLS) to encrypt communication and supports authentication methods such as OAuth and JWT. Access control policies ensure that only authorized clients can interact with API endpoints, making gRPC a dependable option for enterprise-grade applications where security is non-negotiable.

3. Apache Avro

Apache Avro is a serialization framework designed to handle big data efficiently, with a strong focus on schema evolution and seamless integration with Hadoop-based tools. Created by the Apache Software Foundation, it’s particularly suited for data-heavy applications that require long-term compatibility and adaptable data management.

Serialization Format

Avro uses JSON to define schemas and a compact binary format for data serialization. This combination provides human-readable schemas while ensuring efficient storage. Because the schema fixes the order and type of every field, the binary records carry no field names or tags at all. A reader always needs the writer's schema: Avro data files embed it once in the file header, while messaging systems typically send a schema ID or fingerprint with each message and look the full schema up in a registry. This adds a small amount of overhead, but it ensures compatibility and simplifies data handling across different systems.

The binary format is very compact, often much smaller than the equivalent JSON, though the exact savings depend on the data. This balance between readability and storage efficiency is one of Avro's standout features.
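To make the "schema in JSON, data in binary" split concrete, here is a stdlib-only Python sketch of Avro's binary encoding for a small subset of types (long, string, and record). The `Reading` schema and the helper names are hypothetical; real projects would use an Avro library such as the official `avro` package or `fastavro`.

```python
import json

# Hypothetical schema, written in JSON as Avro requires.
SCHEMA = json.loads("""
{"type": "record", "name": "Reading",
 "fields": [{"name": "sensor", "type": "string"},
            {"name": "value",  "type": "long"}]}
""")


def zigzag_varint(n: int) -> bytes:
    """Avro int/long encoding: zig-zag map to unsigned, then base-128 varint."""
    u = (n << 1) ^ (n >> 63)  # 0 -> 0, -1 -> 1, 1 -> 2, -2 -> 3, ...
    out = bytearray()
    while u > 0x7F:
        out.append((u & 0x7F) | 0x80)
        u >>= 7
    out.append(u)
    return bytes(out)


def encode(datum, schema) -> bytes:
    """Encode a datum for a subset of Avro types: int, long, string, record."""
    t = schema["type"] if isinstance(schema, dict) else schema
    if t in ("int", "long"):
        return zigzag_varint(datum)
    if t == "string":
        raw = datum.encode("utf-8")
        return zigzag_varint(len(raw)) + raw  # length prefix, then UTF-8 bytes
    if t == "record":
        # Fields are written in schema order, with no names or tags.
        return b"".join(encode(datum[f["name"]], f["type"]) for f in schema["fields"])
    raise NotImplementedError(t)
```

Encoding `{"sensor": "t1", "value": -3}` against this schema produces just 4 bytes (`04 74 31 05`), compared with 26 bytes for the compact JSON text `{"sensor":"t1","value":-3}`. The trade-off is that the bytes are meaningless without the schema.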

Compression Support

Avro has compression built into its object container file format: records are written in blocks, and each block can be compressed with a codec declared in the file header, such as deflate or Snappy. Unlike JSON, which often relies on external tools like GZIP or Brotli for compression, Avro applies compression within its own framework, which helps conserve bandwidth and storage.

Avro's compact encoding also makes it a natural fit for streaming platforms like Apache Kafka, where reducing data size can directly enhance performance and lower operational costs. Note that Kafka messages are not Avro container files, so in Kafka compression is usually configured on the producer (for example, with the `compression.type` setting) rather than by Avro itself.
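In the container file format, each data block consists of a record count, the block's byte size after compression, the compressed records, and a 16-byte sync marker; the specification requires the `null` and `deflate` codecs and lists others such as `snappy`, `bzip2`, `xz`, and `zstandard` as optional. The Python sketch below assembles a single deflate-compressed block with the stdlib `zlib` module. It omits the file header (magic bytes, metadata, and schema), and the helper names and sample records are hypothetical.

```python
import os
import zlib


def zigzag_varint(n: int) -> bytes:
    """Avro long encoding: zig-zag map to unsigned, then base-128 varint."""
    u = (n << 1) ^ (n >> 63)
    out = bytearray()
    while u > 0x7F:
        out.append((u & 0x7F) | 0x80)
        u >>= 7
    out.append(u)
    return bytes(out)


def deflate_raw(data: bytes) -> bytes:
    # Avro's "deflate" codec is raw RFC 1951 deflate: no zlib header or checksum.
    compressor = zlib.compressobj(level=6, wbits=-15)
    return compressor.compress(data) + compressor.flush()


def inflate_raw(data: bytes) -> bytes:
    return zlib.decompress(data, wbits=-15)


def write_block(encoded_records: list, sync_marker: bytes) -> bytes:
    """One data block: record count, byte size, compressed records, sync marker."""
    payload = deflate_raw(b"".join(encoded_records))
    return (zigzag_varint(len(encoded_records)) + zigzag_varint(len(payload))
            + payload + sync_marker)


if __name__ == "__main__":
    records = [b"\x04t1\x05"] * 500   # 500 pre-encoded records (hypothetical)
    marker = os.urandom(16)            # in a real file, the marker comes from the header
    block = write_block(records, marker)
    print(len(b"".join(records)), "->", len(block), "bytes")
```

Compressing per block rather than per record lets the codec exploit repetition across many records, which is where most of the savings come from in large data files.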

Performance

While Avro’s serialization speed isn’t the fastest among binary formats, its small payloads and compact files make it highly scalable in big data environments, particularly on streaming and analytics platforms that move large volumes of records continuously.

Integration and Ecosystem

What sets Avro apart is its deep integration with Apache Hadoop and its compatibility with other big data tools like Apache Kafka and Apache Spark. Its language-agnostic design is backed by libraries for languages such as Java, Python, and C++; Java projects can generate classes from schemas, while dynamic languages typically work with generic records. This makes it versatile for diverse development environments.

Avro’s schema evolution capabilities are another major advantage. They allow you to add, remove, or modify fields without breaking compatibility with existing data. This flexibility is invaluable for enterprises that need to adapt their data structures over time without undergoing costly migrations, making Avro an excellent fit for evolving big data pipelines.
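The Avro specification defines how a reader resolves data written with a different schema version: fields are matched by name, fields that only the writer has are ignored, and fields that only the reader has take their declared default (resolution fails if there is no default). The Python sketch below applies those three rules to an already-decoded record. It deliberately skips type promotion, aliases, and nested types, and the schemas are hypothetical.

```python
# Hypothetical schemas: the writer still has "legacy_id"; the reader added "unit".
WRITER_FIELDS = [{"name": "sensor", "type": "string"},
                 {"name": "value", "type": "long"},
                 {"name": "legacy_id", "type": "long"}]
READER_FIELDS = [{"name": "sensor", "type": "string"},
                 {"name": "value", "type": "long"},
                 {"name": "unit", "type": "string", "default": "celsius"}]


def resolve(record: dict, writer_fields: list, reader_fields: list) -> dict:
    """Project a record decoded with the writer's schema onto the reader's schema."""
    written = {f["name"] for f in writer_fields}
    result = {}
    for field in reader_fields:
        name = field["name"]
        if name in written:
            result[name] = record[name]       # present in both: matched by name
        elif "default" in field:
            result[name] = field["default"]   # reader-only field: use its default
        else:
            raise ValueError(f"reader field {name!r} has no default")
    return result  # writer-only fields (here, legacy_id) are dropped
```

Old data read with the new schema gains `"unit": "celsius"` and loses `legacy_id`, with no migration of the stored files. This is why adding fields *with defaults* is the standard safe evolution step.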

Security Features

Avro doesn’t include built-in encryption or authentication, so securing its deployment environment is essential. Typically, it relies on being implemented within secure infrastructures like Hadoop clusters, where encryption and access controls are managed at the system level.

When incorporating Avro into your architecture, it’s critical to establish robust data handling practices and ensure the surrounding ecosystem is secure. This means that security becomes an integral part of the overall system design rather than being handled by Avro itself.

4. MessagePack

Moving on from Apache Avro, let's explore MessagePack - a binary serialization format that stands out for its speed and compactness. Unlike formats requiring predefined schemas, MessagePack adapts seamlessly to evolving data structures, making it a flexible option for dynamic applications.

Serialization Format

MessagePack uses a compact binary encoding that reduces payload sizes compared to text-based formats. For example, a typical JSON document of 3,005 bytes shrinks to about 2,455 bytes when serialized with MessagePack, a reduction of roughly 18%. Its schemaless nature means developers don’t need to define data structures beforehand, which is especially useful in fast-changing environments. While its binary format isn’t human-readable like JSON, this trade-off brings notable performance improvements. It efficiently handles common data types such as arrays, maps, integers, floats, and strings.
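The schemaless encoding is simple enough to sketch by hand. This stdlib-only Python encoder covers a subset of the MessagePack specification (nil, booleans, integers, 64-bit floats, strings up to 65,535 bytes, and arrays/maps up to 65,535 entries). The name `packb` mirrors the `msgpack` Python package's function, but this is not that library; production code should use a maintained implementation.

```python
import json
import struct


def packb(obj) -> bytes:
    """Encode a subset of MessagePack: nil, bool, int, float, str, list, dict."""
    if obj is None:
        return b"\xc0"
    if obj is True:          # test bools before ints: bool is a subclass of int
        return b"\xc3"
    if obj is False:
        return b"\xc2"
    if isinstance(obj, int):
        if 0 <= obj <= 0x7F:
            return struct.pack(">B", obj)               # positive fixint
        if -32 <= obj < 0:
            return struct.pack(">b", obj)               # negative fixint
        if 0 <= obj <= 0xFF:
            return b"\xcc" + struct.pack(">B", obj)     # uint 8
        if 0 <= obj <= 0xFFFF:
            return b"\xcd" + struct.pack(">H", obj)     # uint 16
        if -2**31 <= obj < 2**31:
            return b"\xd2" + struct.pack(">i", obj)     # int 32
        return b"\xd3" + struct.pack(">q", obj)         # int 64 (struct.error if out of range)
    if isinstance(obj, float):
        return b"\xcb" + struct.pack(">d", obj)         # float 64
    if isinstance(obj, str):
        raw = obj.encode("utf-8")
        n = len(raw)
        if n < 32:
            return struct.pack(">B", 0xA0 | n) + raw    # fixstr
        if n <= 0xFF:
            return b"\xd9" + struct.pack(">B", n) + raw # str 8
        return b"\xda" + struct.pack(">H", n) + raw     # str 16
    if isinstance(obj, (list, tuple)):
        n = len(obj)
        head = struct.pack(">B", 0x90 | n) if n < 16 else b"\xdc" + struct.pack(">H", n)
        return head + b"".join(packb(x) for x in obj)
    if isinstance(obj, dict):
        n = len(obj)
        head = struct.pack(">B", 0x80 | n) if n < 16 else b"\xde" + struct.pack(">H", n)
        return head + b"".join(packb(k) + packb(v) for k, v in obj.items())
    raise TypeError(f"unsupported type: {type(obj).__name__}")


if __name__ == "__main__":
    doc = {"id": 42, "active": True, "tags": ["a", "b"]}
    print(len(packb(doc)), len(json.dumps(doc, separators=(",", ":"))))  # 23 40
```

The savings come from replacing JSON's quotes, colons, commas, and decimal digits with one-byte type headers that also carry small lengths and values. Strings, by contrast, are copied as-is, which is why text-heavy documents shrink less than numeric ones.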

Compression Support

MessagePack doesn’t include built-in compression, but it integrates well with external tools like LZ4 or Zstd for further size reduction. This layered approach allows developers to choose the compression method that best aligns with their performance needs and infrastructure capabilities.
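Because compression is a separate layer, the serializer and the compressor can be chosen independently. In the sketch below, already-serialized bytes (MessagePack or any other format) are compressed and tagged with a one-byte codec ID so the receiver knows how to reverse the step. The framing and codec IDs are hypothetical, and Python's stdlib `zlib`, `bz2`, and `lzma` stand in for LZ4 and Zstd, which require third-party packages.

```python
import bz2
import lzma
import zlib

# Hypothetical codec registry: ID -> (compress, decompress).
# Stdlib compressors stand in for LZ4/Zstd; swap in real bindings as needed.
CODECS = {
    0: (lambda b: b, lambda b: b),          # identity (no compression)
    1: (zlib.compress, zlib.decompress),
    2: (bz2.compress, bz2.decompress),
    3: (lzma.compress, lzma.decompress),
}


def wrap(serialized: bytes, codec_id: int) -> bytes:
    """Compress already-serialized bytes and prefix a 1-byte codec ID."""
    compress, _ = CODECS[codec_id]
    return bytes([codec_id]) + compress(serialized)


def unwrap(frame: bytes) -> bytes:
    """Read the codec ID and decompress the rest of the frame."""
    _, decompress = CODECS[frame[0]]
    return decompress(frame[1:])
```

Keeping the codec ID in the payload means a service can change compressors later (or pick one per message) without breaking receivers that already understand the registry.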

Performance

When it comes to speed, MessagePack typically encodes and decodes data 2–4 times faster than JSON. Reported payload reductions range from modest (as in the 3,005-byte example above) to 30–50%, depending on the data. In one 2023 benchmark, MessagePack serialized a 1MB data structure in just 5–7 milliseconds, compared to JSON’s 15–20 milliseconds. This level of efficiency makes it a strong choice for high-throughput API services.

Integration and Ecosystem

MessagePack is supported across a wide range of programming languages, including Python, Java, Ruby, JavaScript, Go, and C++. This broad compatibility makes it easy to use in multi-language systems. On platforms like DreamFactory, which don’t support MessagePack natively, it can be added through server-side scripting and custom connectors. This makes it particularly effective for REST APIs where performance and reduced payload size are key priorities.

Security Features

MessagePack doesn’t offer built-in encryption or authentication, so developers must implement security at the application or transport layer. While its binary format does make casual data inspection more difficult, it’s not a substitute for proper security measures. To minimize risks, developers should validate inputs and rely on secure libraries to guard against deserialization attacks.

5. JSON with GZIP/Brotli Compression

JSON with compression has become a go-to choice for API transmission. While it may not be the fastest option for raw serialization, its readability and near-universal compatibility make it a favorite for web APIs.

Serialization Format

JSON is a plain-text format that's easy for humans to read and widely supported across programming languages. The trade-off is speed: in one benchmark, JSON serialization took about 7,045 ns/op, compared to 1,827 ns/op for Protocol Buffers. Its verbose structure, with field names repeated in every object, also means larger payloads before compression. Despite these drawbacks, JSON remains popular because it’s simple to use and integrates well into existing workflows, which is exactly why compression matters so much when JSON is used for API communication.

Compression Support

Compression can shrink JSON payloads significantly, typically by 60–80% with GZIP and 70–90% with Brotli, depending on how repetitive the data is. GZIP is supported by virtually every web server, browser, and HTTP client, making it a reliable default. Brotli delivers better compression ratios but demands more CPU at its higher quality levels, and its support is less universal among older clients, proxies, and tools. Deciding between the two often comes down to factors like payload size, server capacity, and network traffic.
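You can check the ratio on your own payloads in a few lines. This Python sketch gzips a hypothetical, fairly repetitive list response using only the standard library and reports the size reduction; Brotli isn't in the standard library, so measuring it requires a third-party package. The actual percentage depends entirely on the data, so no output is claimed here.

```python
import gzip
import json

# Hypothetical API response: 500 similar records, as list endpoints often return.
records = [{"id": i, "status": "active", "region": "us-east-1", "score": i % 10}
           for i in range(500)]
raw = json.dumps(records, separators=(",", ":")).encode("utf-8")
compressed = gzip.compress(raw, compresslevel=6)

reduction = 1 - len(compressed) / len(raw)
print(f"{len(raw):,} -> {len(compressed):,} bytes ({reduction:.0%} smaller)")
```

Repeated keys such as `"status"` and `"region"` are exactly what gzip's back-references exploit, which is why list-style JSON responses usually compress far better than small, unique objects.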

Performance

Even though JSON’s serialization speed trails behind binary formats, compression helps offset this by cutting down payload sizes. This reduction can lead to faster data transfers, especially over slower connections. For many businesses, the benefits of quicker debugging and streamlined development with JSON often outweigh its slower runtime performance.
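The trade-off described above can be written as a simple break-even condition. Let $S$ be the uncompressed payload size, $r$ the compressed-to-original size ratio, $B$ the available bandwidth, and $t_c$ and $t_d$ the compression and decompression times (network latency and protocol overhead are ignored in this simplified model):

$$
t_c + \frac{r\,S}{B} + t_d \;<\; \frac{S}{B}
\quad\Longleftrightarrow\quad
t_c + t_d \;<\; \frac{(1 - r)\,S}{B}
$$

As a worked example with hypothetical numbers: a 1 MB response ($S = 8$ Mbit) compressed to 30% of its size ($r = 0.3$) over a 10 Mbit/s link saves $(0.7 \times 8)/10 = 0.56$ s of transfer time, so compression pays off unless compressing and decompressing together take longer than about 560 ms. On a 1 Gbit/s link the same budget shrinks to 5.6 ms, which is why compression matters most on slower connections.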

Integration and Ecosystem

JSON’s widespread adoption makes it a natural fit for API workflows. It pairs easily with standard HTTP compression, and many public APIs serve GZIP-compressed JSON to clients that request it. Modern development frameworks also provide built-in support for JSON, making it an appealing option for teams focused on rapid development and broad compatibility. For example, DreamFactory includes built-in compression options for handling JSON in REST APIs, further simplifying its use.
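HTTP compression is negotiated per request: the client lists the codings it accepts in the `Accept-Encoding` header (optionally weighted with `q` values), and the server names the one it used in `Content-Encoding`. The Python sketch below shows the server-side choice. The function names and the server's preference for Brotli over gzip are assumptions, and it simplifies the HTTP rules (for example, it falls back to an uncompressed response instead of returning 406 when nothing acceptable remains).

```python
def parse_accept_encoding(header: str) -> dict:
    """Parse e.g. 'gzip;q=0.8, br' into {'gzip': 0.8, 'br': 1.0}."""
    weights = {}
    for part in header.split(","):
        token, *params = [p.strip() for p in part.split(";")]
        if not token:
            continue
        q = 1.0  # codings without an explicit q value default to 1
        for param in params:
            key, _, value = param.partition("=")
            if key.strip().lower() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0  # treat a malformed weight as "not acceptable"
        weights[token.lower()] = q
    return weights


def choose_encoding(header: str, supported=("br", "gzip")) -> str:
    """Pick the client's highest-weighted coding that the server supports."""
    weights = parse_accept_encoding(header)
    best, best_q = "identity", 0.0
    for coding in supported:  # iteration order = server preference for ties
        q = weights.get(coding, weights.get("*", 0.0))
        if q > best_q:
            best, best_q = coding, q
    return best
```

A typical browser header such as `gzip, deflate, br` selects `br` here, while a client that sends only `deflate` gets an uncompressed response. Whatever is chosen should be echoed in `Content-Encoding`, and responses should carry `Vary: Accept-Encoding` so caches don't mix the variants.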

Security Features

Security for JSON relies primarily on HTTPS/TLS to encrypt data in transit, so JSON API traffic should always be served over HTTPS to prevent interception or tampering. Its text-based format also makes payloads easier to inspect, validate, and sanitize, which helps protect against malformed or malicious input. Together, these make JSON a reliable choice for safe data exchange.
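A minimal version of the "validate and sanitize" step caps the body size before parsing, then checks the decoded structure against what the endpoint expects. In this Python sketch the 64 KB limit and the `customer_id`/`items` fields of a hypothetical "create order" request are assumptions; larger APIs usually express such rules with a JSON Schema validator instead of hand-written checks.

```python
import json

MAX_BODY_BYTES = 64 * 1024  # hypothetical limit; tune for your API

# Hypothetical expected shape of a "create order" request body.
REQUIRED_FIELDS = {"customer_id": int, "items": list}


def parse_order(body: bytes) -> dict:
    """Reject oversized or malformed JSON before any business logic runs."""
    if len(body) > MAX_BODY_BYTES:
        raise ValueError("payload too large")
    try:
        data = json.loads(body)
    except (UnicodeDecodeError, json.JSONDecodeError) as exc:
        raise ValueError(f"malformed JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for name, expected in REQUIRED_FIELDS.items():
        value = data.get(name)
        # bool is a subclass of int in Python, so reject it explicitly.
        if not isinstance(value, expected) or isinstance(value, bool):
            raise ValueError(f"field {name!r} must be {expected.__name__}")
    return data
```

Checking the size first matters: it bounds the memory and CPU an attacker can force the parser to spend before any validation logic runs.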

Pros and Cons Analysis

This section breaks down the key strengths and trade-offs of each tool to help you make an informed choice. Each option has its own set of advantages, tailored to specific use cases.

DreamFactory stands out in enterprise settings where quick deployment matters. It allows instant API generation from databases, supporting over 20 connectors like Snowflake, SQL Server, and MongoDB. With built-in security features - such as RBAC, API key management, and OAuth - it simplifies API creation. However, its performance can depend on the compression and serialization formats you choose.

gRPC with Protocol Buffers is all about speed. Benchmarks typically put its binary serialization at several times faster than JSON (the figures in the table below, 1,827 versus 7,045 ns/op, work out to roughly 3.9×), and it reduces message sizes by up to 34%. Features like HTTP/2 multiplexing and bidirectional streaming make it a great fit for real-time applications. That said, its binary format is not human-readable, which may pose a challenge for developers accustomed to REST.

Apache Avro is ideal for big data workflows where schema evolution is a priority. Its compact binary format integrates seamlessly with tools like Hadoop, Kafka, Spark, and Flink, and it supports backward and forward compatibility through versioned schemas. While it may lag behind other binary formats in serialization speed, its compactness often offsets this in bandwidth-sensitive scenarios.

MessagePack strikes a balance between JSON’s simplicity and binary efficiency. It outperforms JSON in speed while maintaining flexibility, making it a strong choice for performance-driven applications. However, it lacks the extensive tooling and documentation that JSON offers, which might require extra effort during implementation.

JSON with GZIP/Brotli Compression remains a go-to option due to its accessibility and widespread support across various languages and frameworks. Compression can reduce JSON payloads significantly - GZIP by 60–80% and Brotli by 70–90%. Its readability aids debugging, but it’s slower (7,045 ns/op) and has larger pre-compression payloads compared to binary formats.

Here’s a quick comparison of key metrics and use cases for each tool:

| Tool | Serialization Speed | Compression Ratio | Integration Complexity | Security Features | Best Use Case |
|---|---|---|---|---|---|
| DreamFactory | Varies by backend | Depends on chosen format | Low (automated) | Built-in enterprise controls (RBAC, API keys, OAuth) | Rapid database API deployment |
| gRPC + Protobuf | 1,827 ns/op | 34% smaller than JSON | Medium | TLS, OAuth, API keys | High-performance microservices |
| Apache Avro | Moderate | High compression | High (big data focus) | Schema validation, ACLs | Data analytics pipelines |
| MessagePack | Fast | Efficient payload size | Medium | Security measures must be externally implemented | Performance-critical applications |
| JSON + Compression | 7,045 ns/op | 60–90% reduction | Low | HTTPS, OAuth, API keys | Public APIs, web services |

When it comes to security, DreamFactory leads with its enterprise-grade features, acting as a governed API layer where all actions are monitored and controlled. Binary formats like Protobuf and Avro typically rely on transport-layer security and application-level measures, while JSON implementations depend on HTTPS and additional middleware for advanced security.

How to decide? It all comes down to your priorities. If performance is key, binary formats like gRPC and MessagePack are solid choices. For quick deployment and strong security, DreamFactory is a clear winner. JSON with compression works best for compatibility, while Avro is tailored for evolving data analytics needs.

Conclusion

Selecting the best API compression and serialization tool depends on how well it aligns with your specific needs in terms of performance, security, and deployment speed. Here's a quick recap of some standout options and their strengths:

DreamFactory stands out for rapid deployment and enterprise-grade security. Its ability to instantly generate APIs from databases, combined with features like RBAC, OAuth, and API key management, makes it a go-to choice for organizations seeking secure and governed API layers without delay.

For performance-critical scenarios, tools like Protocol Buffers paired with gRPC shine. They can reduce message sizes by up to 34% while delivering exceptional speed, making them ideal for real-time systems, AI tasks, and microservices where every millisecond matters.

In big data ecosystems, Apache Avro excels with its schema evolution capabilities and compact storage. It integrates seamlessly with platforms like Hadoop, Kafka, and Spark, making it a reliable choice for data analytics pipelines requiring long-term compatibility.

For memory-constrained environments, MessagePack offers a practical solution. It trims document size compared to JSON (by about 18% in the 3,005-byte example discussed earlier) while improving memory efficiency. This makes it particularly useful for mobile and IoT applications where resources are limited.

When universal compatibility is a priority, JSON remains the top contender. Combined with compression methods like GZIP or Brotli, it reduces payload size while retaining human readability, which is invaluable for debugging and development. This makes JSON an excellent option for public APIs and web services that require broad client support.

To make the most informed choice, test these tools with your actual data and workloads rather than relying solely on theoretical metrics. Monitor API performance regularly, in particular network latency and CPU usage, and revisit your choice as your system architecture evolves. Adjust compression levels as needed to strike the right balance between payload size and processing cost.
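In the spirit of testing with real data, a small harness like this Python sketch compares gzip compression levels on a captured payload, reporting compressed size and CPU time for each level. The levels and the synthetic sample are placeholders, and timings will vary by machine, so no output is claimed here.

```python
import gzip
import json
import time


def compare_levels(payload: bytes, levels=(1, 6, 9)) -> dict:
    """Return {level: (compressed_size_bytes, seconds)} for each gzip level."""
    results = {}
    for level in levels:
        start = time.perf_counter()
        compressed = gzip.compress(payload, compresslevel=level)
        elapsed = time.perf_counter() - start
        if gzip.decompress(compressed) != payload:  # round-trip sanity check
            raise RuntimeError(f"round trip failed at level {level}")
        results[level] = (len(compressed), elapsed)
    return results


if __name__ == "__main__":
    # Placeholder payload: replace with responses captured from your own API.
    sample = json.dumps([{"id": i, "status": "ok", "note": "x" * (i % 40)}
                         for i in range(2000)]).encode()
    for level, (size, secs) in compare_levels(sample).items():
        print(f"level {level}: {size} bytes in {secs * 1000:.2f} ms")
```

If the higher levels save only a few percent while costing noticeably more CPU, a middle level is usually the better default for dynamic responses; maximum levels make more sense for content that is compressed once and served many times.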

Lastly, serve compressed responses only over HTTPS, and avoid compressing responses that mix secrets (such as CSRF tokens) with attacker-influenced input, since that combination can leak data through BREACH-style attacks. Choose tools that align with your team's expertise and long-term maintenance capabilities. By doing so, you'll ensure your API solution delivers both operational efficiency and strong security.

FAQs

How does DreamFactory make API creation easier than traditional serialization methods?

DreamFactory takes the hassle out of API creation by automating the process, allowing users to generate secure REST APIs in seconds - no coding required. It works by automatically mapping your database schema to an API schema, making it simple to connect with your data sources.

Security is a top priority, and DreamFactory offers built-in features like role-based access control (RBAC), API key management, and various authentication methods to protect your APIs with minimal effort. Plus, its user-friendly interface and support for server-side scripting let you easily customize APIs and add business logic. This approach not only saves time but also eliminates much of the complexity associated with traditional serialization tools.

What makes gRPC with Protocol Buffers a great choice for real-time applications?

gRPC, when combined with Protocol Buffers, is a powerful solution for real-time applications thanks to its low latency, high efficiency, and strong data schema validation. This pairing enables quick and dependable communication, even in intricate systems.

Protocol Buffers handle serialization efficiently, keeping payload sizes small. This reduces bandwidth usage and accelerates data transfer. Additionally, gRPC’s support for bi-directional streaming boosts performance, making it ideal for use cases such as live updates, IoT devices, or collaborative platforms.

When is Apache Avro the best choice for data serialization and compression?

Apache Avro stands out as an excellent option for data serialization and compression, especially when you need flexible schema management, high-speed performance, and compact binary data. It shines in scenarios like real-time data streaming, big data workflows, and situations where bandwidth or storage resources are tight.

One of Avro's key strengths is its ability to work seamlessly with evolving schemas. This makes it a reliable choice for systems where data structures might change over time, ensuring smooth compatibility without disrupting existing operations. On top of that, its lightweight format keeps overhead low, making it a go-to solution for environments where speed and efficiency are non-negotiable.

Kevin Hood is an accomplished solutions engineer specializing in data analytics and AI, enterprise data governance, data integration, and API-led initiatives.