Data extraction is critical in 2025 as companies handle massive data volumes, with the global total projected to reach 175 zettabytes. Here's a quick breakdown of the best methods to extract, manage, and analyze data effectively:

- API Data Extraction: Ideal for structured data. Tools like DreamFactory simplify integration and offer real-time access to databases.

- Web Data Collection: Use AI-powered web scraping for dynamic, semi-structured data with improved accuracy and compliance (e.g., Oxylabs, Scrapfly).

- ETL Systems: Manage and transform diverse data sources with tools like Apache NiFi, Airbyte, and dbt for batch processing and scalability.

- Machine Learning (ML) Extraction: Best for unstructured data like documents. Combines OCR and NLP for 98-99% accuracy.

Quick Comparison Table

| Method | Speed | Setup Complexity | Data Type Support | Scalability |
|---|---|---|---|---|
| API Extraction | Real-time | Moderate | Structured | High |
| Web Scraping | Variable | High | Semi-structured | Moderate |
| ETL Systems | Batch | High | All Types | High |
| ML Extraction | Fast (minutes) | Variable | Unstructured | High |

Key takeaway: Choose API for structured data, AI scraping for market insights, ETL for consistency, and ML for unstructured data. Combining these methods ensures efficient, scalable, and compliant data pipelines in 2025.


1. API Data Extraction

API-based data extraction offers a direct and reliable way to access structured data from various sources.

Take DreamFactory, for instance. It can create REST APIs from any database in just 5 minutes, significantly reducing development time. A practical example? Back in March 2023, Deloitte used DreamFactory to simplify ERP integration, enabling real-time dashboard access.

With roughly 90% of organizational data being unstructured, having tools to extract and standardize data is a game-changer. Modern API extraction tools bring several advantages:

| Feature | Benefit | Impact |
|---|---|---|
| Automated Generation | Quick 5-minute API setup | Speeds up API deployment processes |
| Security Controls | Built-in RBAC & OAuth 2.0 | Reduces common security risks by 99% |
| Database Connectivity | 20+ database connectors | Combines multiple sources into one REST endpoint |
| Format Standardization | Consistent JSON/XML output | Eases integration and analysis tasks |

"DreamFactory streamlines everything and makes it easy to concentrate on building your front end application"

This quote highlights the growing industry preference for automated API tools that cut down on manual coding.

Security Is Key in API Data Extraction

When it comes to APIs, keeping data secure is non-negotiable. Here's how organizations ensure safety:

- Authentication & Authorization: Using OAuth 2.0 and OpenID Connect for secure access.

- Data Protection: HTTPS/TLS encryption and rate limiting safeguard data in transit and prevent misuse.

- Monitoring & Compliance: Automated monitoring and regular audits help maintain compliance and ensure data security.
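
The safeguards above can be sketched from the client side. This is a minimal illustration using only the Python standard library, not any particular vendor's SDK: the function name `fetch_json`, its `opener` and `sleep` parameters, and the token handling are assumptions made for the example. It refuses non-TLS URLs, sends an OAuth 2.0 style bearer token, and backs off when the server's rate limiter answers with HTTP 429.

```python
import json
import time
import urllib.error
import urllib.request


def fetch_json(url, token, max_retries=3,
               opener=urllib.request.urlopen, sleep=time.sleep):
    """GET a JSON document with a bearer token, backing off on HTTP 429.

    `opener` and `sleep` are injectable (hypothetical parameters) so the
    function can be exercised without a network connection.
    """
    if not url.startswith("https://"):
        raise ValueError("refusing to send a token over a non-TLS connection")

    request = urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}",
                 "Accept": "application/json"},
    )
    for attempt in range(max_retries + 1):
        try:
            with opener(request) as response:
                return json.load(response)
        except urllib.error.HTTPError as err:
            # 429 means the rate limiter rejected the call; anything else
            # (or running out of retries) is re-raised to the caller.
            if err.code != 429 or attempt == max_retries:
                raise
            # Honor Retry-After when it is a whole number of seconds,
            # otherwise fall back to exponential backoff.
            retry_after = err.headers.get("Retry-After") if err.headers else None
            if retry_after and retry_after.isdigit():
                delay = float(retry_after)
            else:
                delay = float(2 ** attempt)
            sleep(delay)
```

A call would look like `fetch_json("https://api.example.com/v1/customers", token)`, where the URL is a placeholder. Acquiring and refreshing the token is left out, since that flow depends on the identity provider in use.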

According to PromptCloud, businesses leveraging API-based extraction can consistently automate the collection of large-scale, real-time data. This is crucial for organizations that rely on timely, accurate data to make informed decisions.

Up next, we’ll dive into web data collection methods that work hand-in-hand with API extraction to expand data sourcing capabilities.

2. Web Data Collection

Modern web data collection is evolving, with AI playing a key role in improving efficiency and accuracy alongside traditional API-driven methods.

AI-Driven Scraping: A Game Changer

AI-powered tools are transforming web scraping. For example, Oxylabs' AI tool increased extraction accuracy by 30%, ensuring reliable data collection even as websites constantly update their structures. This shift highlights how AI is changing the way data is gathered.

Here’s a quick comparison between traditional and AI-driven scraping methods:

| Feature | Traditional Scrapers | AI-Driven Scrapers |
|---|---|---|
| Rules and Flexibility | Fixed rules, less flexible | Adaptive algorithms |
| Data Types | Simple, structured data | Handles complex layouts, multimedia |
| Maintenance | Requires frequent updates | Self-adjusting to changes |
| Cost | Lower upfront costs | Higher initial cost but better long-term value |
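
To make the "fixed rules" row concrete, here is a small sketch of a traditional rule-based scraper built on Python's standard `html.parser`. The HTML snippets and the `price` class name are invented for illustration. The rule works until the site renames the class, at which point it silently returns nothing, which is the maintenance burden the table describes.

```python
from html.parser import HTMLParser


class PriceParser(HTMLParser):
    """Collects text inside <span class="price"> elements (one fixed rule)."""

    def __init__(self):
        super().__init__()
        self._in_price = False
        self.prices = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; the rule matches one exact class.
        if tag == "span" and ("class", "price") in attrs:
            self._in_price = True

    def handle_endtag(self, tag):
        if tag == "span":
            self._in_price = False

    def handle_data(self, data):
        if self._in_price and data.strip():
            self.prices.append(data.strip())


def extract_prices(html):
    parser = PriceParser()
    parser.feed(html)
    parser.close()
    return parser.prices


# Hypothetical page before and after a front-end redesign.
old_layout = '<div><span class="price">$19.99</span></div>'
new_layout = '<div><span class="product-cost">$19.99</span></div>'

before = extract_prices(old_layout)   # the rule matches
after = extract_prices(new_layout)    # the rule no longer matches anything
```

An adaptive scraper would try to recognize the price from its content or context rather than from one hard-coded selector; that part is not shown here.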

Legal Context and Compliance

In Meta v. Bright Data (2024), a U.S. federal court found that Meta's terms of service did not bar logged-out scraping of publicly available data. The ruling turned on the specifics of that case, so staying compliant with regulations remains essential:

"Web scraping is not illegal, but it has been regulated in the past 10 years via privacy regulations which limit the crawl rate for a website." - Cem Dilmegani, Principal Analyst at AIMultiple

Adhering to privacy laws like GDPR and CCPA is non-negotiable for ethical web scraping.

Cutting-Edge Tools and Trends

Tools like Scrapfly's AI Web Scraping API are leading the way in modern data collection. With over 30,000 developers using it, this tool leverages natural language commands to extract structured data seamlessly.

Julius Černiauskas, CEO of Oxylabs, shares insight into the industry's future:

"Web scraping professionals are generally happy with the results of AI adoption. Thus, we might see a proliferation in AI and ML-based web scraping solutions for target unblocking, proxy management, parsing, and other tasks."

AI and machine learning are driving advancements in areas like proxy management and data parsing, making web scraping more efficient and versatile.

Key Implementation Steps

For organizations planning to implement web scraping in 2025, here are a few considerations:

- Review robots.txt and terms of service: Ensure compliance with website policies.

- Choose the right tools: Decide between traditional scrapers and AI-powered solutions based on the complexity of the data.

- Monitor accuracy: Regularly evaluate extraction performance and fine-tune strategies.

- Stay compliant: Follow GDPR, CCPA, and other applicable regulations.
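
The first step, reviewing robots.txt, can be automated with Python's standard `urllib.robotparser`. The sketch below parses an invented robots.txt from a string so it runs offline; the `example-bot` user agent and the URLs are placeholders. In a real crawler the file would be fetched from the target site, and terms of service still need a human read.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example needs no network.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_policy(robots_txt):
    """Parse robots.txt text into a queryable policy object."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def allowed_urls(policy, user_agent, urls):
    """Keep only the URLs the policy lets this user agent fetch."""
    return [url for url in urls if policy.can_fetch(user_agent, url)]


policy = build_policy(ROBOTS_TXT)
candidates = [
    "https://example.com/products/1",
    "https://example.com/private/report",
]
to_crawl = allowed_urls(policy, "example-bot", candidates)

# Seconds to wait between requests, or None if the site does not specify one.
delay = policy.crawl_delay("example-bot")
```

Filtering the URL list up front, rather than checking inside the fetch loop, keeps the compliance decision in one place where it is easy to audit.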

Cloud-based browsers and multi-agent systems are also enhancing the reliability of web scraping. These technologies mimic human browsing behavior, improving both efficiency and ethical practices.

3. ETL Systems

ETL (extract, transform, load) systems pull data from multiple sources, reshape it into a consistent form, and load it into a target store such as a data warehouse. They usually pick up where API and web data collection leave off, turning raw feeds into data that downstream teams can query.

Modern ETL Tools Comparison

Today’s ETL ecosystem is shaped by three standout tools, each offering unique features:

| Feature | Apache NiFi | Airbyte | dbt |
|---|---|---|---|
| Primary Focus | Real-time data flow | Data integration | Data transformation |
| Key Strength | Complex routing | 550+ connectors | SQL-based modeling |
| Best Use Case | Stream processing | Source connectivity | Warehouse transformation |
| Scalability | High | Very High | High |

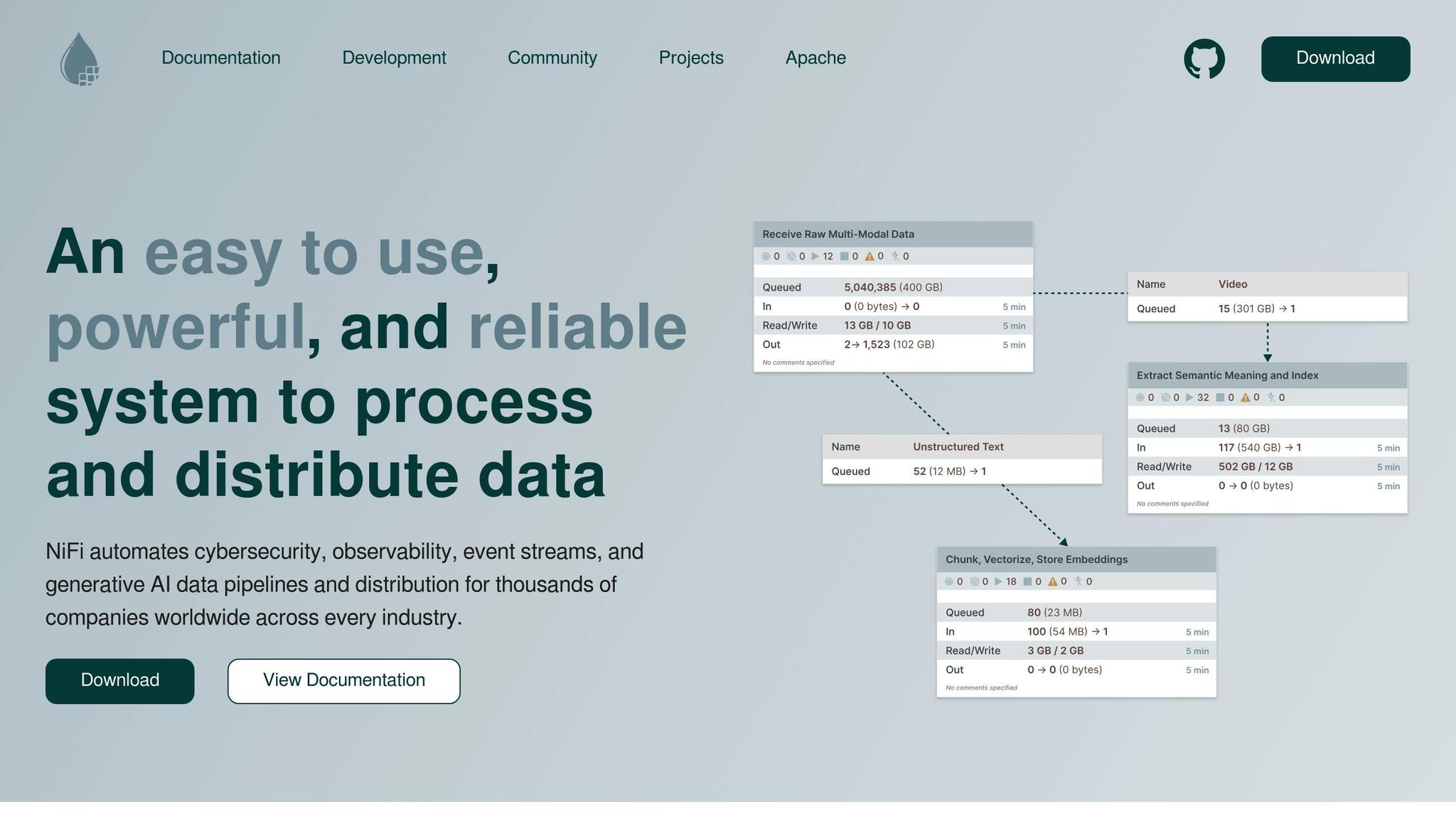

Real-Time Processing with Apache NiFi

Apache NiFi is a standout for managing real-time data flows, thanks to its user-friendly graphical interface. For instance, a financial services company reported a 40% reduction in processing time after adopting NiFi.

"Apache NiFi's ability to manage complex data flows in real-time is essential for organizations that require immediate insights from their data." - NiFi Development Team

Airbyte's Integration Power

Airbyte has redefined data integration with its extensive library of over 550 connectors and modular framework. Its community-driven development model enables rapid innovation. In November 2024, Airbyte showcased major performance gains by introducing parallel processing, allowing tasks to run simultaneously across multiple nodes.

"Airbyte's community-driven approach allows for rapid development and customization of connectors, making it a powerful tool for modern data integration." - Airbyte Team

Optimizing ETL Performance

To ensure ETL systems operate at peak efficiency, several optimization techniques are commonly used:

- Partitioning Data: Enables parallel processing for faster execution.

- Incremental Updates: Processes only new data, reducing overhead.

- Caching Mechanisms: Speeds up retrieval of frequently accessed data.
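
Of these techniques, incremental updates are the easiest to show in a few lines. The sketch below assumes each source row carries an `updated_at` timestamp and that the pipeline stores a "watermark" between runs; the function name and the sample rows are made up for illustration.

```python
from datetime import datetime, timezone


def extract_incremental(rows, watermark):
    """Return rows changed after `watermark` and the watermark for the next run."""
    fresh = [row for row in rows if row["updated_at"] > watermark]
    # If nothing changed, keep the old watermark so the next run
    # starts from the same point.
    next_watermark = max((row["updated_at"] for row in fresh), default=watermark)
    return fresh, next_watermark


# Hypothetical source table with a last-modified column.
SOURCE_ROWS = [
    {"id": 1, "updated_at": datetime(2025, 1, 1, tzinfo=timezone.utc)},
    {"id": 2, "updated_at": datetime(2025, 1, 3, tzinfo=timezone.utc)},
    {"id": 3, "updated_at": datetime(2025, 1, 5, tzinfo=timezone.utc)},
]

last_run = datetime(2025, 1, 2, tzinfo=timezone.utc)
fresh_rows, last_run = extract_incremental(SOURCE_ROWS, last_run)

# A second run with no new changes picks up nothing.
second_rows, last_run = extract_incremental(SOURCE_ROWS, last_run)
```

One caveat with the strict `>` comparison: a row written later with exactly the watermark's timestamp would be missed. Pipelines that cannot rule this out often re-read a small overlap window and de-duplicate by key.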

Integration Best Practices

When deploying ETL systems, consider these best practices for smoother integration and better results:

- Data Cleaning: Remove unnecessary or irrelevant data at the source.

- Automated Quality Checks: Use tools to validate and maintain data consistency.

- Performance Monitoring: Track system metrics to identify and resolve bottlenecks.
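
An automated quality check can be as simple as a validation pass that runs before loading. The following sketch is illustrative only: the required fields (`id`, `email`, `amount`) and the rule that `amount` must be numeric are assumptions, not a schema from any specific tool.

```python
def validate_record(record, required=("id", "email", "amount")):
    """Return a list of problems found in one record (empty means valid)."""
    problems = []
    for field in required:
        if record.get(field) in (None, ""):
            problems.append(f"missing {field}")
    amount = record.get("amount")
    if amount not in (None, "") and not isinstance(amount, (int, float)):
        problems.append("amount is not numeric")
    return problems


def split_valid(records):
    """Separate clean records from rejected ones, keeping the reasons."""
    valid, rejected = [], []
    for record in records:
        problems = validate_record(record)
        if problems:
            rejected.append((record, problems))
        else:
            valid.append(record)
    return valid, rejected


# Hypothetical batch arriving from an upstream source.
batch = [
    {"id": 1, "email": "a@example.com", "amount": 10.5},
    {"id": 2, "email": "", "amount": 3},
    {"id": 3, "email": "c@example.com", "amount": "12,00"},
]
valid, rejected = split_valid(batch)
```

Keeping the rejection reasons alongside each record gives the monitoring step something concrete to count and alert on.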

Hybrid Approaches

A growing trend among organizations is the hybrid strategy: using tools like Airbyte for data extraction and loading, paired with dbt for in-warehouse transformations. This approach takes advantage of each tool’s strengths while keeping the data infrastructure flexible. By separating extraction from transformation, teams can tune each stage to its own needs without compromising efficiency. Because dbt specializes in SQL-based transformations, it slots in alongside other ETL components, which reinforces the value of modularity in modern data workflows. These hybrid setups tie API extraction, web collection, and ETL systems into one cohesive data management framework.
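
The split between loading and in-warehouse transformation can be illustrated without either tool. The sketch below uses Python's built-in `sqlite3` as a stand-in warehouse: raw rows are loaded untouched, then a SQL statement builds a derived table, similar in spirit to a SQL model. Table names, columns, and data are invented, and nothing here uses the Airbyte or dbt APIs.

```python
import sqlite3


def load_raw(conn, orders):
    """Extract + load: land source rows as-is in a raw table."""
    conn.execute(
        "CREATE TABLE raw_orders "
        "(id INTEGER, customer TEXT, amount_cents INTEGER, status TEXT)"
    )
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?, ?)", orders)


def build_customer_revenue(conn):
    """Transform: derive a reporting table inside the warehouse with SQL."""
    conn.execute(
        """
        CREATE TABLE customer_revenue AS
        SELECT customer, SUM(amount_cents) / 100.0 AS revenue_usd
        FROM raw_orders
        WHERE status = 'paid'
        GROUP BY customer
        """
    )


# Hypothetical source data.
orders = [
    (1, "acme", 1250, "paid"),
    (2, "acme", 800, "refunded"),
    (3, "globex", 4300, "paid"),
]

conn = sqlite3.connect(":memory:")
load_raw(conn, orders)
build_customer_revenue(conn)
revenue = conn.execute(
    "SELECT customer, revenue_usd FROM customer_revenue ORDER BY customer"
).fetchall()
```

Because the raw table is never modified, the transformation can be rewritten and rerun later without extracting the data again, which is the main practical benefit of keeping the two stages separate.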

4. Machine Learning Extraction

Machine learning (ML) has reshaped how data is extracted, especially from complex and unstructured sources. By combining Optical Character Recognition (OCR) with Natural Language Processing (NLP), ML-driven extraction achieves impressive accuracy and efficiency. Unlike traditional ETL pipelines, ML systems can dynamically process and interpret unstructured data.

How OCR and NLP Work Together

Modern ML systems integrate OCR and NLP to deliver 98-99% accuracy in data extraction, far surpassing manual methods. Here's how these technologies complement each other:

| Feature | OCR Role | NLP Role |
|---|---|---|
| Document Conversion | Converts physical text into digital form | Analyzes context and meaning |
| Data Organization | Maps out text structure and layout | Identifies relationships between elements |
| Accuracy | Achieves near-perfect character recognition | Improves understanding of context |
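
The hand-off between the two stages can be sketched in a simplified form. In the example below the OCR step is assumed to have already produced plain text, and regular expressions stand in for the NLP stage; a production system would use trained models rather than fixed patterns. The invoice text, field names, and patterns are all invented for illustration.

```python
import re

# Hypothetical text as it might come out of an OCR engine.
OCR_TEXT = """\
INVOICE
Invoice No: INV-2041
Date: 2024-11-05
Total Due: $1,284.50
"""

# Simplified stand-in for the NLP stage: one pattern per field.
FIELD_PATTERNS = {
    "invoice_number": re.compile(r"Invoice No:\s*(\S+)"),
    "date": re.compile(r"Date:\s*(\d{4}-\d{2}-\d{2})"),
    "total": re.compile(r"Total Due:\s*\$([\d,]+\.\d{2})"),
}


def extract_fields(text):
    """Turn OCR text into a structured record; missing fields become None."""
    fields = {}
    for name, pattern in FIELD_PATTERNS.items():
        match = pattern.search(text)
        fields[name] = match.group(1) if match else None
    if fields["total"] is not None:
        # Normalize "1,284.50" into a number for downstream use.
        fields["total"] = float(fields["total"].replace(",", ""))
    return fields


record = extract_fields(OCR_TEXT)
```

Returning `None` for fields that were not found, instead of raising, lets a review queue pick up incomplete documents without stopping the batch.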

Real-World Success Stories

In November 2024, Docsumo implemented ML-driven extraction for a leading financial institution, cutting loan application processing time by 40%.

"Machine learning significantly enhances data extraction processes, providing numerous advantages that improve efficiency, accuracy, and adaptability." - KlearStack

Industry-Specific Use Cases

In finance, PwC utilized AI for tax notice processing, reducing time spent by 16% and saving over 5 million hours.

Handling Complex Documents

ML-based systems are particularly effective for processing intricate document types:

- Taggun's Multi-Model Approach: Handles receipts and invoices with high precision, even from low-quality images, by using multiple ML models simultaneously.

- Klippa's DocHorizon: Merges OCR and NLP to automate document workflows, cutting operational costs significantly.

Rapid Market Growth

The NLP market is projected to grow from $8.21 billion in 2024 to $33.04 billion by 2030. These advancements enable scalable automation for a wide range of document types.

Key Benefits for Organizations

Businesses adopting ML-driven extraction often see:

| Benefit | Impact | Example |
|---|---|---|
| Time Savings | Cuts processing time by 30-40% | Financial services |
| Fewer Errors | Achieves 98-99% accuracy | Healthcare records |
| Lower Costs | Reduces operational expenses | Insurance claims |
| Scalability | Handles growing document volumes | Legal sector |

"Basic AI extraction methods can save 30–40% of processing hours." - PwC

With developments like multimodal learning and generative AI, ML systems are becoming even more capable of tackling complex documents. This progress strengthens their role in delivering fast and accurate insights for 2025 and beyond.

Method Comparison

This section breaks down data extraction methods based on performance, ease of setup, and ability to scale.

Core Performance Metrics

| Method | Speed | Setup Complexity | Data Source Support | Processing Power | Scalability |
|---|---|---|---|---|---|
| API Extraction | Real-time | Moderate | Structured | Low | High |
| Web Scraping | Variable | High | Semi-structured | Variable | Moderate |
| ETL Systems | Batch | High | Diverse (all types) | High | High |
| ML Extraction | Fast (minutes) | Variable | Unstructured | High | High |

These metrics come to life when applied to practical use cases.

Real-World Implementation Results

For example, Adverity's implementation automated data workflows, saving over 400 hours annually. Similarly, Spotify leveraged API-based extraction to cut email bounce rates from 12.3% to 2.1% in just 60 days.

Scalability Considerations

Large enterprises often juggle around 400 data sources, making scalability a key factor. Each method has its strengths. Modern tools, particularly those using APIs and webhooks, simplify data workflows and reduce friction.

"The right tool turns data extraction from a development bottleneck into a streamlined operation anyone on your data team can manage."

Choosing the best method depends on the complexity of your data and the resources you have available. For structured data, API extraction is reliable and straightforward. ML-based techniques, on the other hand, are ideal for unstructured data: they can process thousands of documents in minutes, a task that could take days with older methods.

Conclusion

With the ever-growing volume of data, choosing the right extraction method has become more important than ever. Different methods shine in different scenarios: API extraction works best for structured data, AI-enhanced web scraping provides real-time market insights, ETL systems ensure consistency across varied sources, and ML-driven techniques handle unstructured data with finesse. Together, these methods help create efficient, integrated data pipelines that can meet changing business needs.

Web scraping plays a key role in competitive intelligence, helping e-commerce businesses implement dynamic pricing strategies to boost profits. Tools like Hevo Data and Fivetran ensure ETL systems maintain consistency and compliance. Meanwhile, AI-based methods revolutionize unstructured data processing, and APIs remain the go-to for structured data extraction.

"AI must be part of the data strategy for 2025 - whether for personalized customer engagement or predictive maintenance"

Emerging trends reinforce the importance of these approaches. Here's a quick look:

| Trend | Impact | Timeline |
|---|---|---|
| Real-time Analytics | Profitability up by 5–6% | Immediate |
| AI Automation | Processing speeds 10× faster | 2025–2026 |
| Privacy Compliance | Stronger data governance | Ongoing |

The data analytics market is expected to hit $132.9 billion by 2026, highlighting the need for well-thought-out extraction strategies.

"AI-powered data extraction tools use machine learning and natural language processing to handle complex datasets with precision and speed"

To succeed in 2025 and beyond, organizations must combine these methods wisely. Building scalable, compliant data pipelines that align with both technical requirements and the complexity of their data will be critical.


Kevin Hood is an accomplished solutions engineer specializing in data analytics and AI, enterprise data governance, data integration, and API-led initiatives.
