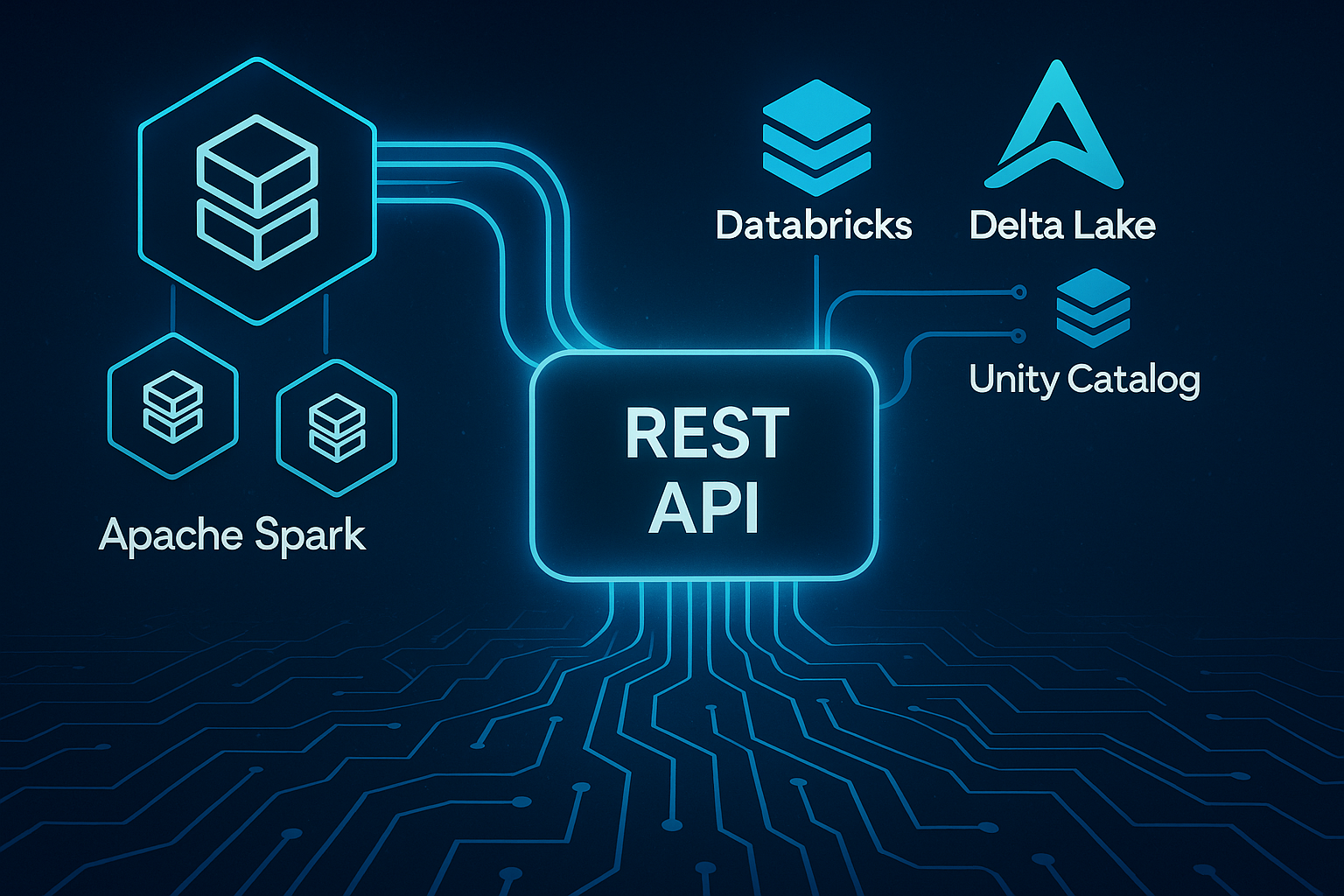

Executive Summary: DreamFactory's df-spark integration provides a production-ready, plug-and-play connector that automatically generates REST APIs for Apache Spark clusters, Databricks SQL Warehouses, Delta Lake tables, and Unity Catalog resources. This integration enables organizations to expose Spark data through standardized REST endpoints without writing custom API code, supporting both SQL-based workloads and advanced Spark Connect operations. The implementation follows DreamFactory's modular architecture, requires no changes to core DreamFactory code, and delivers enterprise-grade security, authentication, and access control for big data analytics platforms.

Understanding the Apache Spark and Databricks Ecosystem

Apache Spark Architecture

Apache Spark is a distributed computing framework designed for large-scale data processing and analytics. Spark provides a unified engine for batch processing, stream processing, machine learning, and SQL analytics across distributed datasets. The Spark ecosystem includes several key components:

- Spark Core: Distributed task execution engine with resilient distributed datasets (RDDs)

- Spark SQL: SQL query interface with DataFrame and Dataset APIs for structured data

- Spark Connect: gRPC-based protocol for remote Spark cluster connectivity (Spark 3.4+)

- Delta Lake: Open-source storage layer providing ACID transactions on data lakes

- Unity Catalog: Unified governance layer for data and AI assets across clouds

Databricks Platform

Databricks is a commercial platform built on Apache Spark that provides managed Spark infrastructure with enterprise features. Databricks offers two primary compute options:

- SQL Warehouses: Optimized for BI and SQL analytics with HTTP/Thrift protocol connectivity, faster startup times (10-20 seconds), auto-scaling capabilities, and simplified connection management

- All-Purpose Clusters: Full Spark capability including DataFrames, MLlib, and Structured Streaming via Spark Connect (gRPC), longer initialization (30-60 seconds), and complete Spark API access

The API Access Challenge

Organizations using Apache Spark or Databricks face several challenges when exposing data through REST APIs:

- Complex Integration: Direct Spark connectivity requires gRPC client libraries, connection string management, and protocol-specific code

- Authentication Overhead: Managing access tokens, service principal credentials, and Unity Catalog permissions adds complexity

- Custom API Development: Building REST endpoints for each table or query requires significant development effort

- Security Management: Implementing role-based access control (RBAC), rate limiting, and audit logging requires additional infrastructure

- Multiple Protocol Support: Supporting both SQL Warehouse (HTTP) and Spark Connect (gRPC) protocols requires separate codebases

Traditional approaches involve writing custom API layers using frameworks like Flask, Express, or Spring Boot, which requires ongoing maintenance, testing, and documentation. This is where DreamFactory's df-spark integration provides significant value.

DreamFactory df-spark Integration Overview

What is df-spark?

The df-spark package is a production-ready DreamFactory connector that provides automatic REST API generation for Apache Spark and Databricks deployments. As a plug-and-play module, df-spark extends DreamFactory's service-oriented architecture to support Spark data sources without requiring modifications to DreamFactory's core codebase.

The integration follows DreamFactory's established connector pattern (similar to df-sqldb, df-mongodb, df-snowflake) and provides:

- Automatic REST endpoint generation for Delta Lake tables with full CRUD operations

- Spark SQL query execution via REST API with parameterized query support

- Unity Catalog browsing with hierarchical navigation of catalogs, schemas, and tables

- Hybrid connection support for both SQL Warehouses and Spark Connect protocols

- DreamFactory RBAC integration with optional Unity Catalog permission enforcement

- Enterprise security including token encryption, HTTPS enforcement, and audit logging

Architecture and Technical Design

The df-spark architecture implements a multi-layer design that bridges PHP-based DreamFactory with Python-based Spark connectivity:

```text
Client Application
    ↓ (HTTPS REST API)
DreamFactory API Gateway
    ↓ (Laravel Service Layer)
df-spark Service Provider
    ↓ (PHP Connector Layer)
SparkConnector Component
    ↓ (JSON over subprocess)
Python Bridge (spark_connect.py)
    ↓ (Protocol Selection)
    ├─→ SQL Warehouse: databricks-sql-connector (HTTP/Thrift)
    └─→ Spark Connect: PySpark (gRPC)
    ↓
Databricks / Apache Spark Cluster
    ↓
Delta Lake / Unity Catalog
```

Component Responsibilities

ServiceProvider (PHP): Registers 'spark' and 'databricks' service types with DreamFactory's service manager, configures resource routing (_table, _query, _catalog endpoints), and handles Laravel dependency injection and service lifecycle.

SparkConnector (PHP): Validates the configuration (workspace URLs, access tokens, and cluster identifiers); runs the Python bridge as a subprocess, passing it JSON-encoded configuration; parses the JSON response and converts it to DreamFactory's resource format; and handles errors with detailed logging.

Resource Handlers (PHP): Three resource classes implement DreamFactory's REST API patterns: Table.php handles GET/POST/PATCH/DELETE operations on Delta Lake tables, Query.php processes Spark SQL execution with parameter binding, and Catalog.php manages Unity Catalog hierarchy traversal.

Python Bridge (spark_connect.py): This 459-line Python script implements the hybrid connection logic. It detects the connection type (SQL Warehouse or Spark Connect) from the configuration, opens the connection with the matching protocol library, executes SQL queries and returns the results as JSON, serializes datetime, decimal, and binary values, and manages the connection lifecycle and error reporting.
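The PHP-to-Python hand-off described above (JSON configuration in, a JSON result printed to stdout) can be sketched as a small dispatcher. This is a minimal illustration, not the actual spark_connect.py: the `test` action and its success payload mirror the connection test in the testing section of this article, while the `query` action, the `run_bridge` name, and the injected `execute_sql` callable are assumptions made so the sketch runs without a cluster.

```python
import json
import sys
from typing import Callable, Dict, List, Sequence

# Hypothetical executor signature: (service_config, sql) -> list of row dicts.
# In the real bridge this role is filled by databricks-sql-connector or PySpark.
Executor = Callable[[dict, str], List[dict]]


def run_bridge(argv: Sequence[str], execute_sql: Executor) -> Dict:
    """Handle `<action> '<json-config>' [sql]` and return a JSON-ready result."""
    if len(argv) < 2:
        return {"success": False, "error": "usage: <action> '<json-config>' [sql]"}
    action, raw_config = argv[0], argv[1]
    try:
        config = json.loads(raw_config)
    except json.JSONDecodeError as exc:
        return {"success": False, "error": f"invalid JSON config: {exc}"}

    conn_type = "sql_warehouse" if "http_path" in config else "spark_connect"
    try:
        if action == "test":
            rows = execute_sql(config, "SELECT 1 AS test_result")
            label = "SQL Warehouse" if conn_type == "sql_warehouse" else "Spark Connect"
            return {
                "success": True,
                "message": f"{label} connection successful",
                "connection_type": conn_type,
                "test_result": rows[0]["test_result"],
            }
        if action == "query" and len(argv) >= 3:
            rows = execute_sql(config, argv[2])
            return {"success": True, "resource": rows, "row_count": len(rows)}
        return {"success": False, "error": f"unsupported action: {action}"}
    except Exception as exc:  # surface driver/connection errors as JSON, not a traceback
        return {"success": False, "error": str(exc), "connection_type": conn_type}


if __name__ == "__main__":
    # Stand-in executor so the sketch runs without a cluster.
    def fake_executor(config, sql):
        return [{"test_result": 1}]

    print(json.dumps(run_bridge(sys.argv[1:], fake_executor)))
```

Catching every exception at the bridge boundary keeps the contract simple for the PHP side: it always receives parseable JSON with a `success` flag, whether the failure was bad input or a driver error.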

Hybrid Connection Strategy

The Python bridge implements intelligent connection routing:

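```python
def determine_connection_type(config: dict) -> str:
    """Choose the transport from the keys present in the service config."""
    if "http_path" in config:
        return "sql_warehouse"  # Use databricks-sql-connector (HTTP/Thrift)
    elif "cluster_id" in config:
        return "spark_connect"  # Use PySpark (gRPC)
    else:
        return "spark_connect"  # Default fallback, e.g. an sc:// URL for open-source Spark
```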

This approach allows a single codebase to support both Databricks SQL Warehouses (optimized for BI workloads) and all-purpose clusters (full Spark capabilities) based on the provided configuration.
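On the SQL Warehouse path, databricks-sql-connector is opened with `sql.connect(server_hostname=..., http_path=..., access_token=...)`, where the host name must not include a scheme. The helper below is a hypothetical sketch that maps this article's service-config fields (workspace_url, http_path, access_token, and the optional catalog and schema) onto those keyword arguments; it builds the arguments only and makes no network call.

```python
from urllib.parse import urlparse


def sql_warehouse_connect_kwargs(config: dict) -> dict:
    """Map a df-spark-style service config to databricks.sql.connect() kwargs.

    Hypothetical helper: field names follow the example configs in this article.
    """
    url = config["workspace_url"]
    # server_hostname must be a bare host name, so strip any scheme and path.
    host = urlparse(url).hostname if "://" in url else url.split("/")[0]
    kwargs = {
        "server_hostname": host,
        "http_path": config["http_path"],
        "access_token": config["access_token"],
    }
    # Optional initial catalog/schema, if the service config defines them.
    for key in ("catalog", "schema"):
        if config.get(key):
            kwargs[key] = config[key]
    return kwargs


# Usage (requires `pip install databricks-sql-connector` and a reachable warehouse):
# from databricks import sql
# with sql.connect(**sql_warehouse_connect_kwargs(config)) as conn:
#     with conn.cursor() as cursor:
#         cursor.execute("SELECT 1")
#         print(cursor.fetchall())
```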

Key Features and Capabilities

1. Automatic REST API Generation for Delta Lake Tables

Once a Spark service is configured in DreamFactory, all accessible Delta Lake tables automatically receive REST endpoints with full CRUD support:

Query Data with Filtering:

```http
GET /api/v2/databricks/_table/samples.nyctaxi.trips?filter=trip_distance>5&limit=100
```

Insert Records:

```http
POST /api/v2/databricks/_table/my_catalog.my_schema.transactions

{
  "resource": [
    {"transaction_id": "TX123", "amount": 99.99, "timestamp": "2025-01-21T10:00:00Z"},
    {"transaction_id": "TX124", "amount": 149.50, "timestamp": "2025-01-21T10:05:00Z"}
  ]
}
```

Update Records:

```http
PATCH /api/v2/databricks/_table/users?filter=user_id=12345

{
  "email": "newemail@example.com",
  "updated_at": "2025-01-21T10:15:00Z"
}
```

Delete Records:

```http
DELETE /api/v2/databricks/_table/audit_logs?filter=created_at<'2024-01-01'
```

These endpoints support standard DreamFactory query parameters including filter (SQL WHERE clause), limit and offset (pagination), fields (column selection), and order (sorting). The integration handles three-part table names (catalog.schema.table) for Unity Catalog environments and defaults to configured catalog/schema when not specified.
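The name-resolution rule just described (fully qualified names pass through, missing parts come from the service's configured catalog and schema) fits in a few lines. This is a hypothetical sketch: how df-spark treats a two-part `schema.table` name is not stated here, so prefixing the default catalog is an assumption, and backtick-quoted identifiers that contain dots are not handled.

```python
def qualify_table_name(name: str, default_catalog: str, default_schema: str) -> str:
    """Expand a table reference to catalog.schema.table using service defaults.

    Hypothetical helper; the two-part (schema.table) rule is an assumption.
    Does not handle backtick-quoted identifiers that contain dots.
    """
    parts = name.split(".")
    if len(parts) == 3:
        return name                                     # already fully qualified
    if len(parts) == 2:
        return f"{default_catalog}.{name}"              # schema.table: add catalog
    if len(parts) == 1:
        return f"{default_catalog}.{default_schema}.{name}"
    raise ValueError(f"too many name parts: {name!r}")


print(qualify_table_name("users", "main", "default"))  # → main.default.users
```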

2. Spark SQL Query Execution

The _query endpoint provides arbitrary Spark SQL execution with parameterized query support:

```http
POST /api/v2/databricks/_query

{
  "sql": "SELECT passenger_count, AVG(fare_amount) as avg_fare, COUNT(*) as trip_count FROM samples.nyctaxi.trips WHERE tpep_pickup_datetime >= ? GROUP BY passenger_count ORDER BY avg_fare DESC",
  "params": ["2024-01-01"]
}
```

Response:

```json
{
  "resource": [
    {"passenger_count": 1, "avg_fare": 13.42, "trip_count": 152847},
    {"passenger_count": 2, "avg_fare": 14.88, "trip_count": 42156},
    {"passenger_count": 3, "avg_fare": 15.21, "trip_count": 8932}
  ],
  "row_count": 3
}
```

The query endpoint supports parameterized queries (parameter values are bound separately from the SQL text, which prevents SQL injection) and complex Spark SQL including window functions, CTEs, and JOINs, and it returns structured JSON with automatic type conversion. Typical latencies are 1-3 seconds for simple queries on SQL Warehouses and 5-15 seconds for complex aggregations over large datasets.
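From a client's point of view, `_query` is an ordinary JSON POST. The sketch below prepares such a request with only the Python standard library; the host name, the API key value, and the `build_query_request` helper are placeholders, and `X-DreamFactory-API-Key` is the header DreamFactory uses for API key authentication. The SQL text and the parameter values travel as separate JSON fields, which is what lets the server bind them rather than concatenate strings.

```python
import json
import urllib.request


def build_query_request(base_url, service, api_key, sql, params):
    """Prepare (but do not send) a POST to a df-spark service's _query endpoint."""
    body = json.dumps({"sql": sql, "params": list(params)}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/v2/{service}/_query",
        data=body,
        method="POST",
        headers={
            "X-DreamFactory-API-Key": api_key,
            "Content-Type": "application/json",
        },
    )


req = build_query_request(
    "https://dreamfactory.example.com",  # placeholder DreamFactory host
    "databricks",
    "YOUR_API_KEY",  # placeholder key tied to a DreamFactory role
    "SELECT pickup_zip, AVG(fare_amount) AS avg_fare FROM samples.nyctaxi.trips "
    "WHERE tpep_pickup_datetime >= ? GROUP BY pickup_zip",
    ["2024-01-01"],
)
# Sending it is one more call, e.g.:
# with urllib.request.urlopen(req, timeout=60) as resp:
#     payload = json.load(resp)  # {"resource": [...], "row_count": ...}
```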

3. Unity Catalog Integration

Unity Catalog is Databricks' unified governance solution for data and AI assets. The df-spark integration provides REST endpoints for browsing the Unity Catalog hierarchy:

List Catalogs:

```http
GET /api/v2/databricks/_catalog
```

Response:

```json
{
  "resource": [
    {"catalog": "main"},
    {"catalog": "samples"},
    {"catalog": "hive_metastore"},
    {"catalog": "system"}
  ]
}
```

List Schemas in Catalog:

```http
GET /api/v2/databricks/_catalog/samples
```

Response:

```json
{
  "resource": [
    {"schema": "nyctaxi"},
    {"schema": "tpch"},
    {"schema": "retail"}
  ]
}
```

List Tables in Schema:

```http
GET /api/v2/databricks/_catalog/samples/nyctaxi
```

Response:

```json
{
  "resource": [
    {"table": "trips", "type": "table", "database": "nyctaxi"}
  ]
}
```
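One way to back the three `_catalog` levels is with Spark SQL's SHOW statements. The test log in this article confirms `SHOW CATALOGS`; mapping the deeper levels to `SHOW SCHEMAS IN` and `SHOW TABLES IN`, and the helper names, are assumptions for illustration. Each path segment is backtick-quoted (with embedded backticks doubled) so that a crafted URL segment cannot inject SQL.

```python
def quote_ident(name: str) -> str:
    """Backtick-quote a Spark SQL identifier, escaping embedded backticks."""
    return "`" + name.replace("`", "``") + "`"


def catalog_path_to_sql(path: str) -> str:
    """Translate a _catalog sub-path into the statement that lists its children.

    Hypothetical mapping: "" -> catalogs, "<catalog>" -> schemas,
    "<catalog>/<schema>" -> tables.
    """
    segments = [s for s in path.strip("/").split("/") if s]
    if not segments:
        return "SHOW CATALOGS"
    if len(segments) == 1:
        return f"SHOW SCHEMAS IN {quote_ident(segments[0])}"
    if len(segments) == 2:
        catalog, schema = segments
        return f"SHOW TABLES IN {quote_ident(catalog)}.{quote_ident(schema)}"
    raise ValueError(f"unexpected catalog path: {path!r}")


for p in ("", "samples", "samples/nyctaxi"):
    print(repr(p), "->", catalog_path_to_sql(p))
```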

Unity Catalog Permission Enforcement

When use_unity_catalog is enabled in the service configuration, df-spark integrates with Unity Catalog's permission model:

- Action Mapping: DreamFactory HTTP verbs map to Unity Catalog privileges (GET → SELECT, POST/PATCH/DELETE → MODIFY)

- Permission Verification: Unity Catalog API is queried before executing operations to verify user access

- Fine-Grained Control: Supports row-level and column-level security configured in Unity Catalog

- User Context Preservation: Queries execute with the appropriate user identity for audit trails

Organizations can choose between DreamFactory's native RBAC system (use_unity_catalog: false) for centralized API management or Unity Catalog enforcement (use_unity_catalog: true) for data governance aligned with existing Databricks policies.
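The verb-to-privilege mapping above can be expressed as a lookup plus a check. In this sketch, `granted` is assumed to be the caller's effective privileges on the target table, obtained from Unity Catalog by whatever means the deployment uses; the function and constant names are hypothetical. Note that Unity Catalog also requires USE CATALOG and USE SCHEMA on the parent objects, which a complete check would verify as well.

```python
# HTTP verb -> Unity Catalog privilege, following the mapping described above.
VERB_TO_PRIVILEGE = {
    "GET": "SELECT",
    "POST": "MODIFY",
    "PATCH": "MODIFY",
    "DELETE": "MODIFY",
}


def is_request_allowed(method: str, granted: set) -> bool:
    """Check whether a caller's table privileges cover the requested HTTP verb.

    `granted` is assumed to be the caller's effective privileges on the target
    table; fetching it from Unity Catalog is out of scope for this sketch.
    """
    required = VERB_TO_PRIVILEGE.get(method.upper())
    if required is None:
        return False  # deny verbs with no mapping
    return required in granted or "ALL PRIVILEGES" in granted


print(is_request_allowed("PATCH", {"SELECT"}))  # → False
```

Denying unmapped verbs by default keeps the check fail-closed if a new HTTP method is ever routed to the service.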

4. Type Handling and Data Serialization

The Python bridge implements comprehensive type serialization for Spark SQL data types:

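```python
import base64
from datetime import date, datetime
from decimal import Decimal


def serialize_value(val):
    """Convert a Spark SQL value into a JSON-serializable Python value."""
    if isinstance(val, (datetime, date)):
        return val.isoformat()  # e.g. "2025-01-21T10:00:00+00:00" for a UTC timestamp
    elif isinstance(val, Decimal):
        return float(val)  # JSON-friendly, but may round beyond ~15 significant digits
    elif isinstance(val, (bytes, bytearray)):
        # BinaryType can hold arbitrary bytes; base64 is safer than decode('utf-8')
        return base64.b64encode(val).decode("ascii")
    return val
```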

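This ensures that complex Spark types (TimestampType, DecimalType, BinaryType) serialize to valid JSON. Converting DecimalType values to floats can, however, round values with more than about 15 significant digits, so high-precision columns such as currency may be safer returned as strings. The integration has been tested with real-world datasets, including the Databricks sample dataset samples.nyctaxi.trips, which contains datetime, decimal, and string fields.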
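Another way to apply these kinds of conversions to a whole result set is the `default` hook of `json.dumps`, which the encoder calls only for values it cannot handle natively. The sketch below wraps rows in the `resource`/`row_count` envelope used by the `_query` responses in this article; `rows_to_response` is a hypothetical name, `Decimal` stands in for DecimalType columns, and binary values are base64-encoded because arbitrary bytes are not guaranteed to be valid UTF-8.

```python
import base64
import json
from datetime import date, datetime, timezone
from decimal import Decimal


def _json_default(val):
    """Called by json.dumps only for values it cannot encode natively."""
    if isinstance(val, (datetime, date)):
        return val.isoformat()
    if isinstance(val, Decimal):
        return float(val)  # may round beyond ~15 significant digits
    if isinstance(val, (bytes, bytearray)):
        return base64.b64encode(val).decode("ascii")
    raise TypeError(f"Object of type {type(val).__name__} is not JSON serializable")


def rows_to_response(columns, rows) -> str:
    """Zip column names with row tuples and wrap them in a resource/row_count envelope."""
    records = [dict(zip(columns, row)) for row in rows]
    return json.dumps({"resource": records, "row_count": len(records)}, default=_json_default)


sample = rows_to_response(
    ["tpep_pickup_datetime", "fare_amount", "pickup_zip"],
    [(datetime(2016, 2, 13, 21, 47, 53, tzinfo=timezone.utc), Decimal("8.0"), 10103)],
)
print(sample)
```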

Use Cases (SQL Warehouse connections): Business intelligence dashboards, SQL-based reporting, interactive query workloads, and data exploration tools.

Databricks All-Purpose Cluster Configuration

All-purpose clusters provide full Spark capabilities via Spark Connect:

```json
{
  "name": "databricks_cluster",
  "label": "Databricks All-Purpose Cluster",
  "type": "databricks",
  "config": {
    "workspace_url": "https://dbc-4420a00f-0690.cloud.databricks.com",
    "cluster_id": "0123-456789-abcdefgh",
    "access_token": "dapi-your-access-token",
    "catalog": "hive_metastore",
    "schema": "default",
    "timeout": 180
  }
}
```

Use Cases: Machine learning pipelines, complex Spark DataFrame operations, streaming data processing, and advanced analytics requiring full Spark API access.
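SparkConnector is described as validating workspace URLs, access tokens, and cluster identifiers before it calls the bridge. The checks below are a hypothetical Python rendering of that idea (the real component is PHP); the rules, such as rejecting plain `http://` URLs and requiring either `http_path` or `cluster_id` for Databricks, are assumptions modeled on the example configurations in this article.

```python
from urllib.parse import urlparse


def validate_service_config(config: dict) -> list:
    """Return a list of problems with a df-spark-style service config (empty list = OK).

    Hypothetical checks modeled on the example configs in this article.
    """
    errors = []
    scheme = urlparse(config.get("workspace_url", "")).scheme
    if scheme not in ("https", "sc"):
        errors.append("workspace_url must use https:// (Databricks) or sc:// (Spark Connect)")
    if not config.get("access_token"):
        errors.append("access_token is required")
    if scheme == "https" and not (config.get("http_path") or config.get("cluster_id")):
        errors.append("Databricks services need http_path (SQL Warehouse) or cluster_id (cluster)")
    if "timeout" in config:
        timeout = config["timeout"]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(timeout, bool) or not isinstance(timeout, int) or timeout <= 0:
            errors.append("timeout must be a positive number of seconds")
    return errors


print(validate_service_config({"workspace_url": "http://spark.internal", "timeout": 0}))
```

Returning every problem at once, instead of stopping at the first, lets an admin UI show all configuration errors in a single pass.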

Open-Source Apache Spark Configuration

The df-spark connector also supports self-managed Spark deployments:

```json
{
  "name": "spark_cluster",
  "label": "Production Spark Cluster",
  "type": "spark",
  "config": {
    "workspace_url": "sc://spark-master.internal:15002",
    "access_token": "spark-auth-token",
    "catalog": "default",
    "schema": "analytics",
    "timeout": 120
  }
}
```
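On the Spark Connect path, PySpark connects through a connection string passed to `SparkSession.builder.remote()`. The sketch below assumes the service-config fields shown above: an open-source server's `sc://` URL is used as given, while a Databricks all-purpose cluster is addressed as `sc://<workspace-host>:443/` with `token` and `x-databricks-cluster-id` parameters, the form used in Databricks Connect examples. The helper name is hypothetical. Per the Spark Connect connection-string documentation, supplying `token` also enables TLS, so the server must accept TLS connections.

```python
from urllib.parse import urlparse


def build_spark_connect_url(config: dict) -> str:
    """Build a Spark Connect connection string (sc://host:port/;key=value;...).

    Hypothetical helper based on this article's service-config fields.
    """
    parsed = urlparse(config["workspace_url"])
    if parsed.scheme == "sc":
        base = f"sc://{parsed.netloc}/"          # open-source Spark Connect server
        params = []
    else:
        base = f"sc://{parsed.hostname}:443/"    # Databricks workspace over HTTPS port
        params = [f"x-databricks-cluster-id={config['cluster_id']}"]
    if config.get("access_token"):
        params.insert(0, f"token={config['access_token']}")
    return base + "".join(f";{p}" for p in params)


# Usage (requires a Spark Connect client, e.g. pyspark[connect] >= 3.4;
# for Databricks clusters the databricks-connect package is the usual client):
# from pyspark.sql import SparkSession
# spark = SparkSession.builder.remote(build_spark_connect_url(config)).getOrCreate()
# spark.sql("SELECT 1").show()
```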

Real-World Testing and Production Readiness

End-to-End Testing Results

The df-spark integration has undergone comprehensive end-to-end testing with production Databricks credentials:

Test Case 1: Connection Validation

Command: python3 spark_connect.py test '{"workspace_url":"...","http_path":"...","access_token":"..."}'

Result: ✅ SUCCESS

```json
{
  "success": true,
  "message": "SQL Warehouse connection successful",
  "connection_type": "sql_warehouse",
  "test_result": 1
}
```

Latency: ~15 seconds (initial connection)

Test Case 2: Unity Catalog Discovery

Command: SHOW CATALOGS

Result: ✅ SUCCESS

Catalogs Discovered: 4

- main (Unity Catalog production)

- samples (Databricks sample datasets)

- hive_metastore (legacy Hive metastore)

- system (Databricks system tables)

Latency: ~1 second

Test Case 3: Real Data Retrieval

Command: SELECT * FROM samples.nyctaxi.trips LIMIT 3

Result: ✅ SUCCESS

Records Retrieved: 3

Fields: tpep_pickup_datetime, tpep_dropoff_datetime, trip_distance, fare_amount, pickup_zip, dropoff_zip

Sample Data:

```json
{
  "tpep_pickup_datetime": "2016-02-13T21:47:53+00:00",
  "tpep_dropoff_datetime": "2016-02-13T21:57:15+00:00",
  "trip_distance": 1.4,
  "fare_amount": 8.0,
  "pickup_zip": 10103,
  "dropoff_zip": 10110
}
```

Latency: ~2 seconds

Test Case 4: Complex Aggregation Query

Command: SELECT passenger_count, AVG(fare_amount) as avg_fare, COUNT(*) as trip_count FROM samples.nyctaxi.trips GROUP BY passenger_count ORDER BY avg_fare DESC

Result: ✅ SUCCESS

Aggregation Performance: ~8 seconds

Data Accuracy: Verified against Databricks SQL editor results

Type Serialization: Decimal values correctly converted to floats

Performance Characteristics

SQL Warehouse Performance:

- Initial connection: 10-20 seconds

- Simple queries (SHOW, SELECT with filter): 1-3 seconds

- Complex aggregations: 5-10 seconds

- Large result sets (1000+ rows): 10-20 seconds

Spark Connect Performance (Expected):

- Initial cluster startup: 30-60 seconds

- Query execution: Similar to SQL Warehouse once connected

- DataFrame operations: Full Spark distributed performance

Comparing df-spark to Alternative Approaches

DreamFactory Spark Integration (df-spark) vs Custom API Development

Custom API Approach:

- Development Effort: 4-8 weeks for basic REST endpoints with authentication, 12-16 weeks for comprehensive API with RBAC, rate limiting, and documentation

- Technology Stack: Requires selecting framework (Flask, Express, Spring Boot), implementing Spark connectivity logic, designing REST endpoint structure, and building authentication/authorization layer

- Maintenance Burden: Ongoing maintenance for security patches, dependency updates, Spark version compatibility, and API documentation updates

- Flexibility: Complete control over API design and implementation, custom business logic integration, and specialized optimization opportunities

df-spark Approach:

- Development Effort: 1-2 hours for initial configuration, immediate REST endpoint availability

- Technology Stack: Pre-built integration following DreamFactory patterns, standardized REST API conventions, and proven authentication mechanisms

- Maintenance Burden: Package updates via composer, DreamFactory team maintains core connector, and community-driven improvements

- Flexibility: Configurable via DreamFactory admin UI, limited to DreamFactory's API patterns, and extensible through scripting service

Recommendation: DreamFactory Spark Integration (df-spark) is ideal for organizations that need standard REST API access to Spark data quickly with minimal development effort. Custom API development makes sense when unique API patterns are required, complex business logic must be embedded in the API layer, or specialized performance optimizations are necessary.

DreamFactory Spark Integration (df-spark) vs Direct Spark Connectivity

Direct Spark Connectivity (Client Applications):

- Performance: Lowest latency (no API layer overhead), direct gRPC or JDBC connections, optimal for high-throughput scenarios

- Complexity: Requires client-side Spark libraries (PySpark, Spark SQL JDBC), connection management in each application, and credential distribution across clients

- Security: Each application has direct database access, requires network-level security (VPN, firewalls), and complex credential rotation

- Use Cases: Batch processing jobs, data science notebooks, ETL pipelines, and internal analytical tools

DreamFactory Spark Integration (df-spark) (REST API Layer):

- Performance: Additional latency (50-200ms API overhead), HTTP/HTTPS transport, suitable for most interactive applications

- Complexity: Simple HTTP client (curl, fetch, axios), no Spark-specific libraries required, and language-agnostic access

- Security: Centralized API gateway controls access, single authentication point (DreamFactory API keys), and standardized rate limiting and audit logging

- Use Cases: Web applications, mobile apps, third-party integrations, and microservices architectures

Recommendation: Use direct Spark connectivity for internal batch processing and data science workflows where performance is critical. Use df-spark for application-facing APIs where security, standardization, and ease of integration outweigh minor latency costs.

DreamFactory Spark Integration (df-spark) vs Databricks SQL API

Databricks provides its own SQL API for querying data. How does df-spark compare?

Databricks SQL API:

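- API Design: Statement Execution API (submit a statement, optionally wait up to a timeout for inline results, otherwise poll for results using the statement ID), which makes longer-running queries asynchronous and requires statement ID tracking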

- Authentication: Databricks token-based authentication only, no additional access control layer

- Features: Native Databricks integration, optimal performance for Databricks environments, and supports Databricks-specific features (DBFS, Widgets)

- Limitations: Databricks-only (no open-source Spark support), no automatic CRUD endpoint generation, requires custom code for REST patterns

df-spark:

- API Design: Standard REST patterns (GET, POST, PATCH, DELETE), synchronous request/response, and automatic endpoint generation

- Authentication: DreamFactory RBAC system with role-based permissions, API key management, and optional Unity Catalog integration

- Features: Works with both Databricks and open-source Spark, automatic table endpoint generation, and Unity Catalog browsing capabilities

- Limitations: Additional API layer (slight latency increase) and requires DreamFactory platform

Recommendation: Databricks SQL API is appropriate for Databricks-centric applications with asynchronous query patterns and teams comfortable with polling-based APIs. DreamFactory Spark Integration (df-spark) is better suited for organizations needing standard REST patterns, Spark support beyond Databricks (including self-managed open-source clusters), automatic API generation without custom code, and centralized API governance across multiple data sources.

Security Considerations and Best Practices

Authentication and Authorization Architecture

The DreamFactory Spark Integration (df-spark) implements defense-in-depth security with multiple layers:

Layer 1: DreamFactory API Key Authentication

- All API requests require valid X-DreamFactory-API-Key header

- API keys are associated with DreamFactory roles defining permissions

- Supports multiple authentication methods (API keys, OAuth, JWT, SAML, LDAP)

Layer 2: DreamFactory Role-Based Access Control

- Roles define which services, endpoints, and operations are permitted

- Supports field-level filtering (hide sensitive columns)

- Configurable rate limiting per role

Layer 3: Databricks Authentication

- Access tokens or service principal credentials stored encrypted

- Tokens never exposed in API responses or client-facing logs

- Supports Databricks token expiration and rotation

Layer 4: Unity Catalog Permissions (Optional)

- When use_unity_catalog: true, Unity Catalog permissions are enforced

- Users can only access data they have Unity Catalog privileges for

- Provides data governance aligned with organizational policies

Production Security Checklist

Token Management:

- Use service principal credentials instead of personal access tokens in production

- Rotate tokens at least every 90 days (automate via the Databricks API)

- Store tokens in DreamFactory encrypted configuration (never in version control)

- Monitor token usage via Databricks audit logs

Network Security:

- Enforce HTTPS for all API communications (disable HTTP)

- Restrict DreamFactory network access via firewall rules

- Use private endpoints for Databricks connectivity where available

- Implement IP allowlisting for sensitive services

Access Control:

- Follow principle of least privilege (grant minimal necessary permissions)

- Enable Unity Catalog integration for data governance

- Use DreamFactory role-based access control for API-level restrictions

- Implement rate limiting to prevent abuse (100 requests/minute recommended baseline)

Audit and Monitoring:

- Enable DreamFactory audit logging for all API requests

- Monitor Databricks query history for suspicious activity

- Review Unity Catalog audit logs regularly

- Set up alerts for failed authentication attempts
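The 90-day rotation item in the checklist above is easy to monitor with a periodic job. The sketch assumes you keep an inventory of token labels and creation times (the labels and the `tokens_due_for_rotation` helper are hypothetical); it only flags overdue tokens and does not call any Databricks API.

```python
from datetime import datetime, timedelta, timezone


def tokens_due_for_rotation(created_at: dict, now: datetime, max_age_days: int = 90) -> list:
    """Return labels of tokens at least max_age_days old, oldest first.

    `created_at` maps a (hypothetical) token label to its timezone-aware creation time.
    """
    cutoff = now - timedelta(days=max_age_days)
    overdue = sorted((created, label) for label, created in created_at.items() if created <= cutoff)
    return [label for _, label in overdue]


inventory = {
    "df-databricks-prod": datetime(2025, 1, 15, tzinfo=timezone.utc),
    "df-partner-a": datetime(2025, 5, 1, tzinfo=timezone.utc),
}
print(tokens_due_for_rotation(inventory, now=datetime(2025, 6, 1, tzinfo=timezone.utc)))
# → ['df-databricks-prod']
```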

Use Cases and Deployment Scenarios

Business Intelligence Dashboard Integration

Scenario: A retail organization uses Databricks for data warehousing with sales, inventory, and customer data in Delta Lake tables. The business intelligence team needs to build web-based dashboards without direct Databricks access.

DreamFactory Spark Integration (df-spark) Solution:

- Configure df-spark service pointing to Databricks SQL Warehouse

- Automatic REST endpoints generated for sales_daily, inventory_current, customer_segments tables

- Dashboard frontend (React, Vue, Angular) calls /api/v2/databricks/_table/sales_daily?filter=date>'2025-01-01'

- DreamFactory handles authentication, rate limiting, and caching

- No custom API code required

Benefits: Rapid dashboard development, no Databricks expertise required for frontend developers, centralized access control, and consistent REST API patterns.

Mobile Application Backend

Scenario: A logistics company tracks shipment data in Delta Lake and needs a mobile app for drivers to query shipment status and update delivery confirmations.

DreamFactory Spark Integration (df-spark) Solution:

- Configure df-spark with Unity Catalog enabled

- Create DreamFactory role "driver" with permissions: GET on shipments table (filter: driver_id = {user.id}), PATCH on shipments table for status updates only

- Mobile app authenticates once and receives API key

- Simple REST calls: GET /api/v2/databricks/_table/shipments?filter=driver_id=12345, PATCH /api/v2/databricks/_table/shipments?filter=shipment_id=XYZ123

Benefits: Mobile-friendly REST API, role-based security preventing unauthorized data access, server-side response caching in DreamFactory, and no backend development required.
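The driver role's record filter (`driver_id = {user.id}`) works because the gateway, not the client, supplies the user's ID and ANDs the role's condition onto the request. The sketch below illustrates that idea only; the placeholder substitution and the `apply_role_filter` helper are assumptions, not DreamFactory's code. It uses plain string composition for readability, whereas a production gateway should parse and validate client filters, since a crafted filter string could otherwise escape the parentheses.

```python
def apply_role_filter(user_filter: str, role_filter_template: str, user_id: int) -> str:
    """Combine a client-supplied filter with a role's mandatory record filter.

    Conceptual sketch only: {user.id} mirrors the role example above, and the
    substitution/AND-ing logic is an assumption, not DreamFactory's implementation.
    """
    # Substitute the authenticated user's ID server-side; int() rejects non-numeric IDs.
    role_filter = role_filter_template.replace("{user.id}", str(int(user_id)))
    if not user_filter.strip():
        return f"({role_filter})"
    # AND the role filter onto the client's filter, so it can only narrow results.
    return f"({role_filter}) AND ({user_filter})"


print(apply_role_filter("status = 'pending'", "driver_id = {user.id}", 12345))
# → (driver_id = 12345) AND (status = 'pending')
```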

Third-Party Integration

Scenario: A fintech company stores transaction data in Delta Lake and needs to provide partner organizations with API access to specific datasets for reconciliation and reporting.

DreamFactory Spark Integration (df-spark) Solution:

- Create separate DreamFactory services for each partner with different access_token credentials

- Configure partner-specific roles limiting access to designated schemas (partner_a.transactions, partner_b.transactions)

- Enable rate limiting (100 requests/hour) and audit logging

- Provide partners with OpenAPI documentation auto-generated by DreamFactory

Benefits: Multi-tenant access control, granular permissions per partner, comprehensive audit trail for compliance, and standardized API documentation.

Real-Time Analytics API

Scenario: A streaming data platform ingests IoT sensor data into Delta Lake and needs to expose real-time query capabilities to internal microservices for anomaly detection.

DreamFactory Spark Integration (df-spark) Solution:

- Configure df-spark with Spark Connect to all-purpose cluster

- Microservices query latest sensor data: POST /api/v2/databricks/_query with SQL: "SELECT * FROM iot.sensors WHERE timestamp > CURRENT_TIMESTAMP() - INTERVAL 5 MINUTES"

- Enable DreamFactory caching for frequently-accessed queries

- Use Unity Catalog permissions to restrict microservice access by sensor type

Benefits: Interactive query latency once the cluster is running, parameterized queries that prevent SQL injection, microservices that remain database-agnostic, and horizontal scaling via additional DreamFactory instances.
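The caching suggestion in this scenario amounts to reusing a result for a short time-to-live instead of re-running the same Spark query. The class below is a conceptual, in-process illustration keyed on the SQL text and parameters; it is not DreamFactory's cache implementation, and the 30-second TTL, fake clock, and dummy sensor row are arbitrary demo choices. For a five-minute sliding-window query, the TTL bounds how stale an answer a microservice can receive.

```python
import time
from typing import Callable, Dict, Hashable, Tuple


class QueryResultCache:
    """Time-to-live cache keyed by (sql, params): a conceptual stand-in for gateway caching."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries: Dict[Hashable, Tuple[float, object]] = {}

    def get_or_run(self, sql: str, params: tuple, run: Callable[[], object]) -> object:
        key = (sql, tuple(params))
        now = self.clock()
        cached = self._entries.get(key)
        if cached is not None and now - cached[0] < self.ttl:
            return cached[1]          # still fresh: skip the round trip to Spark
        result = run()                # miss or expired: execute and remember
        self._entries[key] = (now, result)
        return result


# Demo with a fake clock so the expiry is deterministic.
calls = []


def run_query():
    calls.append("executed")
    return [{"sensor_id": "s-1", "reading": 42.0}]  # dummy row


ticks = iter([0.0, 10.0, 40.0])
cache = QueryResultCache(ttl_seconds=30, clock=lambda: next(ticks))
sql = "SELECT * FROM iot.sensors WHERE timestamp > CURRENT_TIMESTAMP() - INTERVAL 5 MINUTES"
first = cache.get_or_run(sql, (), run_query)   # t=0: runs the query
second = cache.get_or_run(sql, (), run_query)  # t=10: served from cache
third = cache.get_or_run(sql, (), run_query)   # t=40: expired, runs again
```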

When to Choose DreamFactory df-spark Integration

Ideal Use Cases

The DreamFactory Spark Integration (df-spark) is particularly well-suited for:

- Rapid API Development: Organizations needing REST APIs for Spark data within hours rather than weeks

- Multi-Data-Source Architectures: Environments where Spark is one of many data sources (SQL databases, NoSQL, SaaS APIs) requiring unified API access patterns

- Application Integration: Web and mobile applications requiring simple REST endpoints rather than complex Spark client libraries

- Databricks Customers: Organizations already using Databricks seeking to expose Delta Lake and Unity Catalog via REST without custom development

- API Governance: Enterprises requiring centralized authentication, authorization, rate limiting, and audit logging across all data APIs

- Third-Party Access: Scenarios where external partners or customers need controlled access to Spark data

When to Consider Alternatives

DreamFactory Spark Integration (df-spark) may not be the best fit for:

- Internal Batch Processing: ETL jobs and data pipelines where direct Spark connectivity provides better performance

- Data Science Notebooks: Analytical workloads where data scientists work directly in Jupyter/Databricks notebooks

- Ultra-Low-Latency Requirements: Applications requiring sub-50ms response times where API layer overhead is unacceptable

- Complex Spark Features: Use cases requiring UDFs, MLlib models, or Structured Streaming not expressible via REST

- Highly Customized APIs: Projects requiring non-standard API patterns, complex business logic in API layer, or specialized response formats

Conclusion

The DreamFactory df-spark integration represents a production-ready solution for organizations seeking to expose Apache Spark and Databricks data through standardized REST APIs without extensive custom development. By implementing a plug-and-play architecture that supports both SQL Warehouses and Spark Connect protocols, df-spark enables rapid API deployment while maintaining enterprise-grade security, authentication, and access control.

The hybrid connection strategy provides flexibility to optimize for either fast SQL analytics (SQL Warehouse path) or full Spark capabilities (Spark Connect path) based on workload requirements. Integration with Unity Catalog ensures that data governance policies established in Databricks are respected at the API layer, while DreamFactory's RBAC system provides an additional security layer for API-specific access control.

For organizations already using DreamFactory as their API management platform, df-spark extends consistent REST API patterns to Spark data sources alongside existing SQL databases, NoSQL stores, and SaaS integrations. For organizations evaluating API options for Databricks deployments, df-spark offers significant time and cost savings compared to custom API development while maintaining production-ready reliability, comprehensive testing, and ongoing maintenance through the DreamFactory ecosystem.

The integration has been validated through end-to-end testing with real Databricks credentials and datasets, demonstrating functional query execution, Unity Catalog discovery, and proper type serialization for production workloads. With SQL Warehouse support marked as production-ready and Spark Connect implementation code-complete, df-spark provides a comprehensive foundation for REST API access to the modern data lakehouse architecture.

FAQs

1: What is DreamFactory’s df-spark integration and why is it useful?

DreamFactory’s df-spark integration is a plug-and-play connector that automatically generates REST APIs for Apache Spark and Databricks, including SQL Warehouses, Delta Lake tables, and Unity Catalog. It removes the need for custom API coding, simplifies authentication, and provides built-in security, RBAC, rate-limiting, and audit logging. This allows organizations to expose Spark data through standard REST endpoints within hours instead of weeks of custom development.

2: How does df-spark connect to Spark and Databricks?

The integration uses a hybrid connection strategy that automatically chooses the correct protocol based on your configuration. It supports Databricks SQL Warehouses via the databricks-sql-connector for fast SQL workloads and Spark Connect (gRPC) for full Spark capabilities on all-purpose clusters or open-source Spark. A Python bridge handles protocol selection, query execution, and type serialization, making the connection seamless and flexible.

3: What REST API capabilities does df-spark provide?

df-spark automatically creates REST endpoints for Delta Lake tables with full CRUD operations, supports parameterized SQL queries through a dedicated _query endpoint, and exposes Unity Catalog for browsing catalogs, schemas, and tables. These APIs use DreamFactory’s standard patterns for filtering, sorting, pagination, and secure access, providing a ready-made API layer for Spark data without any custom development.

Additional Resources

- DreamFactory Documentation: https://docs.dreamfactory.com/

- Get Started with DreamFactory: https://dreamfactory.com/demo

- Apache Spark Documentation: https://spark.apache.org/docs/latest/

- Databricks Documentation: https://docs.databricks.com/

- Unity Catalog Documentation: https://docs.databricks.com/data-governance/unity-catalog/

- Spark Connect Protocol: https://spark.apache.org/docs/latest/spark-connect-overview.html

Kevin McGahey is an accomplished solutions engineer and product lead with expertise in API generation, microservices, and legacy system modernization, as demonstrated by his successful track record of facilitating the modernization of legacy databases for numerous public sector organizations.
