Cross-database queries with REST APIs make it easier to access and analyze data stored in multiple databases without physically moving it. Here's why this matters:

- What it Does: It allows you to query different databases (e.g., PostgreSQL, MongoDB, MySQL) through a single interface.

- Why it Works: REST APIs put a standard HTTP interface in front of each database, so one client can read from and write to every source the same way, with authentication and access control enforced in a single layer.


Key Benefits:

- Simplifies data access across systems.

- Reduces the need for complex ETL pipelines.

- Supports real-time analytics and secure data access.

Challenges include performance issues, data format inconsistencies, and security risks. But with proper setup - secure connections, schema mapping, and optimized REST endpoints - you can overcome these hurdles.

Tools like DreamFactory automate API generation, saving time and ensuring security with features like role-based access control and server-side scripting.

If you're managing data across multiple databases, cross-database queries combined with REST APIs can streamline operations and improve efficiency.


Setting Up Cross-Database Queries with REST APIs

Setting up cross-database queries comes down to three main steps: securely connecting your databases, designing efficient REST endpoints, and properly mapping your schemas. Breaking the process into these steps makes it much easier to manage.

Configuring Database Connections

The first step in any cross-database setup is establishing connections to your data sources. Configuration files, often in formats like YAML or JSON, are a great way to manage these connections. They keep credentials organized and make updates simpler without needing to modify your application code.

For each database, you'll need to define details such as a unique identifier, database type, connection URL, username, and password. For example, a YAML file might describe a PostgreSQL connection with the URL jdbc:postgresql://localhost:5432/homidb, username root, and password @Kolkata84 (a sample value only; as noted below, real passwords belong in environment variables or a secrets vault, not in the file itself). A second database, such as MySQL, would have its own unique configuration details.

Security is incredibly important at this stage. Always use SSL/TLS encryption for connections, especially over public networks. Store sensitive credentials in environment variables or secure vaults rather than embedding them in your code. Implement role-based access control to ensure each connection only has the permissions it needs.
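Here is a minimal Python sketch of such a connection file, under a few assumptions: it uses JSON rather than YAML so that only the standard library is needed, and each entry carries a hypothetical `password_env` field naming the environment variable that holds the password, so the secret never appears in the file. The `orders_mysql` entry and its URL are invented for illustration.

```python
import json
import os
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DbConnection:
    """One resolved entry from the connection file."""
    conn_id: str
    db_type: str
    url: str
    username: str
    password: str = field(repr=False)  # keep the secret out of reprs and logs


def load_connections(config_text: str) -> dict:
    """Parse a JSON connection file and resolve each password from the environment.

    The file stores only the *name* of the environment variable
    (the hypothetical "password_env" field), never the secret itself.
    """
    connections = {}
    for entry in json.loads(config_text)["databases"]:
        env_name = entry["password_env"]
        password = os.environ.get(env_name)
        if password is None:
            # Fail at startup rather than with a confusing login error at query time.
            raise KeyError(f"environment variable {env_name} is not set for {entry['id']}")
        connections[entry["id"]] = DbConnection(
            conn_id=entry["id"],
            db_type=entry["type"],
            url=entry["url"],
            username=entry["username"],
            password=password,
        )
    return connections


# Example file contents; the MySQL entry is invented for illustration.
CONFIG = """
{
  "databases": [
    {"id": "sales_pg", "type": "postgresql",
     "url": "jdbc:postgresql://localhost:5432/homidb",
     "username": "root", "password_env": "SALES_PG_PASSWORD"},
    {"id": "orders_mysql", "type": "mysql",
     "url": "jdbc:mysql://localhost:3306/orders",
     "username": "reporting", "password_env": "ORDERS_MYSQL_PASSWORD"}
  ]
}
"""
```

Loading fails immediately when a variable is missing, which is easier to diagnose than a failed login later on.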

"Open-source DB2Rest can allow your frontend to access your multiple separate databases where DB2Rest automatically exposes a safe and secure REST API to easily query, join, or push data records to store into your databases."

- Thad Guidry, Web Developer/Database Admin

Restricting schema access is another good practice. This limits which database objects and tables are accessible through your API. Many tools designed for cross-database operations include built-in security features, such as TLS-based API access and authentication options like token-based methods, OAuth, or HTTP Basic authentication. Once your connections are set up, the next step is structuring REST endpoints to simplify multi-database queries.
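Restricting schema access can start with an explicit allowlist that is checked before any query is built. The sketch below assumes a hypothetical `EXPOSED_TABLES` mapping from connection IDs to the tables each connection may expose; a real deployment would typically pair it with database accounts that have narrow grants.

```python
# Hypothetical allowlist: the only tables each connection exposes through the API.
EXPOSED_TABLES = {
    "sales_pg": {"customers", "orders"},
    "orders_mysql": {"transactions"},
}


def resolve_table(connection_id: str, table: str) -> str:
    """Return `table` if it is exposed on `connection_id`; otherwise raise PermissionError.

    Because the check runs before any SQL is built, a request for an unlisted
    table (or an unknown connection) never reaches the database.
    """
    if table not in EXPOSED_TABLES.get(connection_id, set()):
        raise PermissionError(f"table {table!r} is not exposed on {connection_id!r}")
    return table
```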

Designing REST Endpoints for Queries

REST endpoints are the backbone of cross-database queries. To make them intuitive and effective, stick to established conventions while addressing the unique challenges of working with multiple databases. Use nouns to represent resources and standard HTTP methods to define actions.

Start with clear naming conventions. Use plural nouns for collections (like /orders) and address individual items by their identifier within that collection (like /orders/{id}). Pick one style for multi-word names, such as kebab-case or snake_case, and apply it across all endpoints. Path parameters identify specific resources, while query parameters handle sorting, filtering, and pagination.

Your endpoints should hide the complexity of pulling data from multiple sources. For instance, an endpoint like /api/customer-analytics could combine customer data from PostgreSQL, transaction records from MySQL, and behavioral insights from MongoDB.
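A handler for an aggregating endpoint like /api/customer-analytics might look roughly like this. It is a sketch: the three `fetch_*` functions are hypothetical stand-ins returning fixed data where real code would call PostgreSQL, MySQL, and MongoDB clients. Querying the sources concurrently with a thread pool keeps the endpoint's latency close to that of the slowest source rather than the sum of all three.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical stand-ins for real database clients.
def fetch_profile_from_postgres(customer_id: int) -> dict:
    return {"id": customer_id, "name": "Ada"}


def fetch_transactions_from_mysql(customer_id: int) -> list:
    return [{"customer_id": customer_id, "amount": 120.0}]


def fetch_behavior_from_mongodb(customer_id: int) -> dict:
    return {"customer_id": customer_id, "sessions_last_30d": 14}


def customer_analytics(customer_id: int) -> dict:
    """Body of a /api/customer-analytics style handler.

    Queries the three sources concurrently and returns one combined document,
    so the client never needs to know where each piece of data lives.
    """
    with ThreadPoolExecutor(max_workers=3) as pool:
        profile = pool.submit(fetch_profile_from_postgres, customer_id)
        transactions = pool.submit(fetch_transactions_from_mysql, customer_id)
        behavior = pool.submit(fetch_behavior_from_mongodb, customer_id)
        # .result() re-raises any exception from the worker thread.
        return {
            "customer": profile.result(),
            "transactions": transactions.result(),
            "behavior": behavior.result(),
        }
```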

Performance is key here. Use pagination to handle large datasets and query parameters to filter and sort results, reducing the amount of data transferred. For example, an endpoint like /api/orders?status=completed&limit=50&offset=100 allows clients to request only the data they need.
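On the server, the query string from that example has to be parsed and bounded before it reaches any database. A minimal standard-library sketch follows; the default page size of 20 and the `MAX_LIMIT` cap of 100 are assumptions, not values from any particular framework.

```python
from urllib.parse import parse_qs, urlsplit

DEFAULT_LIMIT = 20  # assumed default page size
MAX_LIMIT = 100     # assumed server-side cap


def parse_list_params(url: str) -> dict:
    """Extract the status filter and paging parameters from a list-endpoint URL."""
    query = parse_qs(urlsplit(url).query)
    limit = int(query.get("limit", [str(DEFAULT_LIMIT)])[0])  # int() raises ValueError on junk
    offset = int(query.get("offset", ["0"])[0])
    if limit < 1 or offset < 0:
        raise ValueError("limit must be >= 1 and offset must be >= 0")
    return {
        "status": query.get("status", [None])[0],
        # Clamp the page size so one request cannot pull an entire table.
        "limit": min(limit, MAX_LIMIT),
        "offset": offset,
    }


print(parse_list_params("/api/orders?status=completed&limit=50&offset=100"))
# {'status': 'completed', 'limit': 50, 'offset': 100}
```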

Security should be a priority in endpoint design. Serve all APIs over HTTPS, and use strong authentication and authorization methods, such as OAuth. Validate and sanitize user inputs to guard against vulnerabilities like SQL injection and cross-site scripting.

Plan for API versioning early on as well. URL versioning (e.g., /api/v1/books), query parameter versioning, and header versioning all help manage changes while keeping existing clients compatible. Once your endpoints are ready, the next task is mapping database schemas for a unified data view.

Mapping Database Schemas

The final step in setting up cross-database queries is schema mapping. This process reconciles differences between databases, such as varying data types, naming conventions, and structures, to create a unified view of your data.

Start by building a unified data dictionary. This document should list all columns, data types, constraints, and relationships across your databases, serving as a single source of truth.

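Address data type differences carefully to ensure data integrity. For example, one database may store a timestamp as a native date type while another stores it as an ISO 8601 string or as epoch seconds, and one may keep customer IDs as integers while another keeps them as strings. Your schema mapping should convert each of these to a single canonical type before data from different sources is combined or compared.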
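A small normalization layer can handle this renaming and type coercion. The sketch assumes a hypothetical `FIELD_MAP` for one PostgreSQL source and one MongoDB source, and treats timestamps without a time zone as UTC, which you should confirm for your own data.

```python
from datetime import datetime, timezone

# Hypothetical mapping from each source's field names to the unified names.
FIELD_MAP = {
    "postgres": {"cust_id": "customer_id", "created_at": "created_at"},
    "mongodb": {"customerId": "customer_id", "createdAt": "created_at"},
}


def to_utc(value) -> datetime:
    """Normalize a datetime, an ISO 8601 string, or epoch seconds to an aware UTC datetime."""
    if isinstance(value, (int, float)):
        return datetime.fromtimestamp(value, tz=timezone.utc)
    dt = value if isinstance(value, datetime) else datetime.fromisoformat(value)
    if dt.tzinfo is None:
        # Assumption: naive timestamps are already in UTC. Verify this per source.
        return dt.replace(tzinfo=timezone.utc)
    return dt.astimezone(timezone.utc)


def normalize(source: str, record: dict) -> dict:
    """Rename a record's fields to the unified schema and coerce them to canonical types."""
    mapping = FIELD_MAP[source]
    out = {mapping[k]: v for k, v in record.items() if k in mapping}
    out["customer_id"] = int(out["customer_id"])
    out["created_at"] = to_utc(out["created_at"])
    return out
```

With this in place, a PostgreSQL row and a MongoDB document describing the same customer at the same instant normalize to equal dictionaries, which is what later joins and consistency checks rely on.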

If you're using tools that support external table definitions, you can map tables from different schemas or with different names across remote databases. Clauses like SCHEMA_NAME and OBJECT_NAME let you create mappings that act as aliases, allowing your queries to reference data uniformly.

Indexing is another critical part of schema mapping. Create secondary indexes for columns that are frequently used in sorting or filtering. For high-traffic APIs, consider a hybrid approach - normalize transactional data while denormalizing aggregates or data-heavy entities.

Finally, plan your constraints thoughtfully. Ensure related columns across systems share the same data type, and use descriptive names for tables and columns to avoid confusion during cross-database operations. Often, this process involves creating external data sources with defined connection details, enabling seamless querying across multiple systems while presenting a unified interface to consumers of your API.

Best Practices for Cross-Database Querying with REST APIs

Once you’ve got your cross-database setup running, it’s time to focus on best practices that ensure your system stays fast, secure, and reliable. These strategies help you avoid common headaches while keeping everything running smoothly, building on the setup steps discussed earlier.

Optimizing Query Performance

Getting the best performance out of your queries starts with smart database design and efficient query structures. One of the most effective steps is indexing. By creating indexes on columns you frequently filter, sort, or join, you can significantly cut down on query execution time, especially when working with large datasets.
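You can confirm that an index is actually used by reading the query plan. This sketch uses SQLite through Python's built-in `sqlite3` module because it needs no server; PostgreSQL and MySQL expose the same idea through their `EXPLAIN` statements. The `orders` table and index name are hypothetical.

```python
import sqlite3


def plan(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> str:
    """Return SQLite's query-plan description for a statement."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(str(row[-1]) for row in rows)  # the last column holds the plan detail


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, status TEXT)")
query = "SELECT id FROM orders WHERE customer_id = ?"

before = plan(conn, query, (42,))
conn.execute("CREATE INDEX idx_orders_customer_id ON orders (customer_id)")
after = plan(conn, query, (42,))
print("before:", before)
print("after: ", after)
```

Before the index exists, the plan reports a full scan of `orders`; afterwards it reports a search using `idx_orders_customer_id`. The exact wording of the plan text varies between SQLite versions.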

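Another performance booster is caching. By storing the results of frequently repeated queries, you can reduce server load and speed up response times, which users notice directly. Choose how long each result stays cached based on how stale that data is allowed to be, since a cached cross-database result will not reflect changes in the underlying databases until it expires.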
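The simplest form of result caching is an in-process map with an expiry time per entry. This is a sketch, not a production cache: it is not thread-safe and never evicts old keys, and the injectable `clock` parameter exists only to make it easy to test. A shared cache such as Redis is the usual choice once several API servers are involved.

```python
import time
from typing import Any, Callable


class TTLCache:
    """Tiny in-process cache: each entry expires `ttl_seconds` after it is stored."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries: dict[Any, tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get_or_compute(self, key, compute: Callable[[], Any]):
        now = self.clock()
        hit = self._entries.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]                  # fresh cached value: no database round trip
        value = compute()                  # miss or expired: run the real query
        self._entries[key] = (now + self.ttl, value)
        return value
```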

Handling large datasets? Pagination is your best friend. Breaking down results into smaller chunks using techniques like limit and offset reduces memory usage and network strain.

To keep things running smoothly, load balancing is essential. Distributing requests across multiple servers ensures better uptime and prevents any single server from becoming a bottleneck.

You can also improve efficiency by minimizing round trips between the client and server. Batch requests whenever possible, avoid unnecessary API calls, and design endpoints to deliver only the data you need. Compression methods like GZIP can further reduce payload sizes, speeding up data transfer.
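To get a feel for what GZIP does to a typical JSON response, the standard library's `gzip` module is enough. The rows below are made up, and actual savings depend on how repetitive your payloads are. In a real deployment, the web server or framework usually applies compression when the client sends `Accept-Encoding: gzip`.

```python
import gzip
import json

# A made-up page of 500 order rows, serialized the way an API response body would be.
rows = [{"id": i, "status": "completed", "region": "us-east"} for i in range(500)]
body = json.dumps(rows).encode("utf-8")

compressed = gzip.compress(body)
print(f"{len(body)} bytes -> {len(compressed)} bytes")

# The client reverses the encoding after seeing "Content-Encoding: gzip".
assert json.loads(gzip.decompress(compressed)) == rows
```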

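Finally, focus on query optimization. Avoid broad SELECT * statements and instead specify the exact columns you need. Write WHERE conditions the engine can match against an index (for example, compare an indexed column directly instead of wrapping it in a function), and check the execution plan rather than relying on the order in which conditions are written, since modern query optimizers, not the textual order of the conditions, decide which indexes are used.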

Security Considerations

Once performance is dialed in, securing your cross-database queries becomes the next priority. Start with strong authentication. Use methods like bearer tokens, OAuth, or JSON Web Tokens (JWT) to verify users before granting them access to databases.
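To show what verifying a JWT involves, here is a standard-library sketch for tokens signed with HS256 (HMAC-SHA256): pin the algorithm, recompute the signature and compare it in constant time, then check the `exp` claim. It is illustrative only; in production, use a maintained library such as PyJWT, which also handles asymmetric algorithms, audience and issuer checks, and clock-skew leeway.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(segment: str) -> bytes:
    # JWT segments are unpadded base64url; restore the padding before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def _signature(signing_input: str, secret: bytes) -> bytes:
    return hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()


def sign_hs256(claims: dict, secret: bytes) -> str:
    """Issue a token (handy for testing the verifier)."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    signing_input = f"{header}.{payload}"
    return f"{signing_input}.{_b64url_encode(_signature(signing_input, secret))}"


def verify_hs256(token: str, secret: bytes, now: Optional[float] = None) -> dict:
    """Return the token's claims if signature and expiry check out; otherwise raise ValueError."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, payload_b64, sig_b64 = parts
    header = json.loads(_b64url_decode(header_b64))
    # Pin the algorithm instead of trusting whatever the header says (e.g. "none").
    if header.get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    expected = _signature(f"{header_b64}.{payload_b64}", secret)
    if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):  # constant-time compare
        raise ValueError("bad signature")
    claims = json.loads(_b64url_decode(payload_b64))
    current = time.time() if now is None else now
    if "exp" in claims and claims["exp"] <= current:
        raise ValueError("token expired")
    return claims
```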

Implement Role-Based Access Control (RBAC) to manage permissions at a granular level. Assign specific roles to users, ensuring they only access the resources they’re authorized to use. This limits the risk of unauthorized data exposure.
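At its core, RBAC is a lookup from roles to permitted actions, with anything not explicitly granted denied. The role names and resource strings below are hypothetical.

```python
# Hypothetical role definitions: role -> set of (resource, action) pairs it may perform.
ROLE_PERMISSIONS = {
    "analyst": {("sales_pg/orders", "read"), ("mongo/customers", "read")},
    "support": {("mongo/customers", "read")},
    "admin": {
        ("sales_pg/orders", "read"), ("sales_pg/orders", "write"),
        ("mongo/customers", "read"), ("mongo/customers", "write"),
    },
}


def is_allowed(roles: list, resource: str, action: str) -> bool:
    """Grant access if any of the caller's roles carries the permission; deny by default."""
    return any((resource, action) in ROLE_PERMISSIONS.get(role, set()) for role in roles)
```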

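Always encrypt data in transit: serve the API over HTTPS and enable TLS on the connections between the API layer and each database, so credentials and query results never travel in plain text.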

To guard against injection attacks and data corruption, use input validation and sanitization. Validate incoming data against expected formats and sanitize inputs before processing them, especially when dealing with multiple databases that may have different vulnerabilities.
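For SQL databases, the most reliable defense against injection is to pass user input as bound parameters rather than splicing it into the query text. This sketch uses SQLite via `sqlite3` so it runs anywhere; the placeholder style differs between drivers (`?` here, `%s` in psycopg2, for example), and the `orders` table is hypothetical. It also names its columns explicitly instead of using `SELECT *`.

```python
import sqlite3


def find_orders_by_status(conn: sqlite3.Connection, status: str, limit: int, offset: int) -> list:
    """Run a filtered, paginated query with bound parameters.

    User-supplied values travel as parameters (the ? placeholders), never as part
    of the SQL text, so input such as "x' OR '1'='1" is matched as a literal string.
    """
    sql = (
        "SELECT id, customer_id, status FROM orders "
        "WHERE status = ? ORDER BY id LIMIT ? OFFSET ?"
    )
    return conn.execute(sql, (status, limit, offset)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, status TEXT)")
conn.executemany(
    "INSERT INTO orders (customer_id, status) VALUES (?, ?)",
    [(1, "completed"), (2, "pending"), (3, "completed")],
)
print(find_orders_by_status(conn, "x' OR '1'='1", 50, 0))  # [] : the injection attempt matches nothing
```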

Rate limiting is another key practice. By capping the number of requests a client can make within a set timeframe, you can prevent abuse, mitigate denial-of-service attacks, and maintain system stability during high-traffic periods.
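A token bucket is a common way to implement this kind of cap: each client gets a bucket that allows short bursts up to `capacity` and refills at a steady `rate` per second. The sketch keeps state in memory for a single process; with several API servers you would keep the counters in a shared store instead. The injectable `clock` is there only for testing.

```python
import time
from typing import Callable


class TokenBucket:
    """Per-client rate limiter: bursts up to `capacity`, refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill for the time elapsed since the last call, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # the caller should respond with HTTP 429 Too Many Requests
```

In a handler you would keep one bucket per API key, for example in a dict keyed by that key, and reject the request whenever `allow()` returns false.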

Store credentials and API keys securely. Avoid embedding them directly in your code. Instead, use environment variables or secure vaults to manage sensitive information like database connection strings and authentication tokens.

Lastly, conduct regular security testing. Run vulnerability assessments and penetration tests on your API endpoints to identify weak points. Keep all components updated with the latest security patches.

Error Handling and Debugging

Even with performance and security locked down, robust error handling is essential to maintain reliability - especially when juggling multiple databases. A structured approach can make debugging far less painful.

Use a consistent error format that includes clear messages, error codes, timestamps, and request IDs. This makes it easier to pinpoint issues without revealing sensitive details.

Comprehensive logging and monitoring are non-negotiable. Log all cross-database operations, including query execution times, connection statuses, and data consistency checks. These logs are invaluable when diagnosing performance problems or data mismatches.

Leverage HTTP status codes to provide quick context about errors. For example, use 400 for bad requests, 401 for authentication failures, and 500 for server errors. This allows clients to handle errors more effectively.
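The error format and status-code mapping described above can live in one function so every endpoint reports failures the same way. This sketch assumes a hypothetical `AuthenticationError` class and an illustrative exception-to-status table (it also maps `PermissionError` to 403, the usual code for an authenticated caller who lacks access). Unexpected exceptions become a generic 500 so internal details such as SQL text or connection strings never reach the client.

```python
import json
import uuid
from datetime import datetime, timezone
from typing import Optional


class AuthenticationError(Exception):
    """Hypothetical error raised when a token or API key is missing or invalid."""


# Illustrative mapping from exception types to (HTTP status, stable error code).
ERROR_TABLE = [
    (ValueError, 400, "BAD_REQUEST"),
    (AuthenticationError, 401, "UNAUTHENTICATED"),
    (PermissionError, 403, "FORBIDDEN"),
    (ConnectionError, 503, "UPSTREAM_UNAVAILABLE"),
]


def error_response(exc: Exception, request_id: Optional[str] = None) -> tuple:
    """Return (status, JSON body) in the same shape for every failure."""
    status, code = next(
        ((s, c) for exc_type, s, c in ERROR_TABLE if isinstance(exc, exc_type)),
        (500, "INTERNAL_ERROR"),
    )
    # Only 4xx errors echo the exception text; server-side details stay in the logs.
    message = str(exc) if status < 500 else "The request could not be completed."
    body = {
        "error": {
            "code": code,
            "message": message,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "request_id": request_id or str(uuid.uuid4()),
        }
    }
    return status, json.dumps(body)
```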

Cross-database operations often require distributed transaction handling. Use two-phase commit protocols or compensating transactions to maintain data consistency when actions span multiple databases. Plan for scenarios where one operation succeeds, but another fails.
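Two-phase commit needs every participating database to support a prepare step, so compensating transactions (often called the saga pattern) are the more common choice across heterogeneous systems. In this minimal sketch, each step is paired with an action that undoes it, and a failure triggers the undo actions for completed steps in reverse order. The functions in the usage part are hypothetical.

```python
from typing import Callable

Step = tuple[Callable[[], None], Callable[[], None]]  # (action, compensation)


def run_saga(steps: list) -> None:
    """Run each action in order; if one fails, undo the completed ones in reverse.

    This yields eventual consistency rather than the atomicity of two-phase
    commit: other readers may briefly see a write before it is compensated.
    """
    compensations: list = []
    try:
        for action, compensate in steps:
            action()
            compensations.append(compensate)
    except Exception:
        for compensate in reversed(compensations):
            compensate()
        raise


# Hypothetical usage: write an order to PostgreSQL, then an audit copy to MongoDB.
ledger: list = []


def insert_pg_order() -> None:
    ledger.append("pg: insert order 17")


def delete_pg_order() -> None:
    ledger.append("pg: delete order 17")


def insert_mongo_audit() -> None:
    raise ConnectionError("MongoDB unreachable")


def delete_mongo_audit() -> None:
    ledger.append("mongo: delete audit 17")


try:
    run_saga([(insert_pg_order, delete_pg_order), (insert_mongo_audit, delete_mongo_audit)])
except ConnectionError:
    pass
# ledger == ["pg: insert order 17", "pg: delete order 17"]
```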

To handle temporary failures, implement retry mechanisms and dead-letter queues. Use intelligent retry logic with exponential backoff to avoid overwhelming your system during network issues or high server loads.
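Retry with exponential backoff can be written as a small wrapper. This sketch uses "full jitter" (a random delay between zero and the current backoff ceiling) so that many clients do not retry in lockstep. Which exceptions count as transient, and the default delays, are assumptions to adjust for your database drivers.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    op: Callable[[], T],
    attempts: int = 5,
    base_delay: float = 0.1,
    max_delay: float = 5.0,
    retry_on: tuple = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `op`, retrying transient failures with capped exponential backoff and full jitter."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(attempts):
        try:
            return op()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of retries: let the caller log it or park it in a dead-letter queue
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, ceiling))
    raise AssertionError("unreachable")
```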

Client-side validation can catch many errors before they even reach your API. While server-side validation is still critical, addressing obvious issues on the client side improves user experience and reduces unnecessary API calls.

When troubleshooting cross-database issues, take a systematic approach to isolate the problem. Check whether the issue lies with API calls, database connections, data processing, or network connectivity. Test each database connection independently before diving into cross-database operations.

Finally, keep a close eye on data consistency. Regularly monitor for synchronization issues between databases and set up alerts for any inconsistencies. This proactive step helps maintain data integrity and builds trust with users.
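A basic consistency check compares keyed rows from the source of truth with their copy in another database and reports what differs. The sketch assumes both sides have already been normalized to the same types (otherwise `1` and `'1'` would count as a mismatch) and that each row's first column is its key; the sample rows are invented.

```python
def find_drift(source_rows, replica_rows) -> dict:
    """Compare two copies of the same data, keyed by each row's first column.

    Returns keys missing from the replica, keys only the replica has, and keys
    whose rows differ. Rows must already be normalized to the same types.
    """
    src = {row[0]: row for row in source_rows}
    dst = {row[0]: row for row in replica_rows}
    return {
        "missing_in_replica": sorted(src.keys() - dst.keys()),
        "extra_in_replica": sorted(dst.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & dst.keys() if src[k] != dst[k]),
    }


# Hypothetical rows from PostgreSQL (source of truth) and MongoDB (copy).
pg_rows = [(1, "Ada"), (2, "Lin"), (4, "Kim")]
mongo_rows = [(1, "Ada"), (3, "Bo"), (4, "Kim L.")]
drift = find_drift(pg_rows, mongo_rows)
if any(drift.values()):
    print("ALERT: databases out of sync:", drift)
```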

Using DreamFactory for Cross-Database Queries

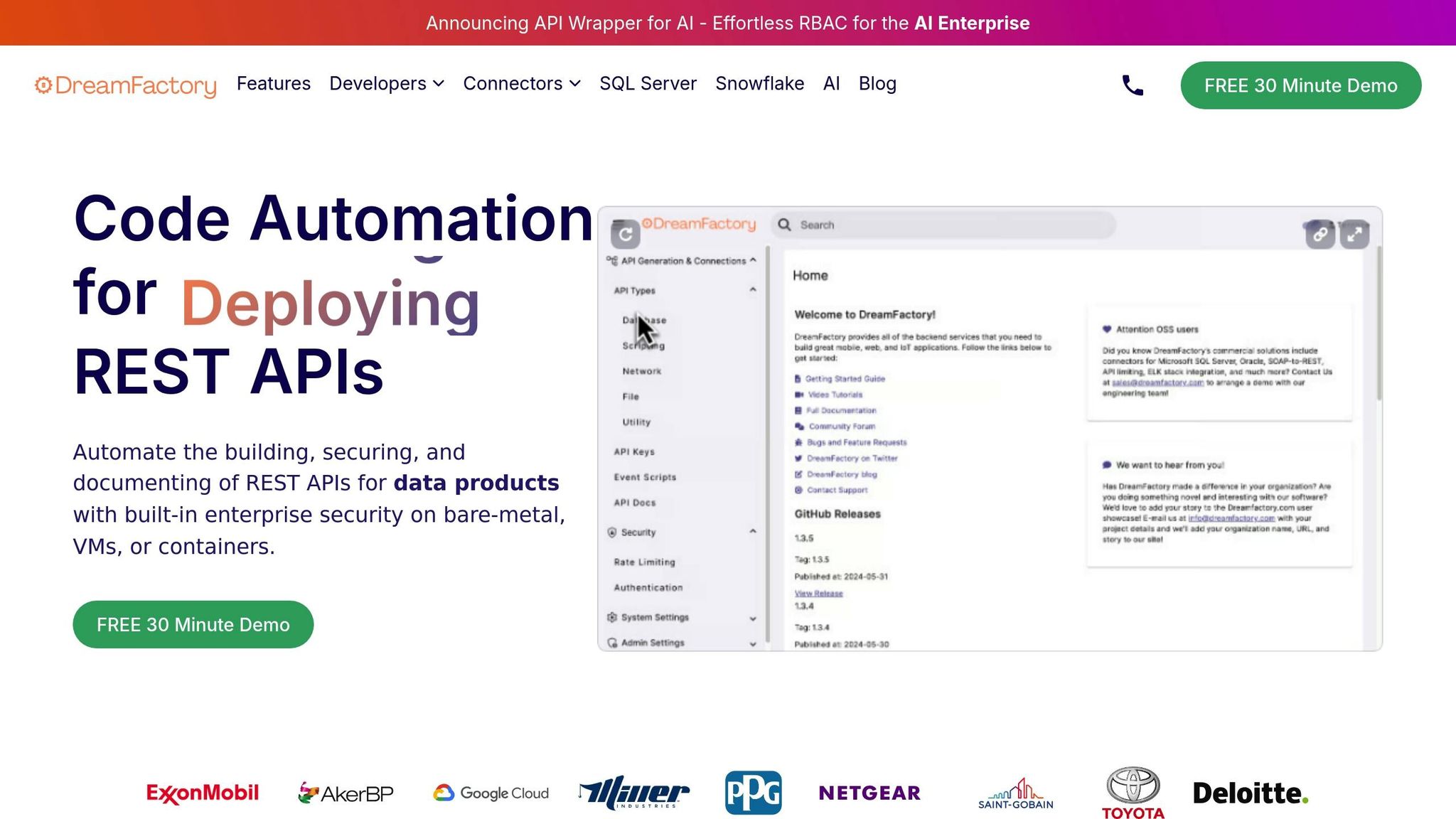

Setting up cross-database REST APIs manually can be a time-consuming process. DreamFactory simplifies this by automating API generation and configuration. Because it builds the setup and security practices described above into the platform, DreamFactory provides a streamlined, integrated solution for handling cross-database queries.

As Terence Bennett, CEO of DreamFactory Software, explains:

"At its core, DreamFactory is most useful for expediting development and decreasing time spent building APIs from scratch. By spending less time on the boring stuff, you and your team can focus on the more exciting projects that add significant value to your organization as a whole."

Automated API Generation

DreamFactory takes the hassle out of manual coding by automatically generating secure REST APIs for a wide range of data sources. Whether you're working with SQL databases like MySQL or PostgreSQL, NoSQL databases such as MongoDB, cloud storage solutions like AWS S3, or external APIs, DreamFactory handles it all from a single interface.

For example, creating a Snowflake API is straightforward: go to "API Generation & Connections" > "API Types" > "Database", select "Snowflake", and input the necessary details. DreamFactory's Snowflake Native App then generates RESTful APIs for any Snowflake table, view, or stored procedure. It scans the schema, creates the REST API, and even generates interactive Swagger documentation, making it easy to test and explore your endpoints.

DreamFactory also supports multiple system databases, including MySQL, SQL Server, PostgreSQL, and SQLite, for storing configuration settings. This ensures a seamless integration with your existing infrastructure while maintaining flexibility.

Role-Based Access Control (RBAC)

DreamFactory's RBAC system gives you precise control over API access. Administrators can define roles that align with organizational needs and assign detailed permissions for specific database operations, API endpoints, or even individual data fields.

For cross-database scenarios, roles can be tailored to fit different responsibilities. For instance, a "Financial Analyst" role might have read access to both a PostgreSQL sales database and a MongoDB customer database. Meanwhile, a "Customer Service" role might only access a limited subset of customer data. This granular control ensures users only see the data they are authorized to access. To keep security tight as your organization evolves, it's a good idea to regularly audit roles and update permissions. Using Policy as Code can help manage these authorization policies in a version-controlled and transparent way.
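Field-level restrictions like the ones described for these roles amount to projecting each record down to an allowed set of fields. The snippet below is a plain-Python illustration using the example roles above; it is not DreamFactory's role configuration format, and the field names are hypothetical.

```python
# Hypothetical field-level policy for the example roles.
VISIBLE_FIELDS = {
    "Financial Analyst": {"customer_id", "name", "region", "lifetime_value"},
    "Customer Service": {"customer_id", "name", "email"},
}


def filter_record(role: str, record: dict) -> dict:
    """Drop every field the role may not see; unknown roles see nothing."""
    allowed = VISIBLE_FIELDS.get(role, set())
    return {k: v for k, v in record.items() if k in allowed}


customer = {"customer_id": 7, "name": "Ada", "email": "ada@example.com",
            "region": "EU", "lifetime_value": 1830.5}
print(filter_record("Customer Service", customer))
# {'customer_id': 7, 'name': 'Ada', 'email': 'ada@example.com'}
```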

Server-Side Scripting and Customization

DreamFactory goes beyond access control by enabling custom business logic through server-side scripting. It supports several scripting engines, including Node.js, PHP, Python, and V8Js (JavaScript), allowing you to embed custom logic directly into API endpoints. This can include tasks like field validation, workflow triggers, and runtime calculations.

Scripts can be attached to API endpoints to handle requests before or after they are processed. For example, pre-process scripts can modify incoming requests, while post-process scripts can adjust responses before they are sent back to the client. This allows you to combine data from multiple sources - like merging customer details from PostgreSQL with order history from MongoDB - into a single, unified API response.
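The merge itself is ordinary data manipulation. The sketch below shows only that logic, with plain lists standing in for PostgreSQL customer rows and MongoDB order documents; it does not use DreamFactory's scripting objects, and the field names are hypothetical.

```python
from collections import defaultdict


def merge_customers_with_orders(customers: list, orders: list) -> list:
    """Attach each customer's orders (from a second database) to their profile.

    Customers without orders get an empty list, like a left outer join.
    """
    orders_by_customer = defaultdict(list)
    for order in orders:
        orders_by_customer[order["customer_id"]].append(order)
    return [
        {**customer, "orders": orders_by_customer.get(customer["id"], [])}
        for customer in customers
    ]


customers = [{"id": 1, "name": "Ada"}, {"id": 2, "name": "Lin"}]          # e.g. from PostgreSQL
orders = [{"order_id": 10, "customer_id": 1}, {"order_id": 11, "customer_id": 1}]  # e.g. from MongoDB
merged = merge_customers_with_orders(customers, orders)
```

Grouping the orders once into a dictionary keeps the merge linear in the number of rows, instead of scanning every order for every customer.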

DreamFactory also provides "event" and "platform" resources within scripts, giving you access to system states, configuration settings, and the ability to trigger additional workflows. For instance, a post-process script might assign an Opportunity Rating based on deal probability or send an email notification when a large account is updated. Server-side scripting also enables you to transform API responses, handle errors, format data, and implement rate limiting to prevent misuse. This level of customization ensures your APIs can meet complex business needs while maintaining high performance and security standards.

Conclusion and Key Takeaways

Cross-database queries powered by REST APIs are reshaping how organizations access and integrate data from various sources. By standardizing database connectivity, REST APIs eliminate the hassle of managing different database protocols and connection methods. This means less time spent troubleshooting, fewer errors, and better data availability across enterprise systems. It's a practical, efficient solution for modern data needs.

Summary of Setup Steps

To get started, you'll need to choose systems that align with your specific goals. Consider factors like functionality, reliability, performance, and the quality of community support and documentation. Once you've selected your systems, focus on securing connections through proper authentication and carefully designing your data transfer processes. Adding data validation mechanisms is crucial to ensure that data formats, types, and ranges remain accurate during transfers. Equally important is creating strong error-handling methods to address potential issues like network disruptions, API rate limits, or connection failures. These steps - secure connections, data validation, and robust error handling - form the backbone of reliable cross-database API operations.

Benefits of Using DreamFactory

DreamFactory simplifies API deployment by automating the process of creating secure APIs. Instead of writing the API layer by hand, you let DreamFactory generate it; the generated APIs enforce API key access by default and support role-based access control for added security.

The time savings are significant. Mark A., a user of the platform, shared:

"Will actually never need to build an API again... Fastest and easiest API management tool my department has ever used. We used DreamFactory to build the REST APIs we needed for a series of projects. And were able to save tens of thousands of developer hours it would have taken otherwise."

DreamFactory's effectiveness is backed by its 4.5 rating on G2, highlighting its ability to simplify REST API generation, streamline integrations, and maintain strong security. With support for over 20 connectors - including Snowflake, SQL Server, and MongoDB - it ensures compatibility across a wide range of database environments while maintaining consistent security and documentation standards.

Next Steps

Start by identifying your current cross-database challenges and pinpoint areas where integration could have the biggest impact. DreamFactory's automated API generation can immediately address manual coding roadblocks while delivering enterprise-grade security.

Consider running a pilot project with one or two database connections. Focus on use cases where security, scalability, and interoperability are critical. This hands-on experience will help you understand the platform's capabilities and build confidence within your organization.

FAQs

How do REST APIs make cross-database queries easier and more efficient?

REST APIs make querying across multiple databases much simpler by offering a consistent and adaptable method to access data, no matter the database technology involved. Instead of wrestling with complicated direct connections, REST APIs allow for smooth data integration across various systems. This approach helps break down data silos, enabling organizations to combine and use information more effectively, which can lead to smarter decisions and more efficient workflows.

What’s more, the stateless design of REST APIs means any server instance can handle any request, so you can add instances behind a load balancer as request volume grows without sacrificing performance. Compared to older methods, REST APIs provide a more efficient, secure, and reliable way for databases to communicate, making them a key tool for modern, data-driven applications.

What security measures should I follow when setting up cross-database queries with REST APIs?

When working with cross-database queries through REST APIs, keeping your data and systems secure should always be a top priority. Here are some essential steps to help safeguard your setup:

- Implement strong authentication and authorization: Use methods like role-based access control (RBAC), API key management, or OAuth to ensure that only authorized users can interact with your APIs.

- Encrypt data during transit: Protect communication between clients and servers by using TLS (Transport Layer Security). This ensures that data remains confidential and safe from interception.

- Validate and sanitize inputs: Prevent SQL injection and other malicious attacks by thoroughly checking and cleaning all incoming data before processing it.

- Monitor and log API activity: Keep an eye on API access logs to identify suspicious behavior or potential security issues. Regular audits can help you respond quickly to any incidents.

By adopting these practices, you can significantly strengthen the security of your cross-database queries while maintaining system reliability.

How does DreamFactory make it easier to create and manage REST APIs for cross-database queries?

DreamFactory takes the hassle out of building and managing REST APIs for cross-database queries by automating API creation directly from your databases. With compatibility for over 20 database connectors - like Snowflake, SQL Server, and MongoDB - it enables you to pull data from multiple sources and combine it effortlessly through a single API endpoint.

Beyond making integrations easier, DreamFactory prioritizes security with features like role-based access control (RBAC), API key management, and OAuth support. This approach not only cuts down on development time but also simplifies the process of securely handling cross-database queries in various environments.


Kevin Hood is an accomplished solutions engineer specializing in data analytics and AI, enterprise data governance, data integration, and API-led initiatives.
